Adobe researchers develop 'SceneComposer', an image generation system that lets you intuitively specify layouts with paint and easily replace parts of an image

A research team from Johns Hopkins University and Adobe Research has developed 'SceneComposer', an image generation system that lets you specify where objects are placed using hand-drawn layouts and fine-tune images after they are generated.

SceneComposer: Any-Level Semantic Image Synthesis

GitHub - zengxianyu/scenec

https://github.com/zengxianyu/scenec

SceneComposer: Any-Level Semantic Image Synthesis

https://zengyu.me/scenec/

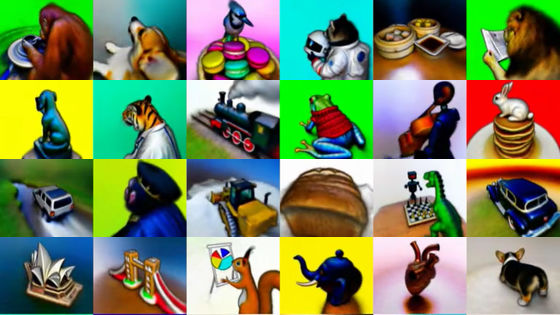

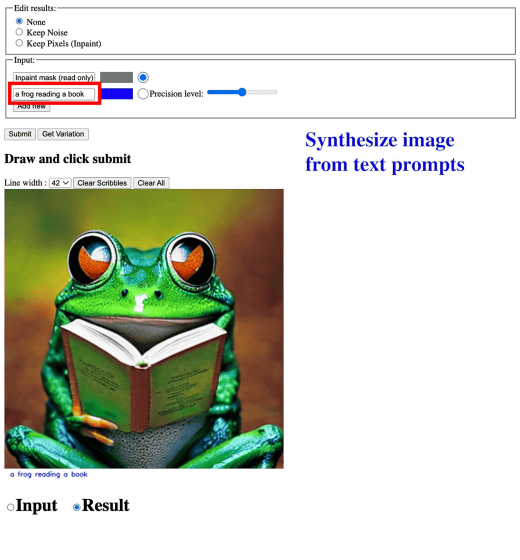

Like ordinary image generation AI, 'SceneComposer' can generate images from text alone. In the demo video published by the research team, entering the text 'a frog reading a book' produced an image like this.

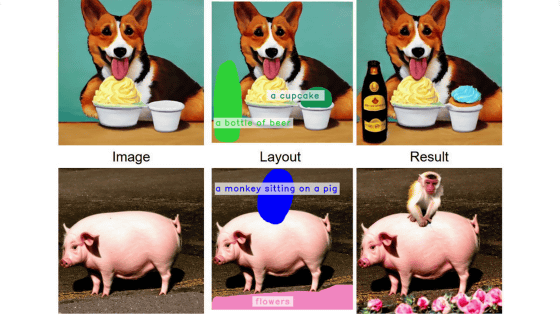

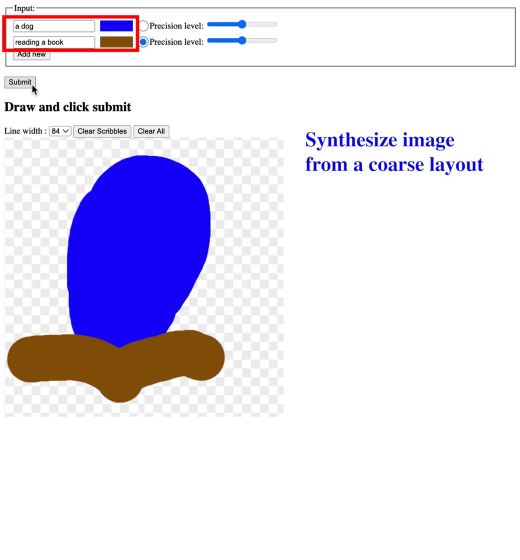

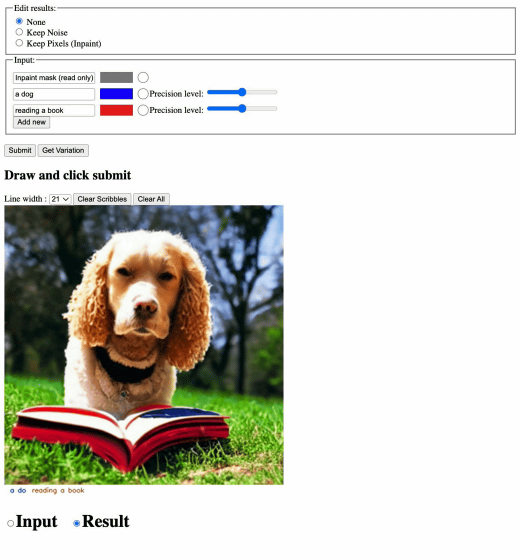

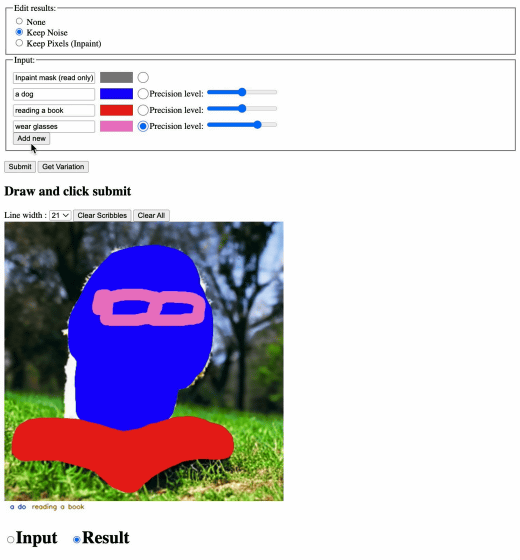

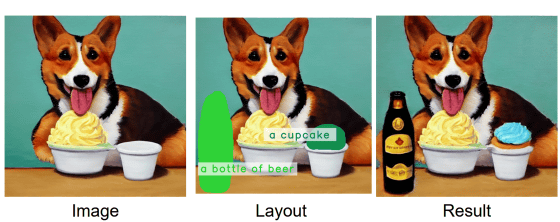

You can also split the text into parts and specify the layout by assigning each part a color and painting with it. For example, split the text 'a dog reading a book' into two parts, 'a dog' and 'reading a book', then paint 'a dog' in blue and 'reading a book' in brown.

The resulting image followed the layout specified in advance.

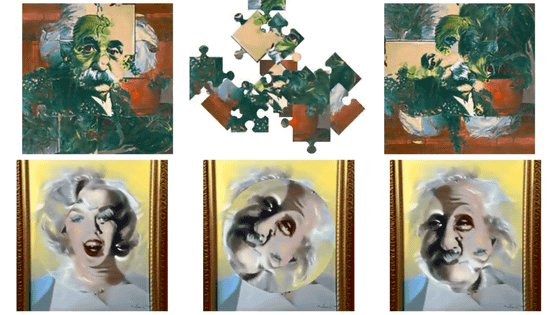

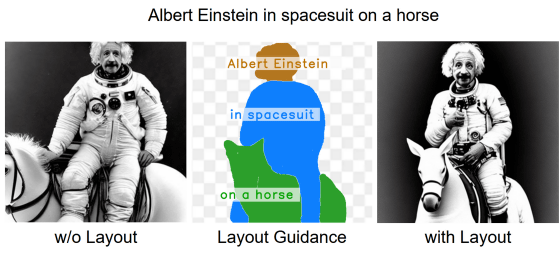

For the text 'Albert Einstein in spacesuit on a horse', the leftmost image was generated without specifying a layout. Specifying the layout shown in the middle produced the rightmost image.

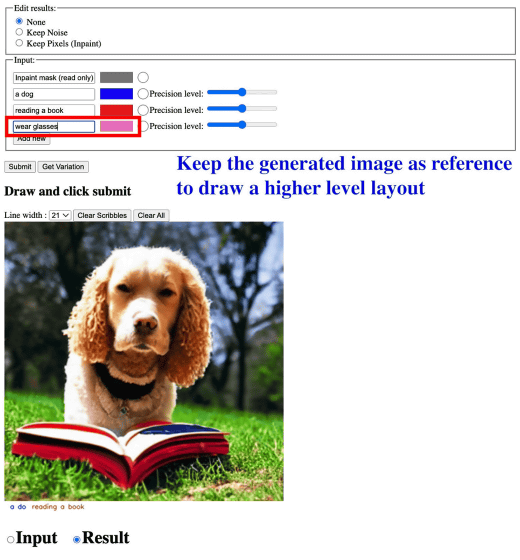

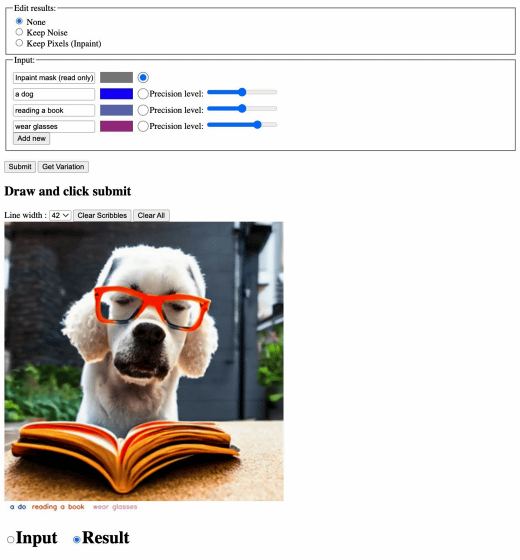

It is also possible to replace or add objects while preserving the composition of the image. This time, let's add the element 'wear glasses' to the image of the dog reading a book.

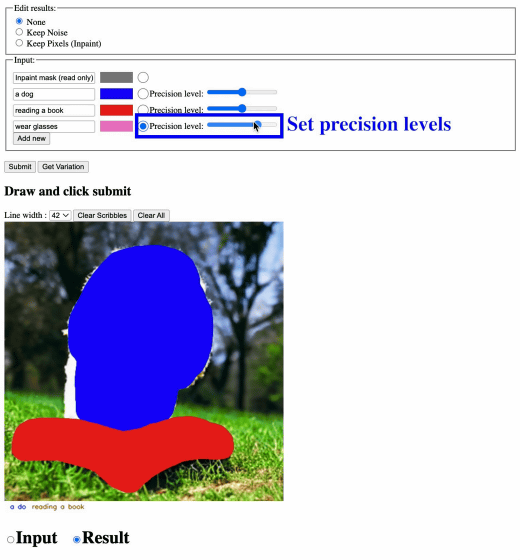

You can also control how closely a particular part follows its painted region by adjusting the 'Precision level' setting next to each text entry and its assigned color.

Let's paint the area where the glasses should go in pink, the color assigned to 'wear glasses', and generate the image. SceneComposer can also keep the image's noise fixed when regenerating it.

The generated image looks like this: the dog is still reading a book but is now wearing glasses, and the composition and atmosphere are preserved.

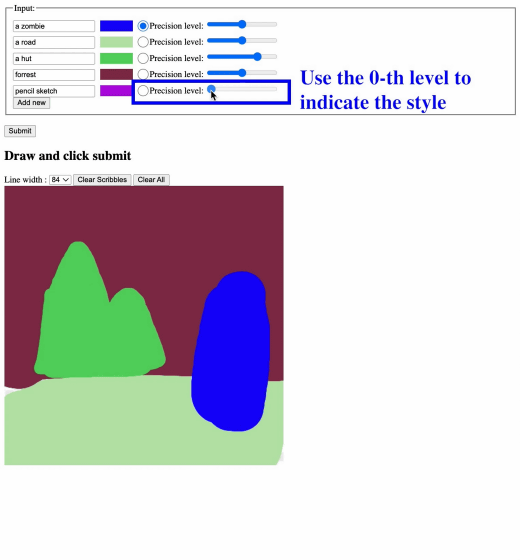

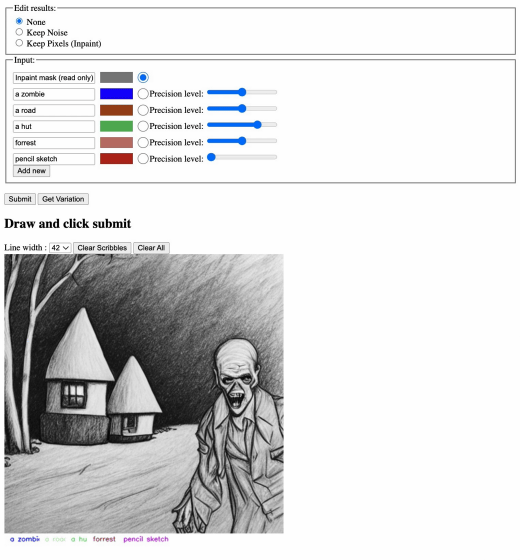

Next, let's generate an image from the text entries 'a zombie', 'a road', 'a hut', 'forest', and 'pencil sketch'. Because 'pencil sketch' specifies a style rather than an object, it is best to set its 'Precision level' to '0'.

A zombie in front of a hut and trees was generated with a pencil-like touch.

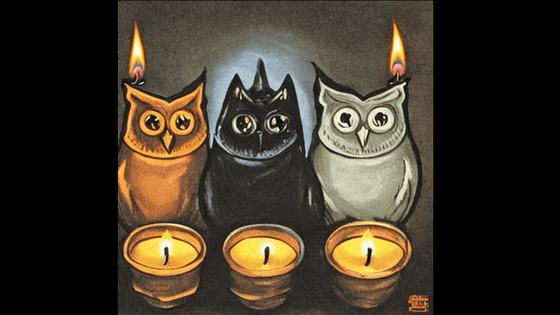

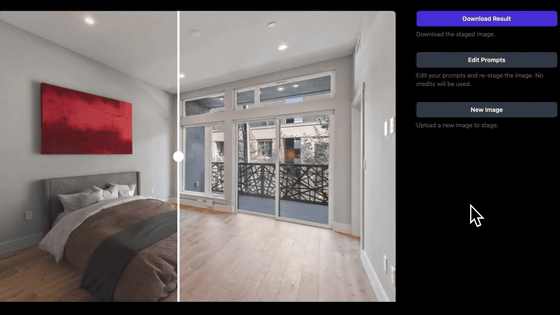

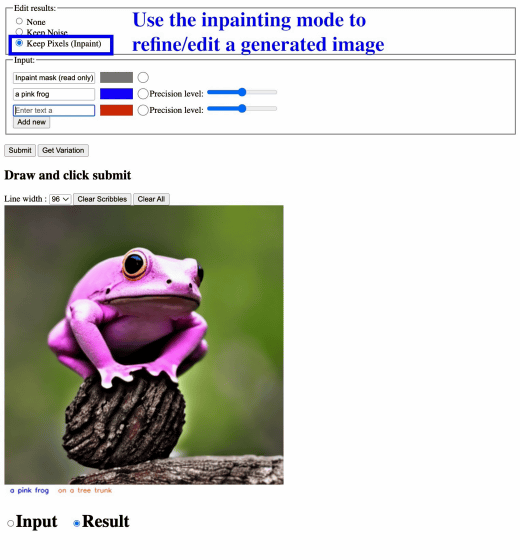

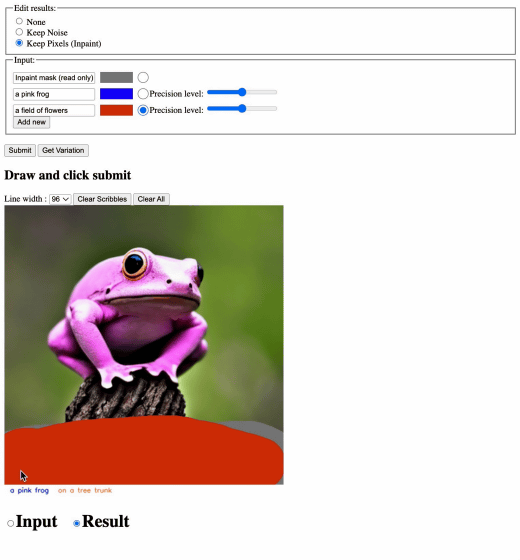

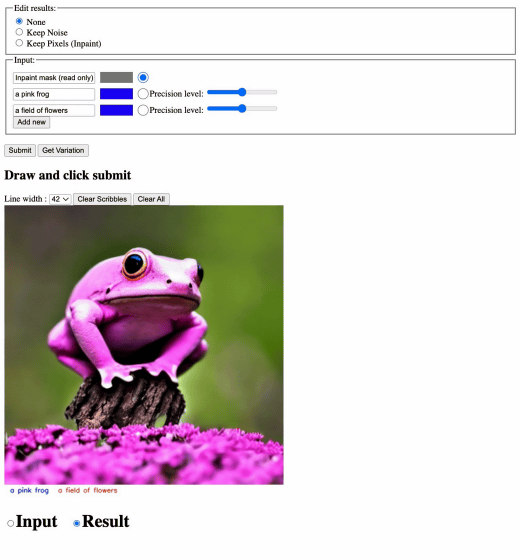

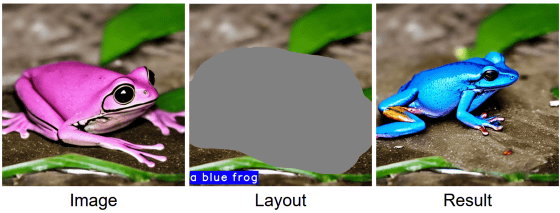
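To make the idea of text entries, paint colors and precision levels more concrete, here is a minimal sketch of how such a layout could be represented and turned into per-entry masks. Everything in it is hypothetical: the `Segment` class, the `segment_masks` function, the colors, and the precision values are illustrative assumptions, not SceneComposer's actual code or settings. It also simplifies precision to two cases: level 0 makes an entry apply to the whole image (as recommended above for 'pencil sketch'), and any higher level uses exactly the painted pixels.

```python
from dataclasses import dataclass

RGB = tuple[int, int, int]


@dataclass
class Segment:
    """One text entry in a hypothetical layout: prompt text, paint color, precision level."""
    text: str
    color: RGB
    precision_level: int  # hypothetical: 0 = ignore the painted shape, apply everywhere


def segment_masks(canvas: list[list[RGB]], segments: list[Segment]) -> dict[str, list[list[bool]]]:
    """Turn a painted canvas into one boolean mask per text entry (simplified sketch)."""
    height, width = len(canvas), len(canvas[0])
    masks = {}
    for seg in segments:
        if seg.precision_level == 0:
            # Style-like entries (e.g. 'pencil sketch') cover the whole canvas.
            masks[seg.text] = [[True] * width for _ in range(height)]
        else:
            # Object entries cover exactly the pixels painted in their color.
            masks[seg.text] = [[px == seg.color for px in row] for row in canvas]
    return masks


# Hypothetical colors for a tiny 2x3 canvas.
BLUE, BROWN, WHITE = (0, 0, 255), (139, 69, 19), (255, 255, 255)
canvas = [
    [BLUE, BLUE, WHITE],
    [BROWN, BROWN, BROWN],
]
layout = [
    Segment("a zombie", BLUE, precision_level=3),
    Segment("a road", BROWN, precision_level=3),
    Segment("pencil sketch", WHITE, precision_level=0),
]
masks = segment_masks(canvas, layout)
print(masks["a zombie"])       # [[True, True, False], [False, False, False]]
print(masks["pencil sketch"])  # [[True, True, True], [True, True, True]]
```

A real system would presumably also treat intermediate precision levels as progressively coarser versions of the painted shape; this sketch leaves that out to keep the two extremes easy to see.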

If you check 'Keep Pixels', you can add or replace only the parts you want to change while leaving the rest of the generated image untouched.

Here, the layout for the element 'a field of flowers' is specified only at the bottom of the generated image.

The ground then turned into a field of flowers while the frog and the background stayed unchanged.

This is what editing part of an image while preserving the parts you do not want to change looks like. "Our framework flexibly supports users with different drawing expertise and who are at different stages of the creative workflow," said the research team.
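The article does not explain how 'Keep Pixels' works internally. A common technique for this kind of edit is mask compositing: keep the original pixels outside the edited region and take newly generated pixels inside it. The sketch below shows only that generic technique; `keep_pixels` is a hypothetical helper, and the string "pixels" ('sky', 'frog', 'flowers', and so on) are stand-ins for real color values, not anything from SceneComposer.

```python
from typing import TypeVar

P = TypeVar("P")  # any pixel type: RGB tuples, grayscale ints, or labels as here


def keep_pixels(original: list[list[P]], regenerated: list[list[P]],
                edit_mask: list[list[bool]]) -> list[list[P]]:
    """Generic mask composite: regenerated pixels where the mask is True, original elsewhere."""
    if not (len(original) == len(regenerated) == len(edit_mask)):
        raise ValueError("images and mask must have the same height")
    out = []
    for orig_row, new_row, mask_row in zip(original, regenerated, edit_mask):
        if not (len(orig_row) == len(new_row) == len(mask_row)):
            raise ValueError("images and mask must have the same width")
        out.append([new if m else old for old, new, m in zip(orig_row, new_row, mask_row)])
    return out


# Stand-in 3x3 images: only the bottom row is marked for editing.
original = [["sky", "sky", "sky"], ["sky", "frog", "sky"], ["ground", "ground", "ground"]]
regenerated = [["cloud", "cloud", "cloud"], ["sky", "toad", "sky"], ["flowers", "flowers", "flowers"]]
edit_mask = [[False] * 3, [False] * 3, [True] * 3]

result = keep_pixels(original, regenerated, edit_mask)
print(result[1])  # ['sky', 'frog', 'sky']  (frog unchanged)
print(result[2])  # ['flowers', 'flowers', 'flowers']  (ground replaced)
```

Diffusion-based editors often apply this kind of blend repeatedly during the denoising process rather than once at the end, so the new region fits its surroundings; the single final composite above is the simplest version of the idea.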

At the time of writing, no detailed information had been posted on the 'SceneComposer' GitHub page, but the page is scheduled to be updated soon, and a web application is also slated for release.
