A former Twitter engineer explains why Twitter's systems kept running even after two-thirds of its employees were fired

Matthew Tejo, a former Site Reliability Engineer (SRE) at Twitter, has published the following blog post:

Why Twitter Didn't Go Down: From a Real Twitter SRE

https://matthewtejo.substack.com/p/why-twitter-didnt-go-down-from-a

Tejo worked at Twitter as a Site Reliability Engineer for five years, four of them as the only SRE on the caching team. His first project after joining the team was replacing the cache servers' machines with new ones. At that time none of the tooling was automated: he was handed a spreadsheet listing the server names and managed and operated everything by hand.

Mr. Tejo, who was responsible for automation, reliability, and operations on the cache team, said: "I designed and implemented most of the tools that automate Twitter's cache, so I'm qualified to talk about this. There may be only one or two other people who can speak about this system."

Caching is critical to delivering content on the Internet. Instead of every request being served directly by the main server, a cache server that holds a copy of the content answers it, which spreads load away from the main server. At Twitter, tweets, timelines, direct messages, advertisements, authentication data, and more were served from cache servers.
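To make the load-shifting idea concrete, here is a minimal cache-aside sketch. The `Origin` and `CacheAside` classes are hypothetical illustrations, not Twitter's code, and the source does not say Twitter used exactly this read pattern. Eviction and expiry are deliberately left out.

```python
from __future__ import annotations


class Origin:
    """Hypothetical backing service; counts how many reads actually reach it."""

    def __init__(self, data: dict[str, str]):
        self.data = data
        self.reads = 0

    def get(self, key: str) -> str:
        self.reads += 1
        return self.data[key]


class CacheAside:
    """Minimal cache-aside reader: check the cache first, fall back to the origin on a miss."""

    def __init__(self, origin: Origin):
        self.origin = origin
        self.store: dict[str, str] = {}

    def get(self, key: str) -> str:
        if key in self.store:          # hit: the origin is not touched
            return self.store[key]
        value = self.origin.get(key)   # miss: read from the origin once...
        self.store[key] = value        # ...and keep a copy for later requests
        return value


origin = Origin({"tweet:1": "hello world"})
cache = CacheAside(origin)
for _ in range(1000):
    cache.get("tweet:1")
print(origin.reads)  # → 1
```

A thousand reads of the same key cost the origin a single read; everything else is absorbed by the cache.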

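According to Tejo, Twitter's cache runs as Aurora jobs on Mesos: the cluster manager Mesos keeps track of the servers, and the job scheduler Aurora, which runs on top of Mesos, keeps the cache jobs running.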

For example, in a cache cluster of 100 servers, if one server fails for some reason, Mesos detects the failure and removes that server from the pool. Aurora then notices that only 99 cache servers are running and automatically finds a new server, bringing the count back to 100. No human intervention is needed at any point in this sequence.
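The detect-and-replace loop described above can be sketched as a simple reconciliation step. This is a hypothetical model for illustration only: `CacheJob`, `remove_failed`, and `reconcile` are invented names, and real Mesos/Aurora behavior (health checks, resource offers, scheduling) is far more involved.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CacheJob:
    """Hypothetical model of one cache cluster: a desired size and the hosts it runs on."""
    desired: int
    running: set[str] = field(default_factory=set)


def remove_failed(job: CacheJob, failed: set[str]) -> None:
    """Plays the cluster manager's role (Mesos in the article): drop hosts seen as failed."""
    job.running -= failed


def reconcile(job: CacheJob, spare_hosts: list[str]) -> list[str]:
    """Plays the scheduler's role (Aurora in the article): start replacements until the
    running count matches the desired count. Returns the hosts that were started."""
    started = []
    while len(job.running) < job.desired and spare_hosts:
        host = spare_hosts.pop(0)
        job.running.add(host)
        started.append(host)
    return started


job = CacheJob(desired=100, running={f"cache{i:03d}" for i in range(100)})
remove_failed(job, {"cache042"})
print(len(job.running))                          # → 99
print(reconcile(job, ["spare001", "spare002"]))  # → ['spare001']
print(len(job.running))                          # → 100
```

The key design point is that the scheduler compares desired state with observed state and closes the gap on its own, so no operator has to notice the failure.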

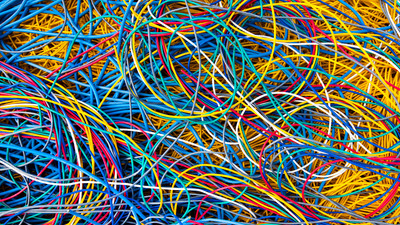

In a data center, servers are mounted in frames called "racks"; they connect to servers on other racks through devices called switches and finally reach the Internet through a router. One rack holds 20 to 30 servers, so if the rack fails, a switch breaks, or the power goes out, every server on that rack goes down at once.

Another advantage of Aurora and Mesos, according to Tejo, is that they can avoid placing too many instances of an application on a single rack. As a result, an entire rack can go down suddenly without causing trouble, and Aurora and Mesos find new servers to host the applications that were running on it.
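A rack-spreading constraint like this can be sketched as a greedy placement that caps replicas per rack. The function `place` and the host/rack naming are hypothetical, and this is not Aurora's actual placement algorithm; it only shows why a per-rack cap bounds the damage of losing one rack.

```python
from __future__ import annotations

from collections import Counter


def place(instances: int, hosts: dict[str, str], max_per_rack: int) -> list[str]:
    """Choose hosts for `instances` replicas so no rack holds more than `max_per_rack`.

    `hosts` maps host name -> rack name. Greedy: always pick a free host on the
    rack that currently has the fewest replicas (ties broken by host name).
    """
    per_rack: Counter[str] = Counter()
    free = dict(hosts)
    chosen: list[str] = []
    while len(chosen) < instances:
        candidates = [h for h, rack in free.items() if per_rack[rack] < max_per_rack]
        if not candidates:
            raise ValueError("not enough racks to honour the per-rack limit")
        host = min(candidates, key=lambda h: (per_rack[free[h]], h))
        rack = free.pop(host)
        per_rack[rack] += 1
        chosen.append(host)
    return chosen


# Hypothetical inventory: 10 racks with 5 hosts each.
inventory = {f"r{r}h{h}": f"rack{r}" for r in range(10) for h in range(5)}
chosen = place(20, inventory, max_per_rack=2)
print(max(Counter(inventory[h] for h in chosen).values()))  # → 2
```

With 20 replicas and at most 2 per rack, losing any single rack removes at most 10% of the cluster, which the scheduler can then replace elsewhere.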

Starting from the spreadsheet he was handed when he joined, Mr. Tejo built a tool that tracks every server and keeps the cache servers spread out so that no rack holds too many of them, which means a single failure does not cause problems. However, Mesos cannot detect every kind of server failure, so hardware is monitored separately. When a disk or memory failure is detected, a repair task is assigned to data center staff through an alert dashboard.
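A tracking tool of this kind might start by auditing a spreadsheet export for racks that hold too many cache servers. The CSV layout, the column names, and the `overloaded_racks` function below are all hypothetical; the article does not describe the tool's internals.

```python
from __future__ import annotations

import csv
import io
from collections import defaultdict

# Hypothetical spreadsheet export: one row per server with its rack and role.
INVENTORY_CSV = """host,rack,role
cache001,rackA,cache
cache002,rackA,cache
cache003,rackA,cache
cache004,rackB,cache
web001,rackB,web
"""


def overloaded_racks(csv_text: str, role: str, limit: int) -> dict[str, list[str]]:
    """Return racks holding more than `limit` servers of `role`, with the offending hosts."""
    by_rack: dict[str, list[str]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["role"] == role:
            by_rack[row["rack"]].append(row["host"])
    return {rack: hosts for rack, hosts in by_rack.items() if len(hosts) > limit}


print(overloaded_racks(INVENTORY_CSV, role="cache", limit=2))
# → {'rackA': ['cache001', 'cache002', 'cache003']}
```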

In addition, the cache team uses software that tracks how many servers in each cache cluster are up. If too many servers go down within a short period, new tasks that would take a cache server out of service are refused until it is safe again. This prevents accidentally taking down an entire cache cluster and overwhelming the services it protects.
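One way to implement "refuse removals while too many servers are down" is a sliding-window counter. The class `RemovalGuard` and its `max_down`/`window_s` parameters are hypothetical; the article gives no thresholds or implementation details.

```python
from __future__ import annotations

import time
from collections import deque


class RemovalGuard:
    """Refuse new 'take a cache server out' tasks while too many servers are already down.

    `max_down` and `window_s` are hypothetical tuning knobs, not values from the article.
    """

    def __init__(self, max_down: int, window_s: float):
        self.max_down = max_down
        self.window_s = window_s
        self._down_at: deque[float] = deque()  # timestamps of recent failures

    def record_down(self, now: float | None = None) -> None:
        self._down_at.append(time.monotonic() if now is None else now)

    def allow_removal(self, now: float | None = None) -> bool:
        now = time.monotonic() if now is None else now
        # Forget failures that are older than the window.
        while self._down_at and now - self._down_at[0] > self.window_s:
            self._down_at.popleft()
        return len(self._down_at) < self.max_down


guard = RemovalGuard(max_down=3, window_s=60)
for t in (0, 10, 30):
    guard.record_down(now=t)
print(guard.allow_removal(now=40))  # → False: three failures within the last minute
print(guard.allow_removal(now=71))  # → True: the first two failures have aged out
```

Taking `now` as an optional argument keeps the guard deterministic to test while defaulting to a monotonic clock in real use.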

The team also planned for cases where too many servers go down in quick succession, or too many are under repair at once, so that Aurora cannot find new servers to put the old jobs on. To create a repair task for a failed server, the tooling first checks whether it is safe to remove the job from that server; once the server is empty, it is marked "safe for data center technicians to work on." When a data center technician marks the server as "repaired," a tool locates the repaired server and automatically activates it so it can run jobs again. In short, the only human work in the whole repair process is physically fixing the server.
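The repair flow reads naturally as a small state machine in which only one transition is a human action. The state names, `open_repair_task`, and `on_marked_repaired` are hypothetical labels for the steps the article describes, not Twitter's actual tooling; the `safe_to_remove` callback stands in for whatever safety check the real tools performed.

```python
from __future__ import annotations

from enum import Enum, auto
from typing import Callable


class State(Enum):
    ACTIVE = auto()
    FAILED = auto()
    SAFE_FOR_TECHNICIANS = auto()  # drained; a human may now work on it
    REPAIRED = auto()              # set by the technician


# Only these transitions are legal.
TRANSITIONS = {
    State.ACTIVE: {State.FAILED},
    State.FAILED: {State.SAFE_FOR_TECHNICIANS},
    State.SAFE_FOR_TECHNICIANS: {State.REPAIRED},
    State.REPAIRED: {State.ACTIVE},
}


class Server:
    def __init__(self, name: str, jobs: list[str]):
        self.name = name
        self.jobs = jobs
        self.state = State.ACTIVE

    def move(self, new: State) -> None:
        if new not in TRANSITIONS[self.state]:
            raise ValueError(f"{self.name}: illegal transition {self.state.name} -> {new.name}")
        self.state = new


def open_repair_task(server: Server, safe_to_remove: Callable[[], bool]) -> bool:
    """Automated step: drain a failed server only if removing its jobs is safe."""
    if not safe_to_remove():
        return False             # retry later; too much capacity is already out
    server.jobs.clear()          # stand-in for the scheduler moving the jobs elsewhere
    server.move(State.SAFE_FOR_TECHNICIANS)
    return True


def on_marked_repaired(server: Server) -> None:
    """Automated step that runs after the technician marks the server REPAIRED."""
    server.move(State.ACTIVE)    # jobs can be scheduled onto this server again


s = Server("cache017", jobs=["cache-job"])
s.move(State.FAILED)
print(open_repair_task(s, safe_to_remove=lambda: True), s.state.name)  # → True SAFE_FOR_TECHNICIANS
s.move(State.REPAIRED)           # the only human step: physical repair
on_marked_repaired(s)
print(s.state.name)              # → ACTIVE
```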

According to Mr. Tejo, there were bugs that prevented new cache servers from being added and bugs that made adding a server take up to 10 minutes, but thanks to the automation the team was able to fix them little by little while keeping the project on track.

This system for automating cache server maintenance appears to be one reason Twitter has not gone down. According to Tejo, Twitter has two data centers, and either one can take over for the entire site if the other fails: essentially every critical service can run from just one of them. For disaster recovery, each data center has enough capacity to carry all of Twitter's traffic alone, twice what it handles in normal operation. In short, under normal conditions each data center runs at no more than 50% utilization.

Even 50% utilization is fairly busy in practice, so the usual way to calculate capacity needs was to work out what one data center requires to serve all traffic and then add headroom on top of that. According to Mr. Tejo, an entire data center failing is very rare; it happened only once in his five years at Twitter.
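The capacity rule above is simple arithmetic, sketched here with hypothetical traffic numbers (the article gives none): size each data center for 100% of peak traffic plus headroom, then check the utilization in normal operation and during a failover.

```python
def servers_per_datacenter(peak_total_rps: int, rps_per_server: int, headroom_pct: int) -> int:
    """Servers one data center needs to serve ALL traffic alone, plus headroom.

    Integer ceiling division avoids floating-point rounding surprises.
    """
    needed = peak_total_rps * (100 + headroom_pct)
    return -(-needed // (100 * rps_per_server))


def utilization(served_rps: float, servers: int, rps_per_server: int) -> float:
    """Fraction of a data center's capacity in use."""
    return served_rps / (servers * rps_per_server)


peak, per_server = 1_000_000, 10_000  # hypothetical numbers
n = servers_per_datacenter(peak, per_server, headroom_pct=20)
print(n)                                                # → 120
print(round(utilization(peak / 2, n, per_server), 3))  # normal, traffic split over two DCs → 0.417
print(round(utilization(peak, n, per_server), 3))      # failover, one DC carries everything → 0.833
```

With zero headroom, normal utilization would be exactly 50%, which matches the "50% at maximum" figure; any headroom pushes normal utilization below that.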

Also, instead of doing all the processing in a

Mr. Tejo said: "We automated one thing after another, tackled performance problems, tested technologies to make things better, and drove large-scale cost-reduction projects. We did capacity planning and ordered servers. We were busy deciding what to do next; we weren't being paid to play games and smoke marijuana all day."

Mr. Tejo also said: "The cache that handles Twitter's requests keeps running this way. This is just part of our daily work, and it took a lot of effort. Having stepped away, I'm grateful that Twitter's cache system is still actually working. I'm sure there's a bug lurking somewhere, though..."


in Software, Web Service, Posted by log1i_yk