Code completion AI GitHub Copilot carries a "risk of destroying the open source community" in addition to its copyright issues


GitHub Copilot investigation · Joseph Saveri Law Firm & Matthew Butterick

https://githubcopilotinvestigation.com/

GitHub Copilot was developed jointly by Microsoft, which owns the software development platform GitHub, and OpenAI, an artificial intelligence development organization. The service completes partially written code and generates code in response to comments. However, some have pointed out that the code used as training data infringes copyright. Tim Davis, a computer science professor at Texas A&M University, has said that GitHub Copilot outputs copyrighted code that he wrote.

It has been pointed out that GitHub Copilot, which automatically completes source code, "outputs copyrighted code" - GIGAZINE

On October 17, local time, Butterick published a blog post titled "Investigating GitHub Copilot," reporting that he is working with the Joseph Saveri Law Firm in New York to investigate a potential lawsuit against GitHub Copilot.

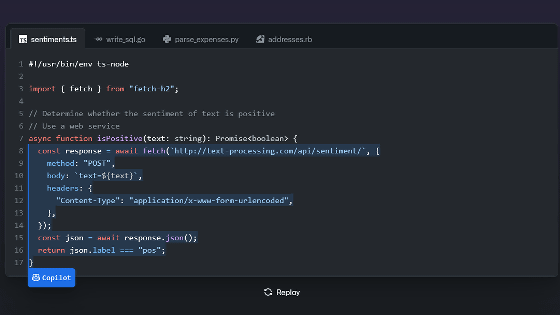

GitHub Copilot is built on Codex, a code generation AI model developed by OpenAI. OpenAI explains that Codex was trained on "tens of millions of public repositories," and Eddie Aftandilian, who was involved in the development of GitHub Copilot, has also acknowledged that "GitHub's public repositories" were used as training data.

What Butterick takes issue with here is the licensing of the open source software published on GitHub. In general, developers who use open source software must either comply with the obligations imposed by its license or use the code under the fair use provisions of copyright law. Most open source licenses require attribution, but GitHub Copilot gives no clear attribution for the software used in its training. Microsoft and OpenAI therefore argue that "the use of open source software in GitHub Copilot training is fair use."

The claim that "using copyrighted content for AI training is fair use" is certainly widespread, but there is no legal authority to support it. Software Freedom Conservancy (SFC), a digital rights organization, has reported that it asked Microsoft and GitHub for the legal basis of the claim that using data for AI training is fair use, but received no response.

On this point, Butterick said that no lawsuit in the United States has ever dealt squarely with fair use in AI training. He also pointed out that fair use is decided by a delicate balance of multiple factors, so even if the use of content in training one AI were found to be fair use, it is unclear whether the same would hold for other AIs.

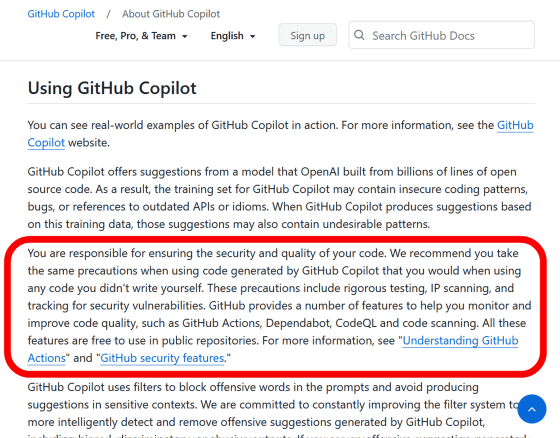

Even more problematic is that even if Microsoft and OpenAI's development of GitHub Copilot is found to be fair use, that finding does not extend to the people who use GitHub Copilot. Microsoft calls the code output by GitHub Copilot "suggestions," but it makes no guarantees about the accuracy, safety, or intellectual property status of that code.


In other words, if GitHub Copilot generates code that comes from open source software, users are responsible for complying with the license obligations attached to that code. However, GitHub Copilot provides no information about the provenance of the code it generates. As a result, users cannot even tell that a license exists, let alone what it requires, and they may be exposed to the risk of litigation.

"GitHub Copilot is just a convenient alternative interface for searching large corpora of open source code," said Butterick. Former GitHub CEO Nat Friedman, meanwhile, has stated the following:

In general: (1) training ML systems on public data is fair use (2) the output belongs to the operator, just like with a compiler. We expect that IP and AI will be an interesting policy discussion around the world in the coming years, and we're eager to participate!

Nat Friedman (@natfriedman) June 29, 2021

In addition, Butterick sees a problem in the fact that GitHub Copilot strips away information about the developers and the development community behind the code it outputs, which cuts users off from the open source community. "Microsoft is trying to create a new walled garden that prevents programmers from discovering traditional open source communities. At the very least, it removes any motivation to look for them," he said.

The advantage of open source software is that it creates a community of diverse users, testers, and contributors who work together to improve the software. These communities form when developers think "I wish there was software like this," search the Internet, and find their way to the community. However, if the desired code can be obtained immediately through GitHub Copilot, users have no reason to join the open source community, and there is a risk that the community will decline.

Regarding this situation, Butterick argues that open source developers will become nothing more than producers of resources for improving the GitHub Copilot service. "Even cows on a farm get food and shelter out of the deal. GitHub Copilot contributes nothing to individual projects. Nothing goes back to the broader open source community," he added, accusing the service of having a devastating effect on the open source community.

Butterick is not criticizing AI-assisted coding tools in general, but rather the approach Microsoft has taken with GitHub Copilot. He points out that Microsoft could have developed GitHub Copilot in a way that is friendlier to the open source community, for example by "using only licensed open source software for training data" or by "paying the developers of the code used for training data." Furthermore, the accuracy of GitHub Copilot depends on the quality of the open source software used as training data, so there are concerns that if the spread of GitHub Copilot destroys the open source community, the quality of future versions of GitHub Copilot will drop significantly.

"After all, the open source community is not a fixed group of people. This must be tested before the damage to open source becomes catastrophic, so I am preparing for legal action," said Butterick.


in AI, Software, Web Service, Posted by log1h_ik