Criticism that ``companies are escaping legal liability by leaving the creation of datasets for AI learning to universities and non-profit organizations''

Triggered by the free release of image generation AI Stable Diffusion to the general public, progress in image generation AI is progressing rapidly. On the other hand, there are also voices pointing out the rights issue of the data set used for AI model learning, and some discussions are being held to pursue legal responsibility. ``Researchers at universities and non-profit organizations have become a cover for major technology companies to escape accountability,'' said Andy Baio, a developer who is doing various activities on the Internet. increase.

AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability - Waxy.org

https://waxy.org/2022/09/ai-data-laundering-how-academic-and-nonprofit-researchers-shield-tech-companies-from-accountability/

In September 2022, Meta announced a video generation AI 'Make A Video'. This AI was to generate a video just by entering a character string (prompt).

Meta announces video generation AI 'Make A Video', releases videos of flying super dogs and teddy bears drawing self-portraits - GIGAZINE

In the paper (PDF file) , Meta wrote, ``We use two datasets, 'WebVid-10M' and 'HD-VILA-100M', for the video generation model.' Therefore, when software developer Simon Wilson examined two datasets with the open source tool ' Datasette ' for searching AI training datasets, more than 10.7 million pieces included in 'WebVid-10M' All of the video clips were watermarked by Shutterstock . In addition, 'HD-VILA-100M' is a data set composed of videos collected by Microsoft, and it turned out that millions of them were collected from YouTube.

Meta uses a series of datasets for research purposes called 'learning to AI', not for commercial use. However, Mr. Baio said, 'Meta probably trains AI models with the assumption of future commercial use. You think it's strange? Commercial use of datasets and models collected and trained by commercial entities has become commonplace.'

For example, Stable Diffusion, an image generation AI, was led by Stability AI at the time of writing the article, but originally started with research by the Machine Vision and Learning Research Group at the University of Munich Ludwig-Maximilians (LMU). increase. Researchers at LMU say that they are grateful that the development project has progressed thanks to Stability AI's donation of computers.

And the datasets used to train Stable Diffusion, Google's Imagen , and Make A Video's image generation model were all created by the German non-profit organization LAION . Stability AI also funds LAION.

Not sure if I mention but stable diffusion is a model created and released by CompVis at University of Heidelberg.

—Emad (@EMostaque) August 16, 2022

The LAION dataset is created by the German charity of the same name.

This is outlined in the announcement post.

Has implications for stuff above

Mr. Bio believes that if data collection and model learning are done by research institutes such as universities and non-profit organizations, they are likely to fall under fair use as permitted by American copyright law. However, Stability AI, which develops commercial services like ``DreamStudio,'' trains models using datasets created by universities and non-profit organizations, and generates images under a commercially available open source license. Mr. Baio criticizes that doing is a kind of data laundering.

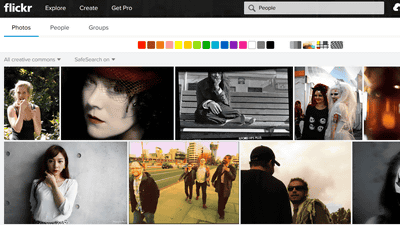

Mr. Baio mentioned that a researcher at the University of Washington used a Creative Commons license image on Flickr , a photo sharing community site, as a training dataset for face recognition AI, and touched on the legal responsibility of the dataset. increase. Mr. Bio, who was a Flickr user, said that he uploaded photos with attribution and for non-commercial purposes, but pointed out that such license terms were ignored. The University of Washington dataset was later discontinued, but a dataset created by IBM was also reported with similar issues.

Pointed out that IBM is using photos on Flickr for face recognition technology without permission from users - GIGAZINE

Mr. Bio commented, 'Getting permission for all the images in the dataset will be very costly and will slow down the progress of technology, but it is difficult to unconditionally cancel what has been released to the world.' increase.

Mr. Bio also said, ``While AI is making rapid progress in 2022, there are ethics regarding the creation of AI models and datasets, and lack of consent to use, attribution of rights, and license indications for datasets. Some people are actually working on solving this problem, but I'm skeptical: AI models, once trained, almost never forget the data, at least for now.' said.

Related Posts:

in Software, Web Service, Web Application, Posted by log1i_yk