"Textual Inversion" arrives, letting the image generation AI "Stable Diffusion" generate "an XX that looks like this image"

The image generation AI "Stable Diffusion" outputs an image that matches a sentence you enter describing the image you want to generate.

An Image is Worth One Word: Personalizing Text-to-Image Generation using Textual Inversion

https://textual-inversion.github.io/

[Tutorial] 'Fine Tuning' Stable Diffusion using only 5 Images Using Textual Inversion.

https://www.reddit.com/r/StableDiffusion/comments/wvzr7s/tutorial_fine_tuning_stable_diffusion_using_only/

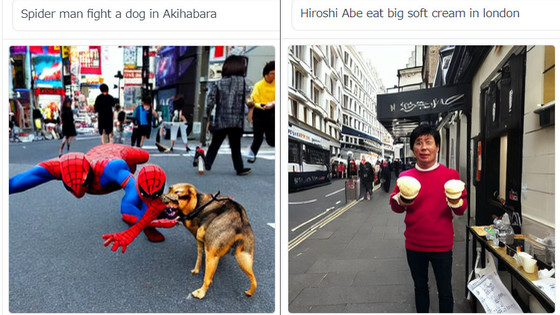

Stable Diffusion is an AI that takes text describing the image you want, such as "an image of a bear playing in the forest" or "an image of a cat and a dog watching baseball", and outputs an image that matches it. The following article shows the kinds of images Stable Diffusion can produce.

AI "Stable Diffusion", which creates pictures and photos that look as if humans drew them from keywords, has been released to the public, so I tried using it - GIGAZINE

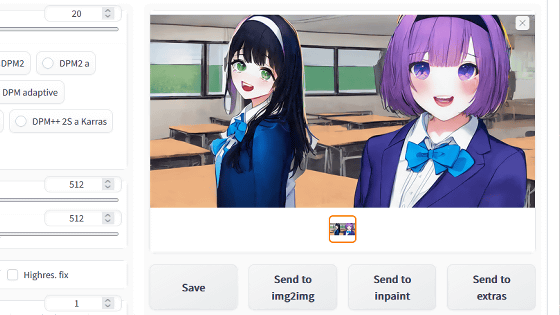

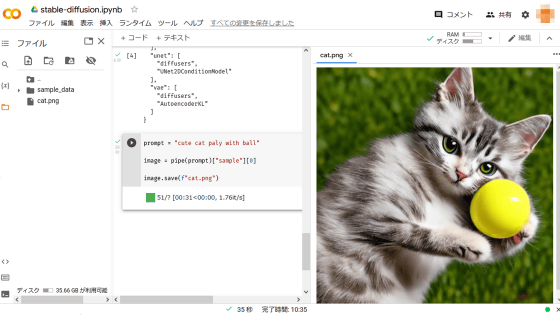

The article above uses the Stable Diffusion demo page, which can involve long waits. If you want to generate images quickly without waiting, we recommend building an execution environment on your own machine, or setting one up in Google's Python execution environment "Colaboratory", by following the article below.

Summary of how to use the image generation AI "Stable Diffusion" for free and without waiting, even on low-spec PCs - GIGAZINE

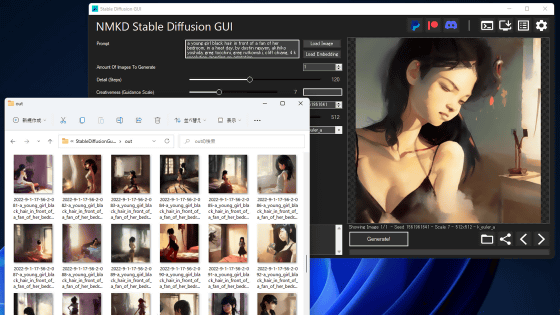

Stable Diffusion is easy to use on the demo page or in an environment you build yourself, but the output often differs from what you had in mind. For example, if you enter a sentence such as "an image of a bear playing in the forest", you may find that "I wanted it to look like an animation, but it looks like live action" or "I wanted a deformed, cartoonish bear, but a realistic bear came out." To solve this problem, a technique called "Textual Inversion" was devised to output images closer to what you imagine.

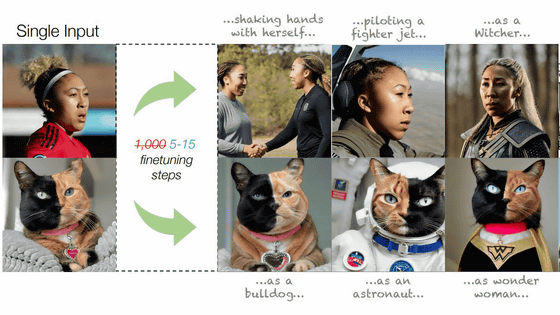

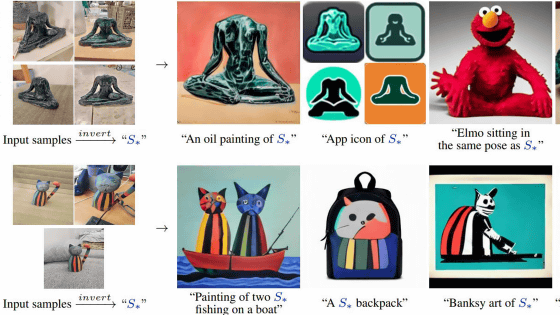

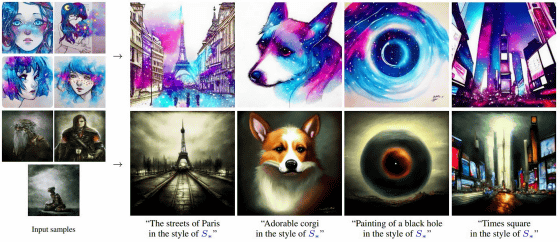

"Textual Inversion" is a technique that compresses elements of an image such as its "style", "type of subject", and "color" into a word, which can then be used to give instructions to the AI. The diagram below shows how Textual Inversion works. The style of the leftmost image is compressed into the word "S*", and simply giving instructions such as "Paris in S* style", "a dog in S* style", "a black hole in S* style", and "Times Square in S* style" outputs images with a similar style.
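The paper describes learning an embedding vector for a new placeholder word such as "S*" from a handful of example images, while the text-to-image model itself stays frozen. The sketch below shows that optimization idea in miniature. It is a toy under loud assumptions: the "model" is a hypothetical fixed linear map, the "images" are short lists of made-up feature numbers, and plain gradient descent on a squared-error loss stands in for the diffusion training loss used in practice.

```python
import random

# HYPOTHETICAL toy stand-in for a frozen text-to-image model: a fixed linear
# map from a DIM-number word embedding to FEAT "image feature" numbers.
# The real Stable Diffusion model is a large neural network; this is NOT it.
DIM, FEAT = 4, 6
_rng = random.Random(0)
W = [[_rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(FEAT)]


def frozen_model(embedding):
    """Return image features for an embedding. W is never updated."""
    return [sum(w * e for w, e in zip(row, embedding)) for row in W]


def sq_dist(a, b):
    """Squared Euclidean distance between two feature lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def learn_pseudo_word(example_features, steps=3000, lr=0.02):
    """Learn an embedding for the new word "S*" by gradient descent.

    Only the S* vector is optimized; the model weights W stay frozen.
    The loss is the mean, over the example images, of the squared distance
    between the model's output and that example's features.
    """
    s_star = [0.0] * DIM
    n = len(example_features)
    for _ in range(steps):
        out = frozen_model(s_star)
        grad = [0.0] * DIM
        for target in example_features:
            for i in range(FEAT):
                err = out[i] - target[i]
                for j in range(DIM):
                    # d/dS* of (out_i - target_i)^2, averaged over examples
                    grad[j] += 2.0 * err * W[i][j] / n
        s_star = [s - lr * g for s, g in zip(s_star, grad)]
    return s_star


# HYPOTHETICAL example set: four "images" that share one underlying style,
# plus per-image variation that cancels out on average (+d and -d pairs).
true_style = [_rng.uniform(-1, 1) for _ in range(DIM)]
base = frozen_model(true_style)
examples = []
for _ in range(2):
    d = [_rng.uniform(-0.3, 0.3) for _ in range(FEAT)]
    examples.append([b + x for b, x in zip(base, d)])
    examples.append([b - x for b, x in zip(base, d)])

s_star = learn_pseudo_word(examples)
print("learned S* embedding:", [round(v, 3) for v in s_star])
print("squared error vs. shared style:", sq_dist(frozen_model(s_star), base))
```

The design point the toy is meant to show is what gets trained: a single new word vector, not the model, which is why a few example images can be enough.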

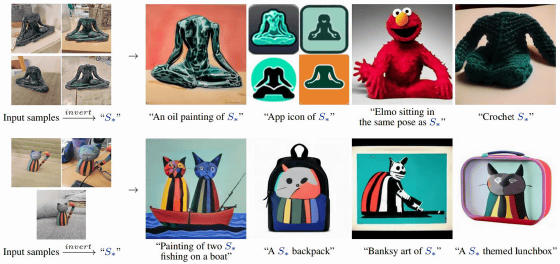

With Textual Inversion, not only the painting style but also elements such as the type and pose of the subject can be compressed into a word. In the example below, instructions such as "an oil painting of S*", "Elmo sitting in the same pose as S*", "S* drawn by Banksy", and "a lunch box with S* as a motif" are carried out.
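Once "S*" has an embedding, it behaves like any other word: it can be dropped into new sentences, and the rest of the vocabulary is left untouched. The following toy sketch illustrates only that lookup step. It assumes a hypothetical whitespace tokenizer, made-up 2-number embeddings, and a made-up learned vector for "S*"; the real system uses Stable Diffusion's own text encoder and tokenizer.

```python
# HYPOTHETICAL toy vocabulary: word -> embedding (2 numbers for brevity).
BASE_VOCAB = {
    word: [round(0.1 * i, 1), round(1 - 0.1 * i, 1)]
    for i, word in enumerate(
        ["drawn", "by", "Banksy", "Elmo", "sitting", "like", "oil", "painting", "of"]
    )
}


def add_pseudo_word(vocab, token, vector):
    """Return a copy of vocab with a new token; existing entries are unchanged."""
    if token in vocab:
        raise ValueError(f"{token!r} is already in the vocabulary")
    extended = dict(vocab)
    extended[token] = list(vector)
    return extended


def embed_prompt(prompt, vocab):
    """Look up one embedding per whitespace-separated word (toy tokenizer)."""
    return [vocab[word] for word in prompt.split()]


learned_s_star = [0.42, -0.17]  # HYPOTHETICAL result of the training step
vocab = add_pseudo_word(BASE_VOCAB, "S*", learned_s_star)

for prompt in ["S* drawn by Banksy", "Elmo sitting like S*", "oil painting of S*"]:
    print(prompt, "->", embed_prompt(prompt, vocab))
```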

Reddit user ExponentialCookie explains how to use Textual Inversion to give Stable Diffusion precise instructions. ExponentialCookie's step-by-step explanation can be viewed in the embed below. Note, however, that this method requires a graphics card with at least 20 GB of memory.
