Report finds examples of 'coincidental matches' in Apple's controversial photo-scanning system

Apple in August 2021

ImageNet contains naturally occurring NeuralHash collisions

https://blog.roboflow.com/nerualhash-collision/

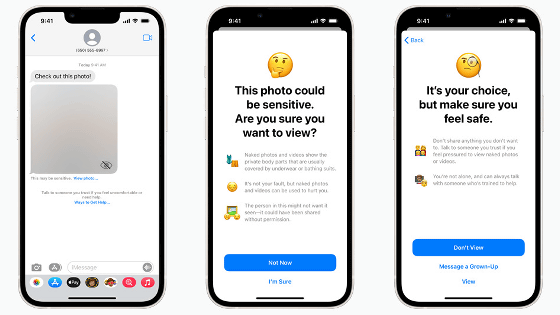

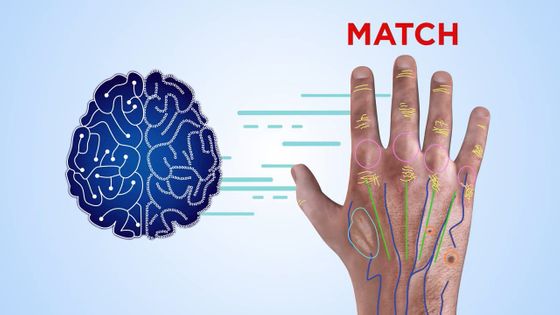

Apple's system for scanning for child sexual abuse material (CSAM) generates hash values from user images stored on devices and in the cloud, then compares them against a database of hashes of known CSAM to detect child pornography. However, security researchers have pointed out a weakness in NeuralHash, the algorithm Apple uses to generate these hashes: 'hash collisions', in which different images produce the same hash value, can be generated artificially.

Apple's 'Child Pornography Detection System in iPhone' Turns Out to Have a Big Hole - GIGAZINE

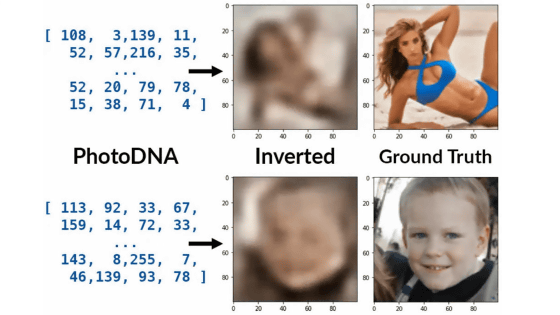

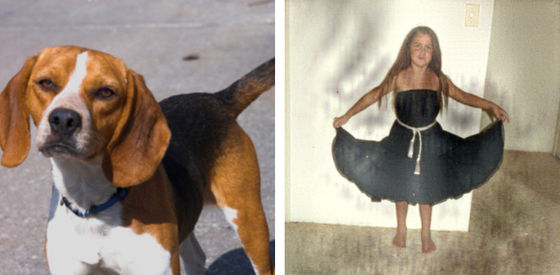

Below is an example of an artificial hash collision. Here, the image of the girl on the right has been modified so that it produces the same hash value as the image of the dog on the left, deliberately creating a collision.

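Brad Dwyer, co-founder of image recognition technology developer Roboflow, tested how often NeuralHash collisions occur naturally by running the algorithm over the images in ImageNet, a large image dataset.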

The experiment found 8,272 hash collisions between exactly identical images and 595 collisions between images that differed only slightly, for example in resolution. In other words, NeuralHash correctly matched images even after subtle changes. However, it also found two collisions between unrelated images that had not been edited and were clearly different pictures.
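Counting collisions like this can be sketched by bucketing images by their hash value: any two images in the same bucket collide. This is a minimal illustration, not Dwyer's actual code; the image names and hash strings below are hypothetical placeholders. Deciding whether a collision is a correct match (a resized copy) or a false one (two different pictures) still has to be done separately, for example by looking at the images.

```python
from collections import defaultdict
from itertools import combinations


def find_collisions(hashes):
    """Return every pair of image IDs that share the same hash value.

    `hashes` maps a (hypothetical) image ID to its hash string.
    """
    by_hash = defaultdict(list)
    for image_id, h in hashes.items():
        by_hash[h].append(image_id)
    pairs = []
    for ids in by_hash.values():
        # Every pair of images inside one bucket is a hash collision.
        pairs.extend(combinations(sorted(ids), 2))
    return pairs


# Hypothetical hash values, for illustration only.
example = {
    "dog.jpg": "hash_A",
    "dog_small.jpg": "hash_A",  # resized copy: an expected, correct match
    "nail.jpg": "hash_B",
    "ski.jpg": "hash_B",        # different pictures: a false match
    "cat.jpg": "hash_C",
}
print(find_collisions(example))
# → [('dog.jpg', 'dog_small.jpg'), ('nail.jpg', 'ski.jpg')]
```

Grouping by hash keeps the work proportional to the number of images rather than comparing every pair directly.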

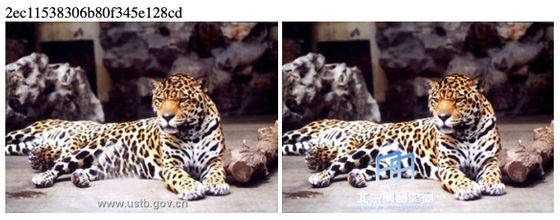

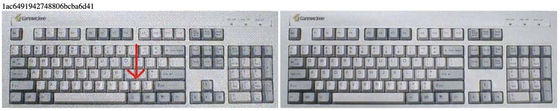

Below is an example of a hash collision that worked as intended. Even after a significant reduction in resolution, NeuralHash recognized that the two images were the same.

The same was true for images that differed only in the presence or absence of arrows or logos.

Even if the image was stretched horizontally, it was recognized as the same image.
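Why a perceptual hash survives resizing can be illustrated with a much simpler algorithm than NeuralHash. The sketch below swaps in the classic "average hash" (aHash) as a stand-in, assuming grayscale images given as lists of rows; NeuralHash itself uses a neural network and works differently. Because the hash is computed from a coarse 8 x 8 summary of the image, enlarging or shrinking the picture leaves that summary, and therefore the hash, unchanged.

```python
def average_hash(pixels, size=8):
    """Simplified average hash (aHash), used here as a stand-in for NeuralHash.

    Block-averages a grayscale image down to size x size cells, then emits one
    bit per cell: 1 if the cell is brighter than the mean of all cells.
    Height and width are assumed to be multiples of `size` to keep it short.
    """
    h, w = len(pixels), len(pixels[0])
    bh, bw = h // size, w // size
    cells = []
    for by in range(size):
        for bx in range(size):
            block = [pixels[y][x]
                     for y in range(by * bh, (by + 1) * bh)
                     for x in range(bx * bw, (bx + 1) * bw)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for c in cells:
        bits = (bits << 1) | (1 if c > mean else 0)
    return bits


def upscale(pixels, factor):
    """Nearest-neighbour upscaling: repeat each pixel `factor` times in both directions."""
    return [[v for v in row for _ in range(factor)]
            for row in pixels for _ in range(factor)]


# A synthetic 16 x 16 test pattern (hypothetical data, not a real photo).
img = [[(x * 37 + y * 91) % 256 for x in range(16)] for y in range(16)]
big = upscale(img, 2)  # 32 x 32 version of the same picture
print(average_hash(img) == average_hash(big))  # → True
```

Real images resized with smoothing filters will not always produce a bit-for-bit identical hash, which is why perceptual hashes are often compared by how many bits differ rather than by exact equality.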

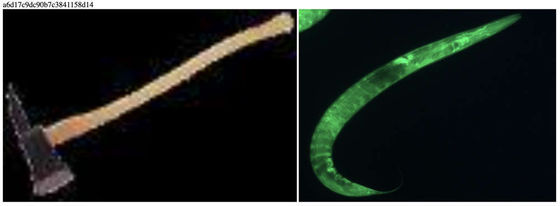

However, Dwyer also found cases where hash collisions occurred between unrelated images. The images of a nail (left) and a ski (right) below do look similar at first glance, but a human clearly sees them as different images. Nevertheless, NeuralHash gave them the same hash value.

A collision also occurred between two images with different colors and shapes: the image on the left and a nematode on the right.

According to Dwyer, these two collisions were found among 2 trillion image pairs. Apple, for its part, says that the probability of a hash collision is less than 1 in 1 trillion.
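The arithmetic behind this comparison is short. Two false collisions in 2 trillion pairs is an observed rate of 1 in 1 trillion, the same order of magnitude as Apple's figure, although the two numbers are not necessarily measured the same way. The helper below also shows how quickly pair counts grow, since n images form n(n - 1)/2 unordered pairs; the image counts used are hypothetical, and whether Dwyer counted ordered or unordered pairs is not stated here.

```python
def empirical_rate(collisions, pairs):
    """Observed false-collision rate: collisions found divided by pairs compared."""
    return collisions / pairs


def unordered_pairs(n):
    """Number of distinct unordered pairs among n images: n * (n - 1) / 2."""
    return n * (n - 1) // 2


print(empirical_rate(2, 2_000_000_000_000))  # → 1e-12, i.e. 1 in 1 trillion
print(unordered_pairs(2_000_000))            # → 1999999000000, about 2 trillion
```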

Commenting on the results, Dwyer said: 'NeuralHash works better than I expected, and the results of my ImageNet experiment are probably similar to the tests Apple conducted. But with more than 1.5 billion users, problems can occur no matter how low the false-positive rate is, so further research will probably be needed to clarify the risk of innocent people being reported.'
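Dwyer's point about scale can be made concrete. If each scanned image independently has a small false-match probability p, then across n images the expected number of false matches is n * p, and the chance of at least one is 1 - (1 - p)^n. The sketch below assumes a hypothetical p of 1 in 1 trillion and a hypothetical 1,000 photos for each of 1.5 billion users; real per-image rates, photo counts, and any additional safeguards in Apple's process are not modeled.

```python
import math


def expected_false_matches(num_images, p):
    """Expected number of false matches when each image has probability p."""
    return num_images * p


def prob_at_least_one(num_images, p):
    """1 - (1 - p)^n, computed with log1p/expm1 so tiny p does not round to zero."""
    return -math.expm1(num_images * math.log1p(-p))


users = 1_500_000_000          # from the article: more than 1.5 billion users
photos_per_user = 1_000        # hypothetical assumption
p = 1e-12                      # hypothetical per-image false-match probability

n = users * photos_per_user
print(expected_false_matches(n, p))  # about 1.5 expected false matches
print(prob_at_least_one(n, p))       # about 0.78
```

Even at a one-in-a-trillion rate, a large enough number of scanned images makes at least one false match more likely than not.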

On Apple's CSAM detection effort as a whole, Dwyer said: 'The biggest problem seems to be the CSAM database itself. Because the original images cannot be inspected, there is no way to tell whether a foreign government or another party has inserted hashes of non-CSAM images into the database in order to crack down on political opponents or human rights defenders. Apple plans to address this by requiring the consent of two countries before files are added to the database, but the process is opaque, so it is likely to be abused.' He added that even if the chance of false positives is low, challenges remain.


in Software, Posted by log1l_ks