A major flaw has been found in Apple's 'child pornography detection system for iPhone', which is already facing fierce opposition around the world

In August 2021, Apple announced a mechanism that scans images stored on iOS devices and in iCloud and uses hashes to check for 'data related to the sexual exploitation of children (CSAM)'. The mechanism is to be incorporated into iOS 15, due in the second half of 2021. Security researchers have now pointed out that this system has a major flaw.

GitHub - AsuharietYgvar/AppleNeuralHash2ONNX: Convert Apple NeuralHash model for CSAM Detection to ONNX.

https://github.com/AsuharietYgvar/AppleNeuralHash2ONNX

Apple says collision in child-abuse hashing system is not a concern - The Verge

https://www.theverge.com/2021/8/18/22630439/apple-csam-neuralhash-collision-vulnerability-flaw-cryptography

Apple didn't engage with the infosec world on CSAM scanning – so get used to a slow drip feed of revelations • The Register

https://www.theregister.com/2021/08/18/apples_csam_hashing/

On August 5, 2021, Apple published a page titled 'Expanded Protections for Children' and announced new safety features intended to limit the spread of CSAM. One of these features detects CSAM by computing a hash of each photo with the machine learning algorithm NeuralHash, matching that hash against a database of known CSAM hashes, and reporting matches to the relevant authorities.
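The matching step described above can be illustrated with a minimal sketch. It assumes the hashes have already been computed and simply looks them up in a set. The names `KNOWN_HASHES`, `find_matches`, and `photos`, and all hash values, are made-up placeholders for illustration. This is not Apple's actual protocol, whose details are not covered here.

```python
# Hypothetical database of known hashes; these hex strings are made-up placeholders.
KNOWN_HASHES = frozenset({"aaaa0000ffff", "1234abcd5678"})


def find_matches(photo_hashes, known_hashes=KNOWN_HASHES):
    """Return the names of photos whose hash appears in the known-hash set."""
    return sorted(name for name, h in photo_hashes.items() if h in known_hashes)


# Hashes assumed to have been computed on the device by some hash function.
photos = {
    "beach.jpg": "0f0f0f0f0f0f",
    "flagged.jpg": "1234abcd5678",
}

print(find_matches(photos))
```

Because the lookup compares only hash values, any image that produces a hash present in the database is treated as a match, whatever the image actually shows.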

Apple announces it will scan iPhone photos and messages to prevent the sexual exploitation of children, drawing protests from the Electronic Frontier Foundation and others that 'it will compromise user security and privacy' - GIGAZINE

However, Apple's CSAM detection, which checks data on the device itself and not just in the cloud, without the user's permission, has been strongly criticized as an invasion of privacy.

Critics warn that Apple's 'measures to scan iPhone photos and messages' will lead to increased surveillance and censorship around the world - GIGAZINE

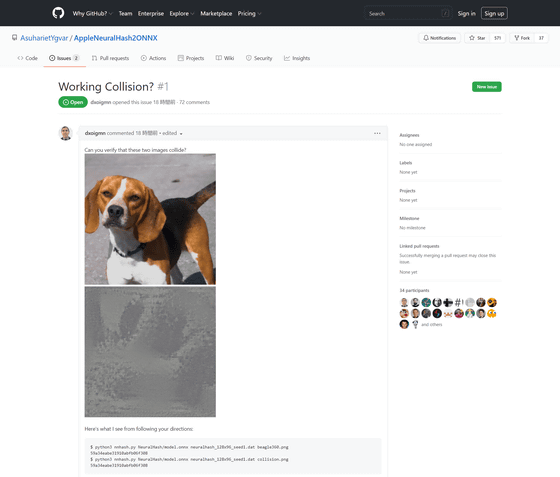

On August 17, 2021, GitHub user AsuharietYgvar published on GitHub a tool that converts the NeuralHash model used for CSAM detection, extracted from iOS by reverse engineering, to Open Neural Network Exchange (ONNX), an open format for machine learning models that can be used from Python. Note that this NeuralHash is not the latest version but a slightly older one, implemented in iOS 14.3, which was released on December 14, 2020.

Then, on August 18, 2021, Cory Cornelius, a researcher at Intel Labs, Intel's research organization, reported a hash collision between two completely different images: a photo of a dog and a gray image.

Working Collision? · Issue #1 · AsuharietYgvar/AppleNeuralHash2ONNX · GitHub

https://github.com/AsuharietYgvar/AppleNeuralHash2ONNX/issues/1

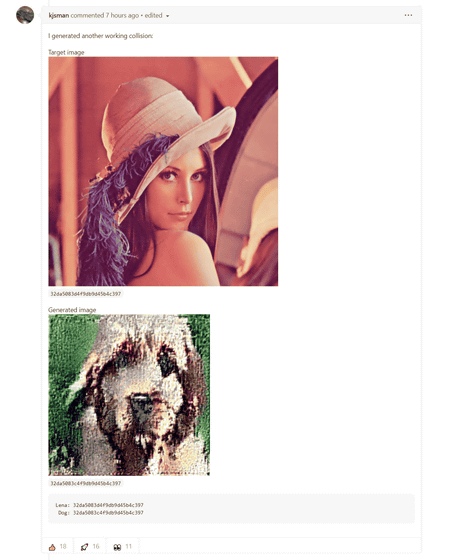

Other examples of hash collisions have also been reported in the discussion on GitHub.

Apple has not ruled out the possibility of hash collisions, but says they are not a problem because 'the probability of a hash collision is one in a trillion.' However, Matthew Green, a computer scientist at Johns Hopkins University, said, 'Frankly, Apple has only claimed that accidental false positives from the hash function are very rare. It has said nothing about false positives that are created deliberately.'

'It has long been clear that such hash collisions occur in NeuralHash,' Green said, claiming that Apple has not released the NeuralHash source in order to hide this risk. He also pointed out that the collisions could be abused: an image that looks harmless could be crafted so that it is recognized as child sexual abuse material.

It was always fairly obvious that in a perceptual hash function like Apple's, there were going to be “collisions” — very different images that produced the same hash. This raised the possibility of “poisoned” images that looked harmless, but triggered as child sexual abuse media.

— Matthew Green (@matthew_d_green) August 18, 2021
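To illustrate why perceptual hashes collide, here is a minimal sketch using a toy 'average hash'. This is a much simpler technique than NeuralHash and is used here only as a stand-in. The function `average_hash` and the two tiny 2x2 grayscale 'images' are hypothetical examples, not data from the reported collision.

```python
def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


# Two made-up 2x2 grayscale images with clearly different pixel values.
image_a = [[10, 200], [220, 30]]   # high contrast
image_b = [[90, 140], [130, 100]]  # low contrast, mostly gray

print(average_hash(image_a) == average_hash(image_b))  # True: same hash, different images
```

The hash keeps only a coarse summary of each image (which pixels are brighter than the mean), so many different images map to the same value. Someone who knows the hash function can exploit this by deliberately constructing an image that lands on a chosen hash.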

in Software, Posted by log1i_yk