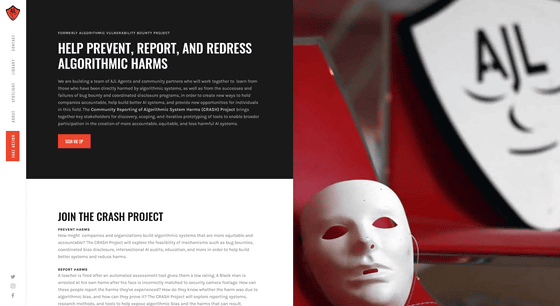

Researchers are proposing a 'bias bounty program' that would pay people to find the bias contained in algorithms.

Algorithmic Vulnerability Bounty Project (AVBP)

https://www.ajl.org/avbp

Me & @camillefrancois's @mozillafestival discussion of @AJLUnited's CRASH project got a news feature!

— Deb Raji (@rajiinio) March 15, 2021

'If we had a harms discovery process that, like in security, was ... robust, structured & formalized, that would help the community gain credibility' https://t.co/ADbdDgca0v

Using bug bounties to spot bias in AI algorithms

https://www.computing.co.uk/news/4028677/bug-bounties-bias-algorithms

The idea of paying a bounty to people who find bias in an algorithm is modeled on the bug bounty programs used for internet services and software.

Researcher Deborah Raji and others are promoting this project. In 2019, Raji published a study finding that Amazon's facial recognition API 'Amazon Rekognition' exhibits racial bias.

Amazon and MIT researchers clash over 'discrimination in Amazon's facial recognition software' - GIGAZINE

Major companies such as Microsoft and Facebook are aware of the bias in their algorithms and are working to address it, but no effective solution has emerged yet.

Raji's efforts have shown that it is difficult to define 'harm caused by algorithms'. Other issues also remain open, such as the lack of an established methodology for detecting bias in an algorithm.

Moreover, even if a methodology is established, companies using the algorithm are unlikely to benefit financially from correcting the bias, so it is speculated that reports of bias would simply be rebuffed, as happened in the case of 'Amazon Rekognition'.

For this reason, Raji and her colleagues believe that launching a 'bias bounty program' will require government regulation and pressure from public opinion.

in Note, Posted by logc_nt