Should the development of unethical artificial intelligence (AI) be stopped?

Because artificial intelligence (AI) inherits human prejudice and discrimination, concerns about its dangers from an ethical perspective have grown in recent years. Historically, computer science research has seen little ethical debate, but that is now changing dramatically. Science writer Matthew Hutson summarizes the transformation under way in the scientific community.

Who Should Stop Unethical AI? | The New Yorker

https://www.newyorker.com/tech/annals-of-technology/who-should-stop-unethical-ai

According to the article, peer-reviewed papers in computer science are published mainly at conferences rather than in journals, which sets the field apart from other academic disciplines.

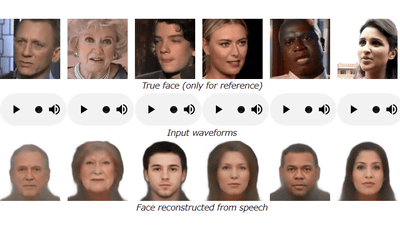

At a conference in 2019, the author saw a poster for 'Speech2Face,' an AI that generates an image of a speaker's face from their voice. The faces predicted by Speech2Face match the speaker in characteristics such as gender, age, and ethnicity, and the author's impression was that 'it was not a particularly impressive technology.' Speech2Face nevertheless went on to cause a great deal of controversy.

AI to generate a speaker's face image from 'voice' will be developed --GIGAZINE

Sociologist Alex Hanna said on Twitter that Speech2Face is a 'transphobic' idea, pointing out the problem with linking biological traits to identity. Other users, however, suggested that the technology could be useful in criminal investigations.

Such 'AI and ethics' issues have been attracting attention in recent years, and computer science papers are said to be confronting ethical questions more and more often. 'We are on the verge of the Manhattan Project,' said Michael Kearns, co-author of 'The Ethical Algorithm,' about the situation surrounding AI.

In discussing AI and ethics, the article also looks at how ethics committees, known as institutional review boards (IRBs), are involved in the process of publishing computer science papers. Much research involving humans, in fields such as biology, psychology, and anthropology, undergoes IRB scrutiny as it proceeds. In computer science, however, it has been customary to assume that research does not involve human subjects, so IRB review has rarely been applied. This is cited as one reason AI research lacks an ethical perspective.

In addition, computer science research is not purely academic: it presupposes that companies and organizations will use the technology, and the impact of AI is wide-reaching. Yet the US Department of Health and Human Services instructs IRBs that 'the long-term impact of research should not be evaluated,' and this too is part of the problem.

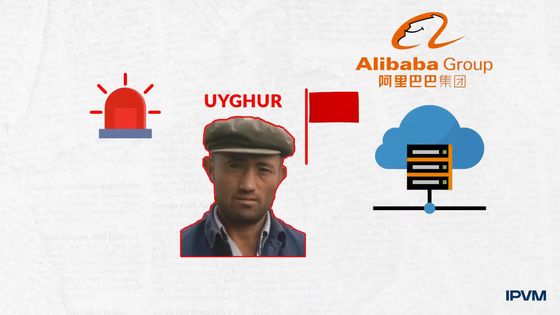

For example, a face recognition cloud service that identifies Uighurs has reportedly been offered in China. Face recognition AI is not a problem in itself, but the technology is being used for ethnic persecution.

It was pointed out that Alibaba provided 'a face recognition cloud service that identifies Uighurs' --GIGAZINE

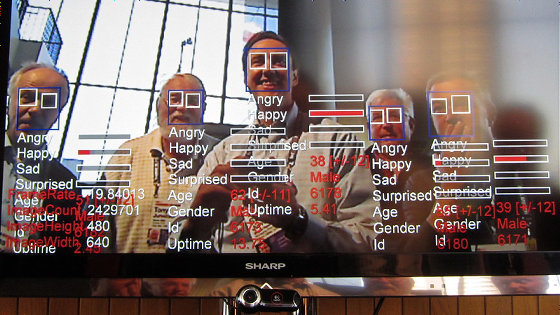

In addition, after police adopted face recognition technology in criminal investigations, false arrests became a problem, particularly false arrests of Black people. This is another example of a technology that raised no concerns when it was developed but ended up promoting discrimination in practice.

An innocent man was mistakenly arrested because of face recognition technology --GIGAZINE

For this reason, the academic research process needs to be improved from an ethical point of view. At the same time, it has been pointed out that reforming the academic system alone cannot prevent the harmful social effects of the technology.

Some researchers, meanwhile, believe that IRB involvement would have a direct impact on AI research. For example, once the ethical aspects of research are taken into account, an algorithm trained on a public database of photographs may require the consent of the people pictured before the research is conducted. Many researchers assume that 'human subjects are not involved in the research,' but if human involvement is recognized, the research used to develop the technology will have to follow a very different process from the one it has followed so far.

So far, AI used by private-sector companies has rarely been audited, because regulators' understanding of the technology has not caught up. As a result, most AI research relies on self-regulation, and some conferences are trying to create new norms. The Association for Computational Linguistics asks reviewers to consider the ethical implications of research, and NeurIPS, a leading international conference in the field of AI, asks that submitted papers discuss 'the wide potential impact of research,' both positive and negative.

NeurIPS, the top AI conference, requires authors of papers to describe 'the impact of theory on society' --GIGAZINE

The NeurIPS requirement has become a hot topic among researchers, and researchers working on technologies expected to have military uses have come to abandon that research altogether. On the other hand, Iason Gabriel, who actually reviewed papers, said that the discussions of research impact they contained were good. Many researchers seem relieved to be able to discuss publicly the 'moral crisis' they had been facing privately.

Historically, scientific regulation and self-regulation have been created in the aftermath of tragedy. The National Research Act, which paved the way for the establishment of IRBs, was passed in the wake of the 'Tuskegee Syphilis Experiment,' a clinical study of syphilis in Black subjects, and US government funding was suspended for research that increases the potency of influenza and other viruses. No major tragedy has yet occurred with AI, which is why rules and norms are being developed on an ongoing basis to prevent serious incidents in this rapidly evolving field.

in Science, Posted by darkhorse_log