College students are struggling with monitoring by the 'fraud prevention software' introduced with remote learning

The coronavirus pandemic has brought remote work and remote learning to much of the world, and at the time of writing many students are still being taught at home rather than at school. Some universities have introduced 'fraud prevention software' to monitor students for cheating on tests, but it has been pointed out that students are suffering from false cheating judgments by the software and from privacy problems.

College Students Are Learning Hard Lessons About Anti-Cheating Software — Voice of San Diego

https://voiceofsandiego.org/topics/education/college-students-are-learning-hard-lessons-about-anti-cheating-software/

As remote education was rolled out at a rapid pace, various problems emerged, one of which was how to detect cheating by students taking tests at home. The companies that entered this market are providers of 'fraud prevention software' such as Respondus, Honorlock, ProctorU, and Proctorio.

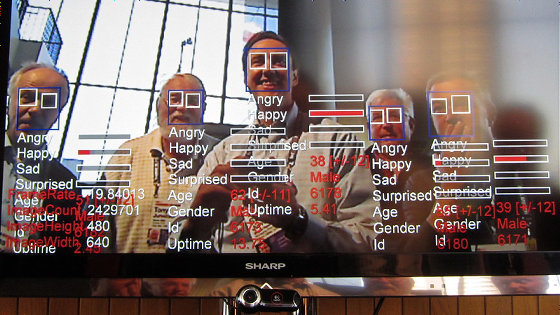

Respondus explains on its website that its monitoring software watches student behavior during an exam, detects suspicious behavior, and flags it so that the professor can review it after the exam. The software recognizes the student's face, collects data such as facial data and mouse activity, and uses 'powerful AI' to recognize 'patterns and abnormalities related to fraud'.

However, the face recognition technology used by Respondus and others raises doubts beyond privacy. In particular, it has often been pointed out that face recognition technology has a racial bias and fails to recognize Black faces at a high rate. In the United States, several cities that questioned these flaws have banned the use of facial recognition systems one after another.

Big cities in the United States ban face recognition systems one after another --GIGAZINE

by Mike MacKenzie

John Davison, senior attorney at the Electronic Privacy Information Center, a privacy advocacy group headquartered in Washington, DC, points out that facial recognition technology has a clearly disproportionate adverse effect on people of color. In addition, AI may mistakenly flag not only people of color but also people with distinctive appearances.

Davison points out that fraud prevention software collects a great deal of data about students, yet students have no option to turn data collection off. 'Many companies make the fairly remarkable claim that they can detect signs of fraud in the data they collect, but because the algorithms are opaque, it is very difficult to assess whether the system is correctly flagging signs of fraud,' he said, questioning the effectiveness of the software.

Despite the difficulty of knowing how accurate the algorithms are, companies selling fraud prevention software have grown during the pandemic. According to the Washington Post, fraud prevention software developers have signed contracts with many universities and are making millions of dollars in profits.

San Diego State University is also one of the universities that has implemented Respondus fraud prevention software. William Scott Molina, a student at San Diego State University, was asked to install software called 'Respondus LockDown Browser' in a business administration lecture that began in the summer of 2020.

According to Molina, Respondus LockDown Browser required him to record the entire desk, the area under the desk, and the entire room with a camera, and also to set up a mirror to show that there was nothing extra on the PC keyboard. Furthermore, if the internet connection is interrupted during the exam, the student is automatically judged to have cheated. For Molina, 31, who lives with his girlfriend and three-year-old daughter, it is not uncommon for something unexpected to break the internet connection, and that alone was very stressful.

Molina had a good record in the remote course, but after the second test in mid-August he received an email from his instructor, Lenny Merrill, warning him about cheating on the grounds that he 'did not show the front and back of the prepared note paper to the camera' and 'was talking during the test.' Molina said he had not known that he had to show both sides of the note paper, or that his habit of reading difficult questions aloud would be judged suspicious, and he sent Merrill an email explaining this.

However, in the third test that followed, Molina was again judged to have cheated because he 'left his seat for about a minute immediately after the start of the test' and 'used a calculator on a problem for which a calculator should not be used,' and he was denied credit. According to Molina, he left his seat shortly after the exam began because his daughter knocked on the door of the room, and he waited for his girlfriend to come and take her. Molina also says that the timing of his calculator use did not match the order of the questions because he did not solve the problems in order from the beginning.

Protesting the denial of credit, Molina appealed to the Center for Student Rights and Responsibilities at San Diego State University over the series of issues. The scheduled meeting was postponed twice because of the coronavirus, but more than a month after filing the complaint, Molina was finally notified by the university that it would take no disciplinary action against him.

Molina then forwarded the notice to a professor of business administration and repeatedly insisted on being given the correct grade. He was only able to earn the credit two months after it was first denied. Molina said that during this time he was under great stress, with the credit issue weighing on him day and night.

Molina is not the only one feeling this stress. Nicholy Solis, a third-year student at San Diego State University, said he was stressed by a requirement to 'explain the calculations to the camera verbally when using a calculator.' 'I'm worried about taking online exams in courses that I find difficult,' Solis said.

Mariana Edelstein also reports that using Respondus anti-fraud software during online exams can hurt exam performance. 'I can't control external factors. I can't control the time my neighbors mow the lawn or the time Amazon deliverers visit my house and ring the bell,' Edelstein said, arguing that taking tests in a remote environment is difficult.

Edelstein is also concerned about the privacy practices of fraud prevention software. During one test, Respondus' anti-fraud software demanded a view under the desk as well, and Edelstein, who was wearing shorts, said his bare legs were filmed by the software for a few seconds. Jason Kelly, research director at the Electronic Frontier Foundation, points out that fraud prevention software other than Respondus also requires a view of the lower body.

In addition, Respondus has a default retention period of 5 years for the videos it records, and the retention period can be extended further at the client's request. 'This is ridiculous to say the least. Teachers and colleges should store data only for as long as it takes to determine whether there has been any suspicious activity, which would be a week or two, or about a month at most.'

In response to this concern, San Diego State University said that the recorded videos are used solely for fraud review and will not be stored permanently. David Smither of Respondus also explained that access to the data collected by the fraud prevention software is limited to a small number of engineers, and that access to certain data triggers a notification to the security and privacy team.

Bruce Schneier, a security expert at Harvard Kennedy School, said universities should be held responsible for partnering with tech companies without scrutinizing them in advance. Lynette Attai, an expert on educational data privacy regulations, argued that companies and universities should make the data they collect visible to students. 'Students should be well informed about university and corporate decisions so that they can understand privacy and security practices,' said Attai.

in Software, Web Service, Security, Posted by log1h_ik