How to 'scrape' websites to collect data automatically, and what to watch out for

Research and analysis usually begin with collecting data, and it is worth reducing the time spent on such routine work. Researchers at Oxford University's

How we learnt to stop worrying and love web scraping

https://www.nature.com/articles/d41586-020-02558-0

◆ How does scraping work?

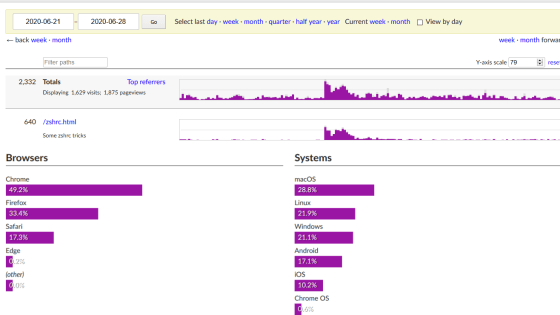

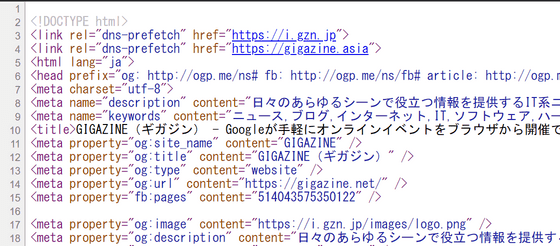

As the browser's 'View Source' and 'Inspect Element' features show, web pages are built from text files written in a language called HTML. Scraping extracts the needed part of the data by parsing this HTML. A common task is to access each URL from 'www.example.com/data/1' to 'www.example.com/data/100' and save the required data. Letting a program collect the data not only saves time but also avoids human error, and it makes it easy to refresh the dataset when newer data is needed.
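As a minimal sketch of that idea, the following uses only Python's standard library. The URL pattern comes from the example above (the `https://` scheme is an assumption). The idea that the wanted value sits in a `<td class="value">` cell is purely illustrative, and the names `ValueCellParser` and `extract_values` are hypothetical.

```python
from html.parser import HTMLParser


class ValueCellParser(HTMLParser):
    """Collects the text of every <td class="value"> cell (hypothetical markup)."""

    def __init__(self):
        super().__init__()
        self.values = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag.
        if tag == "td" and ("class", "value") in attrs:
            self._in_cell = True
            self.values.append("")

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.values[-1] += data


def extract_values(html):
    """Return the stripped text of each matching cell in the HTML string."""
    parser = ValueCellParser()
    parser.feed(html)
    parser.close()
    return [value.strip() for value in parser.values]


# The 100 page URLs from the example above.
urls = [f"https://www.example.com/data/{n}" for n in range(1, 101)]

# A stand-in for one downloaded page.
sample = '<table><tr><td class="name">A</td><td class="value"> 42 </td></tr></table>'
print(extract_values(sample))
```

In a real run, each URL in `urls` would be downloaded and its HTML passed to `extract_values`. The sample string stands in for one such page.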

◆ How to start scraping?

There are various methods for scraping. For example, a browser extension called '

You can also tailor scraping more closely to your needs by writing your own program. In principle any programming language can be used, but the Nature article introduces the combination of Python with the Requests and BeautifulSoup libraries. A scraping job may also run for several days, in which case you need to make sure the PC does not go to sleep. If you have your own server, consider running the scraper there.
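A rough sketch of what the Python, Requests and BeautifulSoup combination can look like is shown below. It is not the code from the Nature article. The helper names `parse_title` and `fetch_title` are hypothetical, and extracting the page title is just a simple stand-in for whatever data is actually needed.

```python
import requests
from bs4 import BeautifulSoup


def parse_title(html):
    """Return the text of the page's <title> element, or None if absent."""
    soup = BeautifulSoup(html, "html.parser")
    if soup.title is None or soup.title.string is None:
        return None
    return soup.title.string.strip()


def fetch_title(url):
    """Download one page with Requests and extract its title."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # stop on 4xx/5xx instead of parsing an error page
    return parse_title(response.text)


# Parsing works on any HTML string, so it can be tried without network access.
print(parse_title("<html><head><title> Example </title></head></html>"))
```

Keeping the download step and the parsing step in separate functions makes it possible to test the parsing against saved pages before running a long job.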

◆ What is the method other than scraping?

If the website offers a way to download all of its data, using that is the easiest option. For example, 'ClinicalTrials.gov', a site that collects clinical research data, lets you download its data in bulk. Also, if the site provides a public API, you can use it to obtain data without parsing HTML.
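To illustrate why an API is simpler than parsing HTML, here is a small sketch using only the standard library. The endpoint URL and the JSON layout (a `results` list of objects with `id` and `title`) are assumptions made up for this example. A real API documents its own URL, parameters and response format.

```python
import json
from urllib.request import urlopen

# Hypothetical endpoint, used only for illustration.
API_URL = "https://www.example.com/api/records?page=1"


def parse_records(body):
    """Turn a JSON response body into a list of (id, title) pairs."""
    payload = json.loads(body)
    return [(item["id"], item["title"]) for item in payload["results"]]


def fetch_records(url=API_URL):
    """Request one page of results from the API and decode it."""
    with urlopen(url, timeout=10) as response:
        return parse_records(response.read().decode("utf-8"))


# A stand-in for a response body in the assumed format.
sample_body = '{"results": [{"id": 1, "title": "First record"}]}'
print(parse_records(sample_body))
```

Because the response is already structured, no HTML parsing step is needed. The fields are read directly from the decoded JSON.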

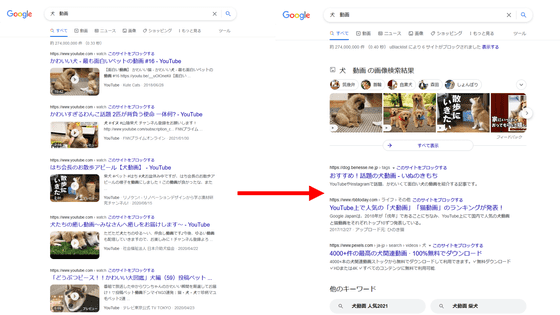

◆ About websites that are easy to scrape and websites that are difficult to scrape

On some websites the data is not in the HTML itself, and scraping requires more advanced techniques. Scraping may also be blocked by CAPTCHAs or DoS protection services. Some sites state restrictions such as 'only specific pages may be scraped' in robots.txt, so it is worth checking that file as well.
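One way to check robots.txt from a program is Python's standard `urllib.robotparser` module, sketched below. The rules in `robots_txt`, the user agent name `my-research-bot` and the helper `is_allowed` are all made up for illustration. For a real site you would load the file with `set_url(...)` followed by `read()` instead of parsing an inline string.

```python
from urllib.robotparser import RobotFileParser

# Example rules (hypothetical): everything is allowed except /private/.
robots_txt = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())


def is_allowed(url, user_agent="my-research-bot"):
    """Return True if the parsed robots.txt rules permit fetching the URL."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("https://www.example.com/data/1"))
print(is_allowed("https://www.example.com/private/report"))
```

Calling `is_allowed` before each request lets the scraper skip pages that the site has asked crawlers not to visit.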

By Brogue Lessor Jig , CC 4.0

◆ Scraping manners

Every time a scraping program requests data from a website, the server has to do work to prepare the response. A scraping program can send requests far faster than a human browsing with a browser, so there is a risk of unintentionally attacking the website. You can avoid this problem by pausing for a few seconds between requests.
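The pause between requests can be sketched as follows. The function name `fetch_politely` and its parameters are hypothetical. The `fetch` argument is whatever function actually downloads a page, and the two-second default is only an example of "a few seconds".

```python
import time


def fetch_politely(urls, fetch, delay_seconds=2.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # give the server a break before the next request
        results.append(fetch(url))
    return results


# Demonstration with a stand-in fetch function and no real waiting.
print(fetch_politely(["page-1", "page-2"], fetch=str.upper, delay_seconds=0))
```

The `sleep` parameter exists only so the delay can be replaced in tests. In normal use the default `time.sleep` is what enforces the pause.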

◆ Restrictions on data usage

You need to check the license and copyright of the extracted data. However, even if the data itself cannot legally be shared, sharing the scraping program is not a problem at all.

In addition, writing a website scraper is said to be a suitable project for beginners learning to program: it is highly practical, and although it has a certain degree of difficulty, documentation is abundant.

in Software, Posted by log1d_ts