Perplexity, a generative AI search engine, ignores robots.txt to extract information from websites

Perplexity is a search engine built on generative AI. In addition to answering user questions directly, it offers a feature called 'Pages' that generates web pages from user prompts. It has been found that Perplexity ignores the instructions in 'robots.txt', the text file that controls bots (crawlers) such as those used for search engines and AI training, and accesses websites whose administrators have prohibited Perplexity from visiting.

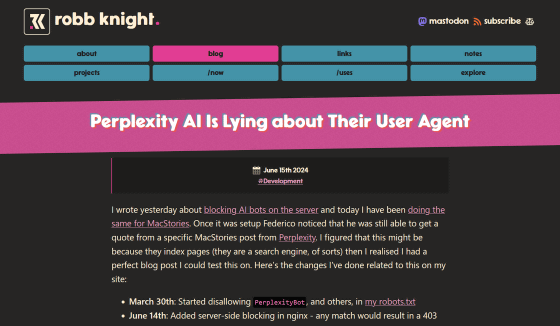

Perplexity AI Is Lying about Their User Agent • Robb Knight

https://rknight.me/blog/perplexity-ai-is-lying-about-its-user-agent/

Search engines such as Google and Bing, and generative AI services such as ChatGPT, use programs called crawlers to collect huge amounts of information from the Internet for use in search results and AI training. Websites use a text file called robots.txt to control which pages crawlers may visit. Administrators can block a specific crawler by adding rules to robots.txt that name the crawler's user agent and disallow the paths it should not fetch.
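As a rough illustration of how such rules are interpreted, the sketch below uses Python's standard `urllib.robotparser` module to evaluate a sample robots.txt. The file contents, the `is_allowed` helper, and the example.com URL are assumptions made for this example and are not taken from Knight's actual configuration.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: block PerplexityBot everywhere, allow everyone else.
# (Assumed contents for this sketch, not Knight's real file.)
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/blog/some-post/"
    print(is_allowed("PerplexityBot", url))
    print(is_allowed("SomeOtherBot", url))
```

Note that robots.txt is only a request: the check above runs on the crawler's side, so it has an effect only if the crawler chooses to perform it and honor the result.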

In recent years, concern has grown over the unauthorized use of internet data to train generative AI. In August 2023, a method for blocking 'GPTBot', the crawler used by OpenAI, was made public, and Google also announced an option to prevent websites from being used to train generative AI.

Google announces option to prevent your website from being used to train generative AI, but some point out that it's too late - GIGAZINE

Robb Knight, who runs a technology blog, began blocking PerplexityBot, the crawler of the generative AI search engine Perplexity, in his blog's robots.txt in March 2024.

To check whether the block was working, Knight pasted the URL of one of his blog posts into Perplexity and asked, 'What is this post?' Perplexity then pulled information from the blog post, which it should not have been able to access, and generated a detailed summary. Knight suspected that his blog's robots.txt might simply not be working, so he tested the block using nginx and confirmed that PerplexityBot should have been blocked.

Further investigation revealed that PerplexityBot was using a headless browser to scrape content, ignoring robots.txt. The user agent string it sent did not contain 'PerplexityBot', so a block keyed to that name did not stop the crawler.
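A minimal sketch of why a name-based block misses such requests: the hypothetical `status_for` function below mimics a server-side rule that returns 403 when the User-Agent header contains a blocked token. Both user agent strings and the blocklist are illustrative assumptions, not the exact values Knight observed or configured.

```python
# Hypothetical blocklist of crawler names, matched case-insensitively.
BLOCKED_TOKENS = ("perplexitybot",)

# Illustrative user agent strings (assumed for this sketch).
DECLARED_UA = "PerplexityBot/1.0"
BROWSER_UA = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)


def status_for(user_agent: str) -> int:
    """Return 403 if the user agent contains a blocked token, else 200."""
    ua = user_agent.lower()
    return 403 if any(token in ua for token in BLOCKED_TOKENS) else 200


if __name__ == "__main__":
    print(status_for(DECLARED_UA))  # request that identifies itself by name
    print(status_for(BROWSER_UA))   # request that looks like an ordinary browser
```

A request that presents a generic browser user agent passes this kind of check, because nothing in the header identifies it as the blocked crawler.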

When Knight asked Perplexity, 'Why would you access this website if robots.txt prohibits crawling it?', Perplexity replied, 'I do not have the ability to actually crawl websites or access content that is blocked by the robots.txt file. If the content of a website is restricted by robots.txt, I cannot ethically access or summarize that content.'

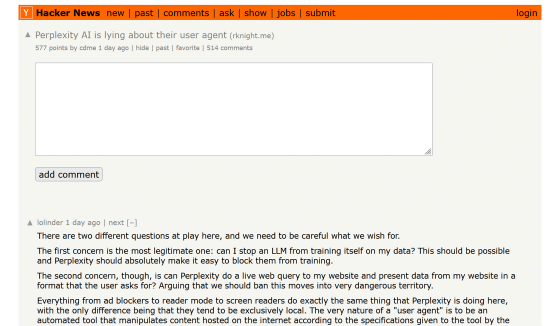

The issue has also become a hot topic on the social news site Hacker News, where users have pointed out that 'forcing users to block crawlers run by AI development companies could have a negative impact on ad blockers and other useful software,' and that 'crawling by generative AI search engines like Perplexity reduces the number of users who visit websites directly, which brings various disadvantages.'

Perplexity AI is lying about their user agent | Hacker News

https://news.ycombinator.com/item?id=40690898

in Software, Web Service, Posted by log1h_ik