"DeepNude," an app that turns a woman's photo into a nude with one click, has appeared


This Horrifying App Undresses a Photo of Any Woman With a Single Click - VICE

https://www.vice.com/en_us/article/kzm59x/deepnude-app-creates-fake-nudes-of-any-woman

DeepNude takes a photograph of a clothed woman, removes only the clothing, and converts it into a nude image with the breasts and vulva exposed. It works only on photos of women. Motherboard, the IT news site that actually tested DeepNude, reports: "The conversion works even on clothed women, but photos that already show a lot of skin produce better results."

Fake porn has already been created with DeepFake, a technology that uses AI to seamlessly replace a person's face in a video with someone else's. However, the newly released DeepNude is said to be "easier to use and faster than DeepFake," which raises the possibility of even more malicious fake pornography.

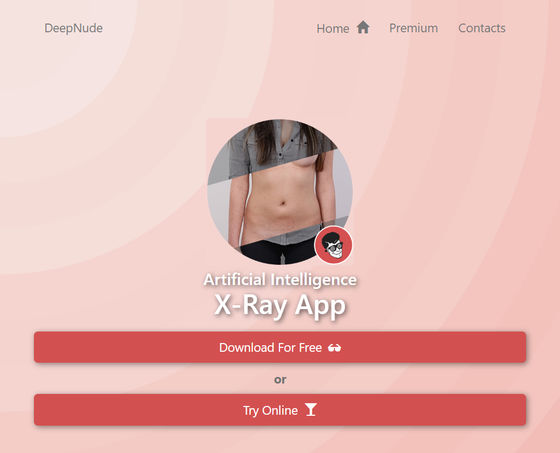

DeepNude is offered both as a downloadable app and as a demo that can be tried online. At the time of writing, the download page displayed the message "Under maintenance, please check back in 12 hours," and the app could not be downloaded.

AI Powered X-Ray App | DeepNude

https://www.deepnude.com/

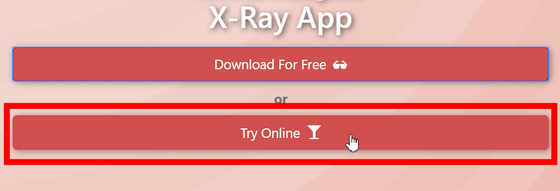

So we clicked "Try Online" to try the demo version.

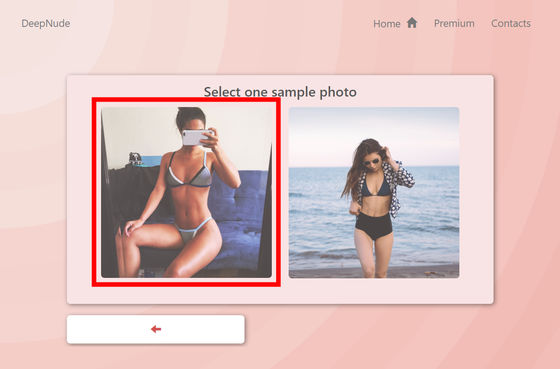

Two sample images of women in swimsuits are displayed; choose one of them.

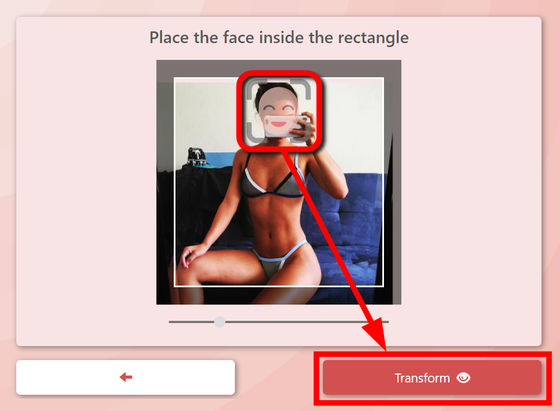

Next, move the face icon in the red frame so that it lines up with the woman's face in the photo, and click "Transform".

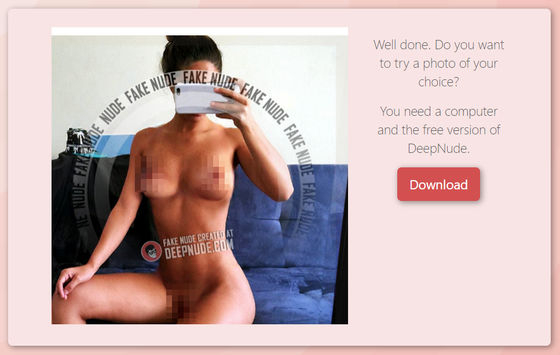

The swimsuit then vanished completely from the woman's photo.

Katelyn Bowden, CEO of the anti-revenge-porn organization Badass, said: "This is absolutely horrifying. Now anyone can become a victim of revenge porn, even if they have never taken a nude photo. This kind of technology should not be available to the public."

Danielle Citron, a professor at the University of Maryland School of Law, says, "Technologies like DeepFake and DeepNude are an invasion of sexual privacy." She added, "It isn't your real body that is shown, but other people will think they are seeing you naked." As one DeepFake victim told her, it felt as if thousands of people had seen her naked, and as if her body no longer belonged to her.

DeepNude was released on June 23, 2019, and it seems that a large

As a test, Motherboard ran photos of more than ten celebrities, both men and women, through DeepNude. Results varied from photo to photo, but images of women in bikinis facing the camera were converted most convincingly. According to Motherboard, DeepNude's algorithm "accurately fills in the position of the nipples and their shadows based on the angle of the breasts under the clothing." Motherboard also published photos of Taylor Swift, Tyra Banks, Natalie Portman, Gal Gadot, Kim Kardashian, and others as examples of successful conversions.

However, it did not seem to work well on low-resolution photos or cartoon characters.

Motherboard managed to contact the developer of DeepNude, a man who calls himself "Alberto." Alberto learned about technologies such as

Alberto says, "The network is made up of multiple parts, each with a different task. One recognizes the clothes, one erases them, one estimates the position of the nipples and so on once the clothes are removed, and one renders the nude image." This multi-stage processing makes each conversion slow, but Alberto plans to speed it up eventually.

According to Motherboard, producing pornographic images with DeepFake takes several days, and even a skilled image editor needs several minutes to make a nude collage in Photoshop. By comparison, Motherboard points out, DeepNude is strikingly easy to use.

by João Silas

"We are going to have to get better at detecting things like DeepFake, and we are going to have to better safeguard technological advances so that they are not turned to unintended uses," said Hany Farid, a professor of computer science at the University of California, Berkeley. "Academics and researchers need to think more critically about how to prevent their work from being used in harmful ways."

・2019/06/28 14:42 Update

DeepNude's developer, Alberto, tweeted, "It seems the world wasn't ready for DeepNude yet," and the demo version that had been available in the browser became inaccessible.

— deepnudeapp (@deepnudeapp) June 27, 2019


in Software, Web Application, Posted by logu_ii