The “application screening algorithm”, an ancestor of AI, also discriminated by race and sex

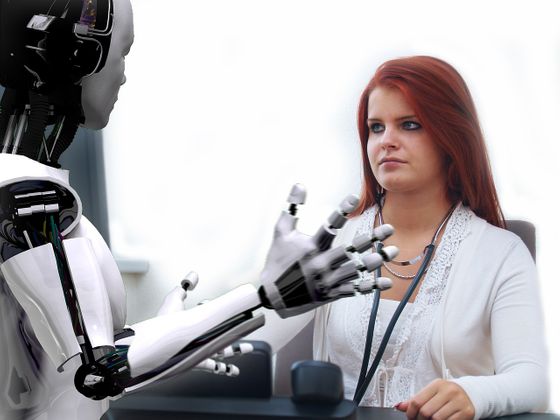

Artificial intelligence (AI) is expected to play an active role across many industries and is often described as a next-generation technology. However, because AI ultimately “learns from humans”, it has been pointed out that it may also inherit the “prejudice and discrimination” that humans hold.

Untold History of AI: Algorithmic Bias Was Born in the 1980s - IEEE Spectrum

https://spectrum.ieee.org/tech-talk/tech-history/dawn-of-electronics/untold-history-of-ai-the-birth-of-machine-bias

Around 1970, St. George's Hospital Medical School in the UK ran its admissions in two stages: applicants were first screened on their application documents and then interviewed. About 2,500 students applied each year, and roughly three-quarters of them were rejected at the document screening stage, so that stage carried great weight.

Dr. Geoffrey Franglen, the school's vice-dean, who served as an admissions assessor, felt that document screening was long and tedious and that it could be automated to make it more efficient. He studied how human assessors screened applications and created an “algorithm that mimics the criteria of human assessors”. Dr. Franglen believed that letting the algorithm act as the sole assessor would not only “make document screening more efficient” but also “eliminate inconsistencies in evaluation between assessors”.

The algorithm was completed in 1979, and in that year's admissions the applications were screened twice, once by the algorithm and once by human assessors. Dr. Franglen's algorithm reportedly agreed with the human assessors' decisions 90% to 95% of the time. Based on this result, St. George's appears to have left document screening to the algorithm until 1982.
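
The 90% to 95% figure is an agreement rate: the share of applicants for whom the algorithm and the human assessors reached the same accept/reject decision. The minimal sketch below computes such a rate; the function name `agreement_rate` and the ten toy decisions are hypothetical and are not data from the St. George's trial.

```python
def agreement_rate(algorithm_decisions, human_decisions):
    """Return the fraction of applicants on which both screeners made the same call."""
    if len(algorithm_decisions) != len(human_decisions):
        raise ValueError("decision lists must be the same length")
    if not algorithm_decisions:
        raise ValueError("no decisions to compare")
    # Count applicants where the algorithm and the humans agree.
    matches = sum(a == h for a, h in zip(algorithm_decisions, human_decisions))
    return matches / len(algorithm_decisions)


# Hypothetical toy data: True = invite to interview, False = reject.
algo = [True, False, False, True, False, False, False, True, False, False]
human = [True, False, False, True, False, True, False, True, False, False]
print(f"{agreement_rate(algo, human):.0%}")  # 90%
```

A high agreement rate only shows that the program reproduces the assessors' decisions, including any bias those decisions already contain.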

However, a few years after algorithmic screening began, some staff members noticed that the racial makeup of successful applicants was skewed. After receiving their report, the UK Commission for Racial Equality (CRE) reviewed the program and found that it “classified applicants as ‘white’ or ‘non-white’ based on their names and places of birth, and deducted points from non-white applicants.” Candidates with non-white names automatically lost 15 points, and female candidates lost 3 points. Because of this bias, as many as 60 applicants a year may have failed the document screening.
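
The mechanism the CRE described amounts to a fixed deduction applied according to a group label. The sketch below shows how four applicants with identical qualifications land on opposite sides of a cutoff. Only the 15-point and 3-point deductions come from the report; the `Applicant` type, the base score of 80, the cutoff of 70, and the scoring function are hypothetical and are not a reconstruction of the actual St. George's program.

```python
from dataclasses import dataclass

# Deductions as reported by the CRE review; everything else is hypothetical.
NON_WHITE_PENALTY = 15
FEMALE_PENALTY = 3
CUTOFF = 70  # hypothetical threshold for an interview invitation


@dataclass
class Applicant:
    base_score: int             # hypothetical score from academic and other criteria
    classified_non_white: bool  # label the program inferred from name and birthplace
    female: bool


def screening_score(a: Applicant) -> int:
    """Apply the group-based deductions to an applicant's base score."""
    score = a.base_score
    if a.classified_non_white:
        score -= NON_WHITE_PENALTY
    if a.female:
        score -= FEMALE_PENALTY
    return score


# Four applicants with identical qualifications, differing only in group labels.
applicants = {
    "white man": Applicant(80, False, False),
    "white woman": Applicant(80, False, True),
    "non-white man": Applicant(80, True, False),
    "non-white woman": Applicant(80, True, True),
}

for label, a in applicants.items():
    s = screening_score(a)
    print(f"{label}: {s} -> {'interview' if s >= CUTOFF else 'rejected'}")
```

With these assumed numbers, both non-white applicants fall below the cutoff while both white applicants pass, even though every base score is the same.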

According to Dr. Franglen, this bias already existed back when humans were screening the applications. Sexism and racism were in fact widespread at British universities at the time, but because the algorithm could be inspected and shown to be designed to discriminate by sex and race, the problem came to light.

Today's far more advanced AI has the same problem. AI is now used in medicine and criminal justice, but because it learns from existing data, it inherits society's prejudices. In 2016, ProPublica showed that a crime prediction system used in the United States was biased against African Americans.

Amazon and MIT researchers clash over “discrimination in Amazon's facial recognition software” - GIGAZINE

Even as AI bias is being debated, AI is still widely believed to produce unbiased results, as if it were an “invention of rationality”. Responding to this perception, AI critic Kate Crawford says, “Algorithms inherit human biases; they are no more than imitations of humans.”
