Google explains the detailed cause about the fact that Gmail · Google Drive · Google photo is temporarily unavailable

by Charles PH

Access failure occurred on Facebook & Instagram on March 14, 2019 Japan time, and a large-scale system failure occurred on Apple's iCloud , but Google's Gmail for business on March 13 ahead of these And Google Drive had a system failure . Google explains the cause of this system failure.

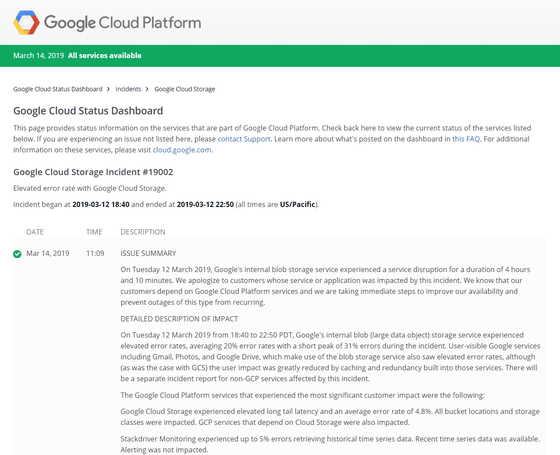

Google Cloud Status Dashboard

https://status.cloud.google.com/incident/storage/19002

The system failure that occurred in Gmail and Google Drive continued for about 4 hours from 18:40 to 22:50 on March 12, 2019 local time. The cause was the interruption of Google's internal Binary Large Objects (BLOB) storage service, which affected multiple Google Cloud Platform (GCP) services. Google states that they are taking immediate action to improve GCP availability and to prevent this type of failure in the future.

In the event of a failure, Google's internal BLOB storage service confirmed an increase in the error rate, which reached an average of 20%, and at peak times it reached an error rate of 31%. Services that use Google's internal blob storage service include things like Gmail and Google Drive, and these services have also seen rising error rates. However, as with Google Cloud Storage (GCS), Google's internal blob storage service is also cache and redundant, so the actual impact on users is from the perspective of the scale of the system failure. "I was able to reduce it."

The most impacted GCP service GCS increased long tail latency, reaching an average error rate of 4.8%. In addition, all buckets and storage classes are affected, and GCP services that depend on GCS are also affected. In addition, Google's " Stackdriver Monitoring " seems to have an error of up to 5% while acquiring time series data. In addition, with Google App Engine 's Blobstore API, it seems that the wait time for fetching BLOB data has increased and the error rate has reached 21%. In addition, the Google App Engine deployment has reached a peak error rate of 90%, and it has been revealed that an error has occurred even in the delivery of static files.

Google App Engine Documentation | App Engine Documentation | Google Cloud

On March 11, 2019, Google SRE was warned that the storage resources for metadata used in internal blob services were significantly increased. On the following day, to reduce resource usage, SRE made a configuration change with the side effect of "overloading important parts of the system to locate the BLOB data". The change is likely to eventually cause cascade failures as the load increases, which is the root cause of Google's system failure.

As soon as a system failure occurs, Google SRE immediately stops jobs that have been changing settings. In order to recover from cascading failures, the level of traffic to the BLOB service has been reduced manually, and it has been adjusted so that the task can be launched without crashing under heavy load.

Google "improves the separation between storage service areas so that failures are less likely to impact the global in order to prevent this type of service interruption. To recover from cascading failures caused by high loads, Improves the ability to provision resources more quickly, and also provides software protection to prevent configuration changes that would otherwise overload the major parts of the system, plus the load shedding behavior of the metadata storage system We will improve it and allow it to drop properly in the event of an overload, "says a precautionary measure to prevent similar problems from happening in the future.

Related Posts:

in Web Service, Posted by logu_ii