Researchers create a model that uses human feedback to fill in the 'blind spots' of artificial intelligence

by Amanda Dalbjörn

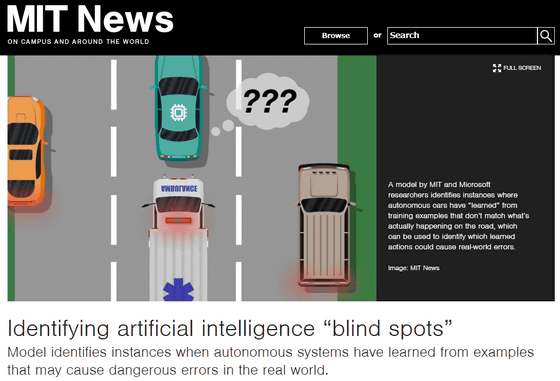

Artificial intelligence used in self-driving systems and similar applications learns a wide range of situations in simulation, but when it meets a situation that rarely occurs in the real world, it may fail to respond properly and make an error. To address this, researchers at the Massachusetts Institute of Technology and Microsoft created a model that closely observes how humans respond and incorporates those observations into the policy for choosing the best action.

Identifying artificial intelligence "blind spots" | MIT News

http://news.mit.edu/2019/artificial-intelligence-blind-spots-0124

Conventional training sometimes includes human feedback as well, but that feedback is used only to update the system's actions; it does not identify the "blind spots" that matter for safe decisions in the real world. In the new model, the AI observes the action a human actually takes, compares it with the action it would have taken under its current training, and builds a decision "policy" from the differences.

Humans can provide this information in two forms: "demonstrations" and "corrections".

For example, if a self-driving system has been trained inadequately and cannot tell a "large white car" from an "ambulance", it may fail to give way even when an ambulance comes up directly behind it. A human driver who encounters this situation recognizes the ambulance by its siren and red lights and pulls over to let it pass. The AI observes this demonstration and registers the mismatch with the action it would have taken itself.

by Benjamin Voros

Similarly, when the car is heading to a given destination, the person in the driver's seat keeps their hands off as long as the route is fine, but takes the wheel and corrects course when the car is about to go down the wrong road. Here too, a mismatch arises between the AI's behavior and the human's.
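The two forms of feedback described above could be turned into labels roughly as follows. This is only a minimal sketch, not the researchers' implementation: the function names, the situation strings, and the representation of a policy as a plain function are all assumptions made for illustration.

```python
from collections import defaultdict

def labels_from_demonstrations(demos, policy):
    """demos: iterable of (situation, human_action) pairs.

    A label is "acceptable" when the AI's policy would have chosen the
    same action as the human, and "unacceptable" when the two differ.
    """
    return [
        (situation, "acceptable" if policy(situation) == human_action else "unacceptable")
        for situation, human_action in demos
    ]

def labels_from_corrections(episodes):
    """episodes: iterable of (situation, human_took_over) pairs.

    A human taking the wheel is treated as a sign that the AI's action
    was unacceptable; no intervention is treated as acceptable.
    """
    return [
        (situation, "unacceptable" if human_took_over else "acceptable")
        for situation, human_took_over in episodes
    ]

def group_by_situation(labelled):
    """Collect every label observed for each situation."""
    grouped = defaultdict(list)
    for situation, label in labelled:
        grouped[situation].append(label)
    return dict(grouped)

# Hypothetical policy that never pulls over.
policy = lambda situation: "keep_lane"

demo_labels = labels_from_demonstrations(
    [("large white vehicle behind", "pull_over"), ("empty road", "keep_lane")],
    policy,
)
correction_labels = labels_from_corrections([("wrong turn ahead", True)])
grouped = group_by_situation(demo_labels + correction_labels)
```

Each situation ends up with a list of possibly conflicting labels, which is the input the aggregation step works on.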

After gathering this feedback, the system holds, for each situation, multiple labels indicating whether its action was acceptable or unacceptable. By learning from these labels which actions it should not take, it fills in what had been blind spots until then. In the ambulance example above, if the system behaved acceptably 9 times out of 10, a simple majority vote would label the situation as safe.

However, because actions that count as "unacceptable" for the AI are extremely rare, learning from this kind of vote alone would mark nearly every situation as "acceptable". Researcher Ramya Ramakrishnan acknowledges this danger, so the team uses the Dawid-Skene algorithm to aggregate the data labeled "acceptable" and "unacceptable" and output, for each situation, a "safe" or "blind spot" label together with a confidence level. As a result, a situation can still be treated as an ambiguous "blind spot" even when 90% of its labels are "acceptable".
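The gap between a majority vote and a confidence-aware aggregation can be illustrated with a small sketch. Note that this is not the Dawid-Skene algorithm itself, which estimates its noise rates from the data; it is a simplified Bayesian stand-in with fixed parameters, and the values `prior_blind`, `p_unacc_if_blind`, and `p_unacc_if_safe` are made-up assumptions chosen only to show the effect.

```python
from collections import Counter

def majority_vote(labels):
    """Label a situation 'safe' when acceptable labels are at least as common."""
    counts = Counter(labels)
    return "safe" if counts["acceptable"] >= counts["unacceptable"] else "blind spot"

def blind_spot_posterior(labels, prior_blind=0.2,
                         p_unacc_if_blind=0.2, p_unacc_if_safe=0.005):
    """Posterior probability that a situation is a blind spot.

    Assumed (hypothetical) noise model: an 'unacceptable' label shows up
    with probability p_unacc_if_blind in a true blind spot and with
    probability p_unacc_if_safe in a truly safe situation.
    """
    counts = Counter(labels)
    n_ok, n_bad = counts["acceptable"], counts["unacceptable"]
    like_blind = (1 - p_unacc_if_blind) ** n_ok * p_unacc_if_blind ** n_bad
    like_safe = (1 - p_unacc_if_safe) ** n_ok * p_unacc_if_safe ** n_bad
    weighted_blind = prior_blind * like_blind
    return weighted_blind / (weighted_blind + (1 - prior_blind) * like_safe)

def aggregate(labels, threshold=0.5):
    """Return one aggregated label plus the confidence in that label."""
    p_blind = blind_spot_posterior(labels)
    if p_blind >= threshold:
        return "blind spot", p_blind
    return "safe", 1 - p_blind

# Nine acceptable labels and one unacceptable label for the same situation.
mostly_ok = ["acceptable"] * 9 + ["unacceptable"]
vote = majority_vote(mostly_ok)
label, confidence = aggregate(mostly_ok)
```

Under these assumed parameters the majority vote calls the 9-out-of-10 situation safe, while the posterior flags it as a blind spot, because a single "unacceptable" label is far more likely to occur in a true blind spot than in a truly safe situation.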

With this learning model, AI is expected to behave more cautiously and intelligently. According to Ramakrishnan, if a situation is predicted to be a "blind spot" with high probability, the system can act more safely by asking a human which action is acceptable.
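The behavior Ramakrishnan describes might look like the following at decision time. This is a sketch under stated assumptions: `choose_action`, the `threshold` of 0.8, and the callables passed in are hypothetical names and values, not part of the published model.

```python
def choose_action(situation, policy, blind_spot_probability, ask_human, threshold=0.8):
    """Defer to a human when the situation is likely a blind spot.

    policy, blind_spot_probability and ask_human are caller-supplied
    functions; the threshold is an arbitrary illustrative value.
    """
    if blind_spot_probability(situation) >= threshold:
        return ask_human(situation)
    return policy(situation)

# Hypothetical stand-ins for the learned components.
policy = lambda s: "keep_lane"
blind_spot_probability = lambda s: 0.9 if s == "large white vehicle behind" else 0.05
ask_human = lambda s: "pull_over"

risky = choose_action("large white vehicle behind", policy, blind_spot_probability, ask_human)
routine = choose_action("empty road", policy, blind_spot_probability, ask_human)
```

In routine situations the system keeps acting on its own policy; only the flagged situation is handed to the human.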

in Note, Posted by logc_nt