HPE releases a prototype of its memory-driven computer "The Machine", with 160 TB of memory in a single system

Hewlett Packard Enterprise (HPE) has released a new prototype of "The Machine", the memory-driven computer it is developing as a "new form" of computing that departs from the conventional configuration of CPU, main memory (DRAM), storage devices, and network devices. HPE says it is the world's largest single-memory computer.

Today @HPE introduced world's largest single-memory computer, capable of holding 160 Terabytes of data. #AtlanticScience pic.twitter.com/ZBPo2xYYuf

- HPE News (@HPE_News) May 16, 2017

HPE Newsroom | HPE Unveils Computer Built for the Era of Big Data

https://news.hpe.com/a-new-computer-built-for-the-big-data-era/

In the largest research and development program in its history, HPE is working on Memory-Driven Computing, an architecture custom-designed for big data. The prototype of the world's largest single-memory computer announced this time is the latest milestone of that effort, "The Machine research project".

The term "memory-driven computing" is rendered in several different ways in Japanese. A conventional computer is built around the CPU, but The Machine is built around a nonvolatile universal memory that combines the roles of DRAM, HDDs, SSDs, and so on, and SoCs access this huge memory pool over photonic (optical) links. Universal memory eliminates input/output bottlenecks and dramatically speeds up system performance. This was also presented at the "HP Tech Power Club 2015 General Assembly Autumn" held in Marunouchi, Tokyo, in October 2015.

The Machine: This will change everything! | HPE Japan

http://h50146.www5.hpe.com/products/servers/techpower/report/soukai_20151022.html

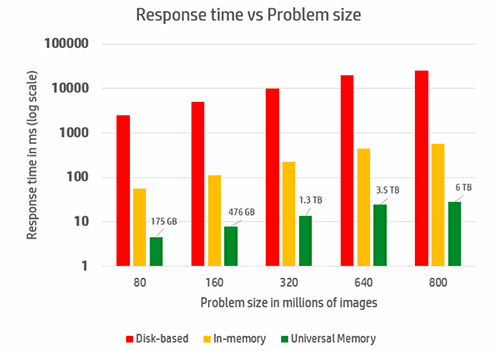

In a simulated image search for one person among the 320 million people in the United States, The Machine with universal memory far outperformed both disk-based clusters and in-memory clusters. In the graph, red indicates the disk-based cluster, yellow the in-memory cluster, and green universal memory. The horizontal axis shows the number of images and the vertical axis shows processing time in milliseconds. Because the graph uses a logarithmic scale, the gap shows that universal memory was dramatically faster than the other two.

At the "Discover 2016 London" event held in November 2016, HPE announced that it had successfully operated the first prototype.

HPE announces successful operation of a prototype of "The Machine", its next-generation machine for memory-driven computing - Publickey

http://www.publickey1.jp/blog/16/hpethe_machine.html

The new prototype builds on the results of "The Machine research project": 160 TB of memory is shared across 40 physical nodes interconnected by a high-performance fabric protocol. It runs an optimized Linux-based operating system on Cavium's ThunderX2 ARM SoCs. Photonic/optical communication links, including the new X1 photonics module, are online and operating.
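As a quick sense of scale, the totals above imply an average amount of memory per node. This is a minimal sketch that assumes, purely for illustration, an even split of the 160 TB across the 40 nodes; the article gives only the totals, not the physical distribution.

```python
# Back-of-the-envelope check: average memory per node, ASSUMING the
# prototype's 160 TB is split evenly across its 40 physical nodes.
# The even split is an illustrative assumption, not a stated spec.
TOTAL_MEMORY_TB = 160
PHYSICAL_NODES = 40

per_node_tb = TOTAL_MEMORY_TB / PHYSICAL_NODES
print(f"{per_node_tb:g} TB per node")  # prints "4 TB per node"
```

The point of the architecture is that this memory is shared over the fabric as one pool, so the per-node figure is only a way to picture the totals, not a limit on what a single SoC can address.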

HPE CEO Meg Whitman said that the secrets behind technologies that transform industries and change lives, and behind the next great scientific discovery, are hidden in the mountains of data we create every day, and that to realize this promise we cannot rely on the technologies of the past but need a computer built for the big data era.

Chief Architect Kirk Bresniker described the prototype as "a radical new departure" and said it will be a "game changer" that fundamentally changes how things are done.

The Computer Built for the Era of Big Data - YouTube

To put 160 TB in perspective, it is enough to hold about 160 million books, the equivalent of every book in the US Library of Congress five times over. The architecture can also scale up to a maximum of 4,096 YB (yottabytes; one yottabyte is one trillion terabytes), which is expected to make possible real-time insights that current systems cannot deliver.
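The unit arithmetic behind these comparisons can be checked directly. This is a minimal sketch assuming decimal (SI) units (1 TB = 10^12 bytes, 1 YB = 10^24 bytes) and using only the numbers quoted in the article; the per-book size it derives is an implied average, not a figure given in the source.

```python
# Decimal (SI) units, an assumption for this sketch.
TB = 10**12  # bytes in a terabyte
YB = 10**24  # bytes in a yottabyte

# 160 TB divided among 160 million books gives the implied average book size.
memory_bytes = 160 * TB
books = 160_000_000
bytes_per_book = memory_bytes // books  # 1_000_000 bytes, about 1 MB per book

# How many terabytes fit in one yottabyte, and in the 4,096 YB maximum.
tb_per_yb = YB // TB            # 10**12: one trillion TB per YB
max_pool_tb = 4096 * tb_per_yb  # 4.096 * 10**15 TB

print(bytes_per_book)  # 1000000
print(tb_per_yb)       # 1000000000000
print(max_pool_tb)     # 4096000000000000
```

Integer division is used so the results stay exact; with binary prefixes (TiB, YiB) the numbers would differ slightly but the orders of magnitude would not.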
