Miles Deep: a deep learning tool that automatically sorts pornographic videos into six categories

By Chen Tao Liao

Miles Deep, an AI tool that uses a neural network to classify every second of a pornographic video into one of six categories with 95% accuracy, has been published on GitHub. Using these classifications, it can edit videos automatically. For example, it can remove only the scenes that contain no sexual contact, or keep only specific acts.

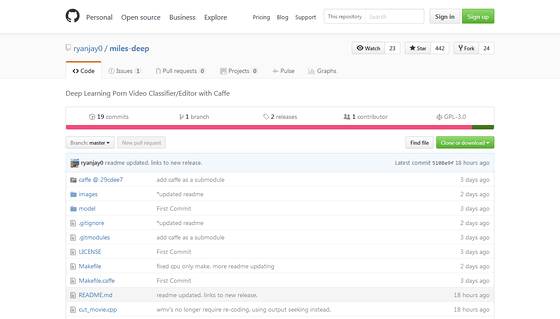

GitHub - ryanjay0/miles-deep: Deep Learning Porn Video Classifier/Editor with Caffe

https://github.com/ryanjay0/miles-deep

Yahoo has released Open nsfw, a deep neural network that recognizes NSFW ("not safe for work") images. Miles Deep uses a similar architecture, but while Open nsfw only judges whether an image is NSFW, Miles Deep can tell apart different degrees of nudity and different acts in porn videos. According to its author, Miles Deep is "the first porn classification and editing tool, as far as I know."

Miles Deep is also a general video classification framework that works with models for Caffe, an open source deep learning library. By rewriting the configuration file, you can use it to classify and edit videos in categories other than pornography. Detailed usage examples are provided on GitHub.

Trained on 36,000 images, Miles Deep classifies pornographic videos into the following six categories.

1: blowjob_handjob (stimulating the male genitals with the mouth or hand)

2: cunnilingus (stimulating the female genitals with the mouth)

3: other (everything else)

4: sex_back (rear-entry position)

5: sex_front (missionary position)

6: titfuck (stimulating the male genitals with the breasts)

Note that "sex_back" and "sex_front" are not defined by which way the performers' bodies face but by the position of the camera. Because porn videos are generally shot facing the female performer, a scene in which her genitals are seen from the front is classified as sex_front, and a scene in which they are seen from behind is classified as sex_back. At present, the tool also does not distinguish between types of intercourse. Because the training dataset contains only heterosexual couples, it cannot handle classifications such as same-sex pairings. The author plans to address this by adding more categories in the future.
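The per-second classification and scene editing described above can be illustrated with a minimal sketch. It assumes the classifier has already produced one category label per second of video; the function `keep_segments` and its `max_gap` parameter are hypothetical illustrations, not Miles Deep's actual code or command-line options. The sketch collects consecutive seconds whose label is in a chosen set into time ranges that a video editor could then cut from the original file.

```python
from typing import List, Optional, Sequence, Set, Tuple

# Hypothetical type: (start_second, end_second), with end exclusive.
Segment = Tuple[int, int]


def keep_segments(labels: Sequence[str], targets: Set[str], max_gap: int = 0) -> List[Segment]:
    """Return time ranges whose per-second label is in `targets`.

    Assumption: labels[i] is the predicted category for second i of the video.
    Runs separated by at most `max_gap` non-target seconds are merged.
    """
    runs: List[Segment] = []
    start: Optional[int] = None
    for second, label in enumerate(labels):
        if label in targets:
            if start is None:
                start = second  # a new run of target seconds begins
        elif start is not None:
            runs.append((start, second))  # the run ended at this non-target second
            start = None
    if start is not None:
        runs.append((start, len(labels)))  # the run lasts until the end of the video

    merged: List[Segment] = []
    for run_start, run_end in runs:
        # run_start - previous end = number of non-target seconds in between
        if merged and run_start - merged[-1][1] <= max_gap:
            merged[-1] = (merged[-1][0], run_end)  # bridge a short gap
        else:
            merged.append((run_start, run_end))
    return merged


if __name__ == "__main__":
    predicted = ["other", "other", "sex_front", "sex_front",
                 "other", "sex_back", "sex_back", "other"]
    wanted = {"sex_front", "sex_back"}
    print(keep_segments(predicted, wanted))             # [(2, 4), (5, 7)]
    print(keep_segments(predicted, wanted, max_gap=1))  # [(2, 7)]
```

With `max_gap=1`, the single "other" second between the two runs is absorbed, producing one continuous clip. Bridging short gaps like this is one way to keep an isolated misclassified second from chopping a scene into pieces.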

By Risto Kuulasmaa


in Software, Posted by darkhorse_log