"Images to Text" automatically generates descriptive text when you upload an image

By Michele Cannone

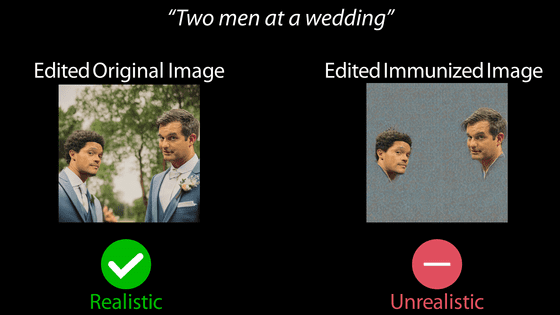

A system that recognizes images and performs positioning, type identification, measurement, and inspection is called "machine vision," because it reads images as a machine's "eyes." The core of machine vision is the image recognition algorithm, and current technology has already succeeded in producing advanced image recognition algorithms. Applying this, Google has developed a system that can read images and automatically generate descriptive text, and a demonstration page called "Images to Text" now lets anyone try it easily.

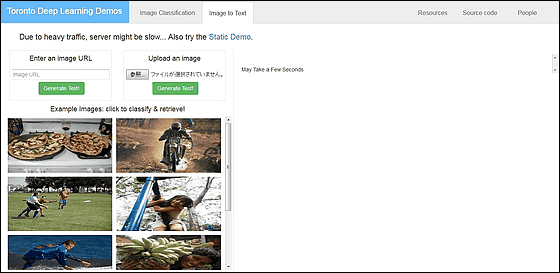

Images to Text - Toronto Deep Learning

http://deeplearning.cs.toronto.edu/i2t

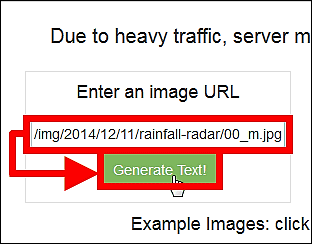

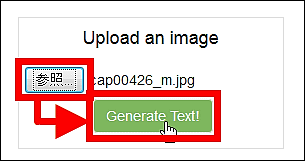

To generate descriptive text, you either enter an image URL or upload a file from your computer. First, pick an image from the Internet, paste its URL, and click "Generate Text!".

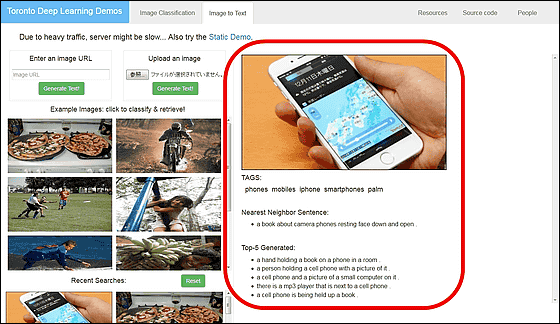

First, we generated text for this image of a hand holding an iPhone 6.

It takes about 5 to 10 seconds for the text to appear after clicking "Generate Text!". The result shows the image you used in the red-framed area, the elements the system read from the image under "TAGS", the best predicted description combining those elements under "Nearest Neighbor Sentence:", and five other candidate descriptions under "Top-5 Generated:". In this case, "phones", "mobiles", "iphone", "smartphones" and "palm" appear under "TAGS", and it is impressive that the system recognized the iPhone held in the hand.

To upload an image from your PC, click "Browse", select the image you want to upload, and click "Generate Text!".

We uploaded an image of soldiers on a tank, and the generated text was "Where soldiers are trained in rifle shooting methods." The description is not correct, but it is easy to see why the system produced it.

Sample images are listed under "Example Images:". Clicking the picture of pizzas on an electric stove produced an almost perfect sentence: "Two pizzas are placed on the stove, one with mushrooms and the other with basil."

For a picture of a little girl playing on playground equipment, the system explained that "a black-haired girl is playing on a hanging log." The other candidates, such as "the girl is trying to touch the pole" and "the girl is jumping over the pole," are not wrong either.

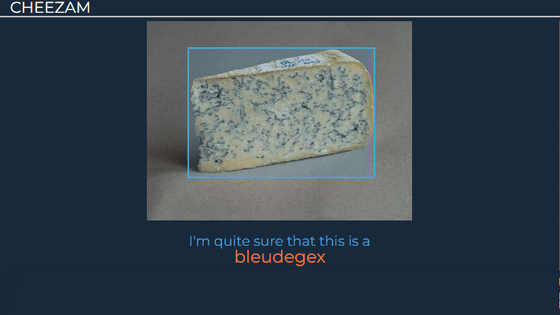

For an image of cats lined up in a row, the text "One brown dog is watching other animals" was generated. Humans are split into "dog people" and "cat people," and perhaps this image recognition system leans toward the dog side.

For an image of a hand holding a big tuna toy, the explanation was "two hands operating the keyboard and the mouse," so sometimes none of the answers is correct.

For an image of Steve Jobs, the system produced "a smiling man has a photo," and the text on the left side of the image was not read. Since Google's image search can sometimes identify people, Images to Text seems to use a different recognition system.

This system uses a technology called deep learning, which Google and the University of Toronto are jointly researching and developing. Apps that let you easily try this deep learning system have also been released.

Deep Learning on the App Store on iTunes

https://itunes.apple.com/us/app/deep-learning/id909131914

Deep Learning - Android application on Google Play

https://play.google.com/store/apps/details?id=utoronto.deeplearning

This time, I installed and used the iOS version. You can get it for free by tapping the "Get" button.

When you launch the app, it asks for access to the camera, so tap "OK".

The camera then starts up, so take a picture of whatever you want it to recognize.

The app then displays not a descriptive sentence but a list of words it identified from the image, as shown below.

If this technology develops further, it may become possible to write a blog post just by pointing a camera at things.


in Web Service, Review, Posted by darkhorse_log