Google develops technology that automatically generates descriptive captions for images

Humans can look at a photo and explain what kind of scene it shows, but this is very difficult for computers. Google researchers, however, have succeeded in developing a machine learning system with a human-like ability: given a picture, it automatically generates a caption that describes the scene.

Research Blog: A picture is worth a thousand (coherent) words: building a natural description of images

http://googleresearch.blogspot.jp/2014/11/a-picture-is-worth-thousand-coherent.html

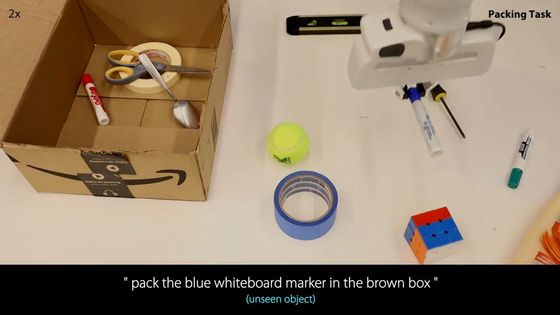

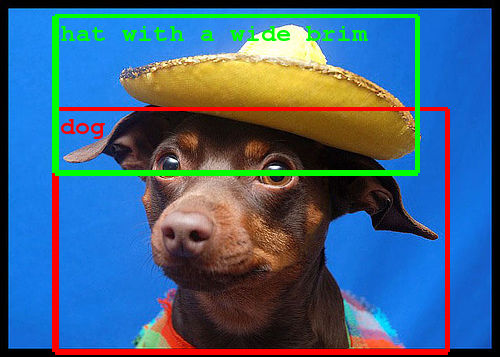

Recent research has greatly improved technologies such as object detection, classification, and labeling. However, describing a complex scene concisely, as a human would, requires a much deeper representation: a wide variety of objects must be recognized accurately and then expressed in natural language.

A system that recognizes images in place of human eyes, identifying objects and performing positioning, classification, measurement, inspection, and so on, is called machine vision, and the field is often described as research into building eyes for machines. If state-of-the-art machine vision could be properly combined with a natural language processing system capable of describing complex situations, the result should be a remarkable system.

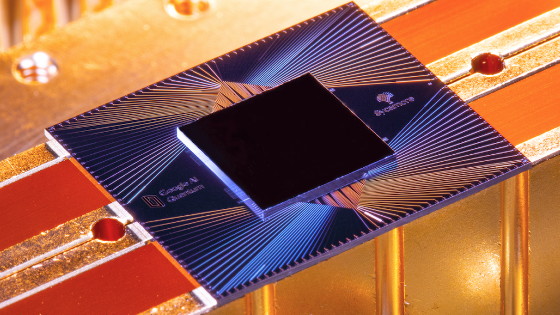

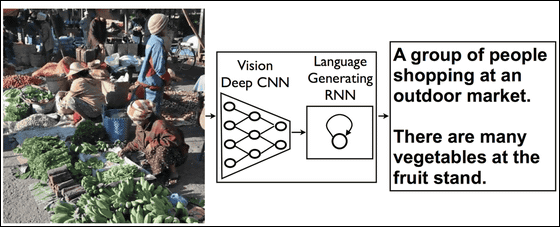

Essential to realizing this idea is the recurrent neural network (RNN), a derivative of the neural network, which simulates human brain function on a computer. The system uses an RNN to generate sentences and phrases from images and attach captions to photos.

First, a convolutional neural network (CNN) analyzes the scene shown in the photo. In an ordinary CNN-based image recognition algorithm, the final layer of the CNN decides, based on estimated likelihoods, what the object in the picture is. In the system Google built, however, this final layer is removed and an RNN for language generation is attached in its place, so that the CNN's rich information about the image is fed to the RNN. In this way, the data produced by an existing image recognition algorithm can be put to effective use by the language-generating RNN.
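The idea of feeding image features into a language-generating RNN can be sketched roughly as follows. This is a minimal illustration, not Google's implementation: the class `CaptionDecoder`, the tiny vocabulary, the plain tanh recurrent cell, the random (untrained) weights, and the greedy word choice are all assumptions made for the example, and the feature vector is a hand-written stand-in for what a CNN with its final layer removed would produce.

```python
import math
import random

# Hypothetical toy vocabulary; a real system would use thousands of words.
VOCAB = ["<start>", "<end>", "a", "dog", "person", "riding", "motorcycle"]
START, END = 0, 1


def rand_matrix(rows, cols, rng):
    """Small random weights; a trained model would learn these instead."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class CaptionDecoder:
    """Plain tanh RNN whose initial state comes from an image feature vector."""

    def __init__(self, feature_size, hidden_size, vocab_size, seed=0):
        rng = random.Random(seed)
        self.w_img = rand_matrix(hidden_size, feature_size, rng)  # features -> initial state
        self.w_hh = rand_matrix(hidden_size, hidden_size, rng)    # previous state -> state
        self.embed = rand_matrix(vocab_size, hidden_size, rng)    # one embedding row per word
        self.w_out = rand_matrix(vocab_size, hidden_size, rng)    # state -> word scores

    def generate(self, image_features, max_len=10):
        # The image features (not a class label) initialise the RNN state.
        hidden = [math.tanh(v) for v in matvec(self.w_img, image_features)]
        token, caption = START, []
        for _ in range(max_len):
            recurrent = matvec(self.w_hh, hidden)
            hidden = [math.tanh(r + e) for r, e in zip(recurrent, self.embed[token])]
            scores = matvec(self.w_out, hidden)
            token = max(range(len(scores)), key=scores.__getitem__)  # greedy choice
            if token == END:
                break
            caption.append(token)
        return caption


# Stand-in for the output of a CNN whose final classification layer was removed.
features = [0.2, -0.1, 0.7, 0.05]
decoder = CaptionDecoder(feature_size=4, hidden_size=8, vocab_size=len(VOCAB))
caption_ids = decoder.generate(features)
print(" ".join(VOCAB[i] for i in caption_ids))
```

Because the weights are random, the printed words are meaningless. The point is only the data flow: image features set the initial state, and each generated word is fed back in to produce the next one.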

Furthermore, because the whole system is trained directly on images and their captions, machine learning allows it to produce more accurate captions over time. The research team also succeeded in improving caption quality by training and evaluating the system on several openly available image datasets.
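The article does not spell out the training objective. A common choice for caption models of this kind, assumed here purely for illustration, is to minimize the negative log-likelihood of each reference caption, so that the model assigns high probability to the words people actually wrote. The function name and the numbers below are hypothetical.

```python
import math


def caption_negative_log_likelihood(step_probs, target_ids):
    """Sum of -log p(word_t | image, earlier words) over one reference caption."""
    if len(step_probs) != len(target_ids):
        raise ValueError("need one probability distribution per target word")
    return -sum(math.log(probs[word]) for probs, word in zip(step_probs, target_ids))


# Hypothetical 3-word vocabulary and a 2-word reference caption (word ids 0, then 2).
good_model = [
    [0.7, 0.2, 0.1],  # distribution predicted for the first word
    [0.1, 0.1, 0.8],  # distribution predicted for the second word
]
poor_model = [
    [0.2, 0.5, 0.3],
    [0.4, 0.4, 0.2],
]
target = [0, 2]

good_loss = caption_negative_log_likelihood(good_model, target)
poor_loss = caption_negative_log_likelihood(poor_model, target)
print(good_loss < poor_loss)  # the better-matching model gets the lower loss
```

Training would adjust the network weights to push this loss down across all image and caption pairs in the dataset.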

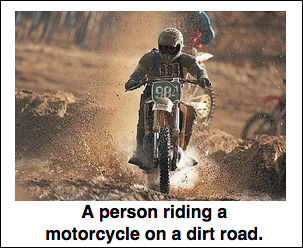

The following are captions that Google's system actually generated after analyzing photos.

· Successful examples

"A person riding a motorcycle on a dirt road."

"A group of young people playing a game of frisbee."

"A herd of elephants walking across a dry grass field."

· Captions with small mistakes

"Two dogs play in the grass.": in reality there are three animals

"A close up of a cat laying on a couch.": the photo is not actually a close-up

· The overall situation is close to correct, but individual words are wrong

"A skateboarder does a trick on a ramp.": it is actually a BMX rider performing the trick on the ramp

"A red motorcycle parked on the side of the road.": the motorcycle is actually pink, though it is indeed parked

· Failed examples

"A dog is jumping to catch a frisbee.": the dog is not jumping, and it does not even have a frisbee

"A refrigerator filled with lots of food and drinks.": the photo shows something completely different

This system, which combines a remarkable image recognition system with natural language processing, is expected to prove useful in several ways: helping visually impaired people understand what an image shows, standing in for images in areas with slow internet connections by sending a text description of the scene instead, and further improving the accuracy of Google Image Search.

in Note, Posted by logu_ii