Facebook launches the "Open Compute Project", fully disclosing photos and specifications of its latest in-house servers and data center along with its advanced server efficiency technology

The "Open Compute Project" has opened, packed with detailed data and specification documents covering the latest data center and server operation technology that Facebook developed through its own research. To build the most efficient infrastructure at the lowest possible cost, Facebook took the approach of designing and building its software and data center itself, from beginning to end.

Power efficiency is 38% better than in other data centers, costs are 24% lower, and transformer losses, which used to run from 11% to 17%, are now only 2%. Air conditioners for cooling have also been eliminated: outside air is taken in and cools the facility without any air-conditioning ductwork. In addition, the server chassis is a custom design whose CAD files can be downloaded, the AMD and Intel motherboards in use are published with their specifications, and the racks and other equipment are published with photos. It is quite impressive.
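To put the transformer loss figures in perspective, here is a minimal Python sketch. It assumes each loss percentage is the fraction of input power lost in the distribution stage, and the 1000 kW input is a hypothetical value chosen only for illustration; neither assumption comes from Facebook's documents.

```python
def delivered_power_kw(input_kw: float, loss_fraction: float) -> float:
    """Power remaining after a stage that loses `loss_fraction` of its input."""
    if not 0.0 <= loss_fraction < 1.0:
        raise ValueError("loss_fraction must be in [0, 1)")
    return input_kw * (1.0 - loss_fraction)


INPUT_KW = 1000.0  # hypothetical input power, not a figure from the article

# Loss fractions quoted in the article: 11% to 17% before, 2% now.
cases = [
    ("conventional, low end", 0.11),
    ("conventional, high end", 0.17),
    ("Open Compute design", 0.02),
]

for label, loss in cases:
    print(f"{label}: {delivered_power_kw(INPUT_KW, loss):.0f} kW delivered")
```

Under these assumptions, the same input delivers roughly 90 to 150 kW more to the servers than it would with the older loss figures.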

You can browse the publicly released images below.

Open Compute Project

http://opencompute.org/

The location is Prineville, Oregon, and the choice of site itself appears to be the first step toward improving the data center's power efficiency.

The data center uses a 48 VDC UPS system integrated with 277 VAC server power supplies.

This is inside the Facebook data center.

The triplet racks in use

Lined up in rows

The blue glow is beautiful; these are LED lights powered over Ethernet. This aisle also serves as the path through which air warmed by the servers' heat is carried away.

A door leading into the aisle that carries the air warmed by the servers. Cooling relies on a passive infrastructure that reduces, as far as possible, the energy spent keeping the equipment running.

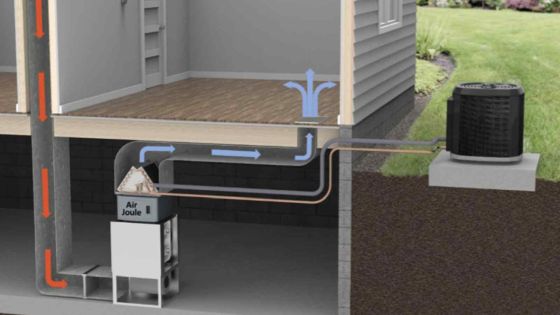

This is not a conventional air-conditioning system. It cools the air by evaporating mist, with the added benefit that moderate humidity can be maintained. The facility has achieved a PUE (Power Usage Effectiveness), an indicator of data center energy efficiency, of 1.073, well below 1.51, the state-of-the-art industry average as defined by the EPA.
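PUE is the ratio of total facility power to the power consumed by the IT equipment alone, so a value of 1.0 would mean no overhead at all. The following Python sketch shows what the two figures above imply. The function names are illustrative, and the comparison assumes both facilities carry the same IT load, which the article does not state.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("it_equipment_kw must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_per_it_watt(pue_value: float) -> float:
    """Watts spent on cooling, power distribution, etc. per watt of IT load."""
    return pue_value - 1.0


def facility_power_saving(pue_new: float, pue_reference: float) -> float:
    """Fractional cut in total facility power for the same IT load (assumption)."""
    return 1.0 - pue_new / pue_reference


PRINEVILLE_PUE = 1.073  # figure quoted in the article
REFERENCE_PUE = 1.51    # industry figure quoted in the article

print(f"Overhead at 1.073: {overhead_per_it_watt(PRINEVILLE_PUE):.3f} W per IT watt")
print(f"Overhead at 1.51:  {overhead_per_it_watt(REFERENCE_PUE):.3f} W per IT watt")
print(f"Total power saving: {facility_power_saving(PRINEVILLE_PUE, REFERENCE_PUE):.1%}")
```

In other words, for every watt delivered to the servers, about 0.07 W goes to everything else, against about 0.51 W at the reference figure.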

The cooling section through which the air flows. This is not a conventional design built around a huge central cooling plant, and along with that, the conventional inline UPS system has also been removed, together with the stage that converts 480 V to 208 V.

Huge fans that exhaust the warmed air outside

The section that draws cold outside air into the data center

Filters for the incoming outside air

The data center cooling system works like this. Starting from the left, fresh outside air is taken in first, then passed through the system that creates the mist, and cooled as the huge fans move it along. Filters in this section remove dust, and the cold air then flows into the data center. Part of the air warmed by the data center's heat is returned to the outside-air intake and the filter room, while the rest is discharged outside as it is by the natural pressure difference and the work of the fans.

The electronics lab where Facebook carried out its technology development

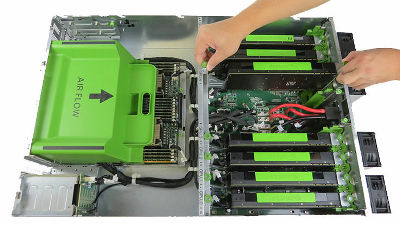

Here the components are mounted on the custom chassis. This is a Facebook server; many of these are combined.

The AMD motherboard.

It accepts two AMD Opteron 6100 processors and up to 24 DIMMs. It is a bare-bones design, stripped of many components that a conventional motherboard would carry, which reduces both power consumption and cost.

Heat sinks on the AMD motherboard

Airflow

The Intel motherboard.

It accepts two Intel Xeon 5500 or Intel Xeon 5600 processors and up to 18 DIMMs. Like the AMD motherboard, it is a bare-bones design, stripped of many components that a conventional motherboard would carry, which reduces both power consumption and cost.

Airflow

With the motherboard installed, it looks like this

The blue unit in the center is a cabinet rack holding the backup batteries

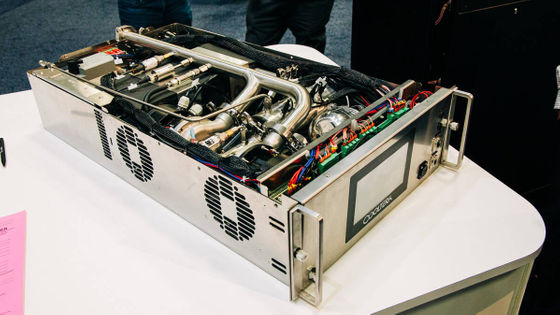

Its internal structure looks like this

Diagram explaining the mechanism

This chassis houses the customized motherboard and power supply. Sharp corners are eliminated as far as possible, screws are kept to a minimum, snap-in rails allow the motherboard to be attached and removed quickly, and hard disks slide into the drive bays so that they too can be installed and removed easily.

It uses a 450 W power supply: an AC/DC converter with a single 12.5 VDC output, a closed frame, and its own cooling, built for high performance. The converter has separate AC input and DC output connectors, plus a DC input connector for the backup voltage. Its defining feature appears to be a design that gives top priority to power efficiency.
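As a quick check on what those ratings mean, the Python sketch below derives the output current from the two figures given. It assumes the 450 W rating refers to DC output power delivered entirely on the 12.5 VDC rail, which the description above does not state explicitly.

```python
def output_current_amps(power_watts: float, voltage_volts: float) -> float:
    """Current drawn at a given DC power and voltage (I = P / V)."""
    if voltage_volts <= 0:
        raise ValueError("voltage_volts must be positive")
    return power_watts / voltage_volts


RATED_POWER_W = 450.0   # from the article
OUTPUT_VOLTAGE_V = 12.5  # from the article

# Assumption: the full 450 W is available on the single 12.5 VDC output.
print(f"{output_current_amps(RATED_POWER_W, OUTPUT_VOLTAGE_V):.1f} A")  # 36.0 A
```

A single rail at that current is consistent with the stripped-down motherboards, which have no need for the several separate voltages a conventional supply provides.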

Power supply diagram

By opening up its hardware specifications, Facebook aims to develop servers and data centers in the same way that traditional open source software is developed. As a first step, it has released the specifications and design drawings; as a second step, it hopes the community will improve them. By publishing the specifications and asking others to point out where they fall short, Facebook is trying to build even more efficient data centers.

Details of the chassis, racks, and other components are published as shown here.

in Hardware, Posted by darkhorse