The demo movie of "v.morish", which mixes the singing voices of Hatsune Miku and a professional singer, is amazing

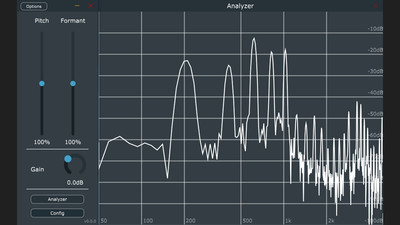

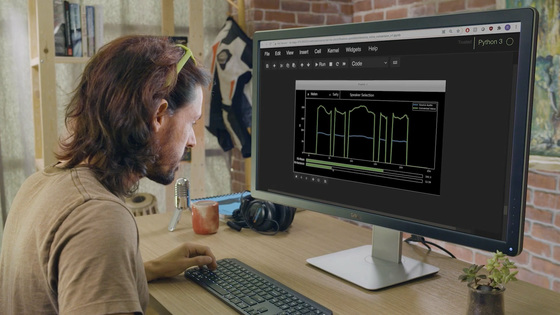

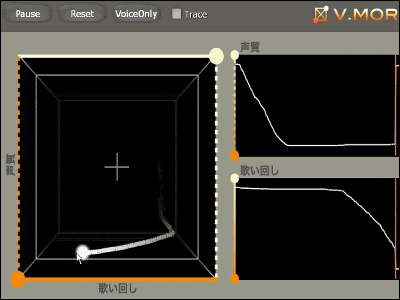

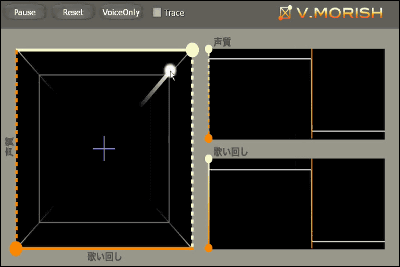

"v.morish" uses the high-quality speech analysis, conversion, and synthesis method "STRAIGHT" to separate a singing voice into "singing style" and "voice quality", and mixes each singer's voice in real time at independent ratios, so the morphing rate can be changed with the mouse even during playback. It was developed by researcher Masanori Morise of the Haruhiro Katayose Laboratory, Department of Information Science, School of Science and Technology, Kwansei Gakuin University.

Watching the actual movie and listening to the result makes it very clear what kind of singing voice comes out. You can tell that it is on a different level from the various existing similar technologies.

The singing voice produced by mixing a professional singer's voice with Hatsune Miku's is as follows.

Demonstration movie part 1 (WMV format: 3.77 MB; for various reasons it is also available on Nico Nico Douga)

※ As of 2008/09/20 15:05, the Kwansei Gakuin University server has become difficult to reach.

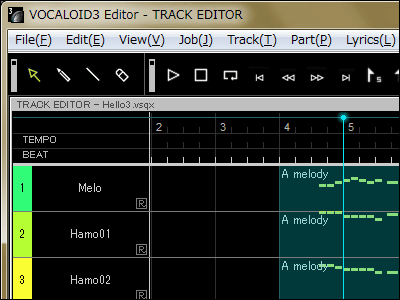

According to the explanation, "This is a singing voice created by mixing a professional singer's voice with Hatsune Miku's. The lower left of the figure is the human (first playback), and the upper right is the singing voice synthesized with Hatsune Miku (second playback). In the third playback, Hatsune Miku's voice quality is kept as it is while the singing style is moved to the human's. In the fourth playback, the voice changes from Hatsune Miku to the human in stages, first in voice quality and then in singing style."
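The idea of two independent ratios can be sketched in a few lines of Python. This is only an illustration, not the STRAIGHT or v.morish algorithm: it assumes that "singing style" can be stood in for by the pitch of a frame and "voice quality" by its spectral envelope, that both voices are already time-aligned, and that interpolating in the log domain is reasonable. The function names and the sample values are invented for this example.

```python
import math

def lerp_log(a, b, ratio):
    """Interpolate two positive values in the log domain.

    ratio = 0.0 returns a, ratio = 1.0 returns b.
    """
    return math.exp((1.0 - ratio) * math.log(a) + ratio * math.log(b))

def morph_frame(human, miku, style_ratio, quality_ratio):
    """Morph one time-aligned analysis frame with two independent ratios.

    Assumption: "singing style" is represented by the pitch (f0, in Hz) and
    "voice quality" by a spectral envelope (a list of positive magnitudes).
    Each ratio runs from 0.0 (human) to 1.0 (Hatsune Miku).
    """
    f0 = lerp_log(human["f0"], miku["f0"], style_ratio)
    envelope = [
        lerp_log(h, m, quality_ratio)
        for h, m in zip(human["envelope"], miku["envelope"])
    ]
    return {"f0": f0, "envelope": envelope}

# Hypothetical single-frame data, invented for illustration only.
human = {"f0": 220.0, "envelope": [1.0, 0.5, 0.25]}
miku = {"f0": 440.0, "envelope": [4.0, 2.0, 1.0]}

# Third playback in the explanation: Miku's voice quality, human singing style.
third = morph_frame(human, miku, style_ratio=0.0, quality_ratio=1.0)

# A halfway point on both axes.
half = morph_frame(human, miku, style_ratio=0.5, quality_ratio=0.5)
```

Because the two ratios are separate arguments, the four playbacks in the explanation correspond to different paths through the same two-dimensional space: the corners are the pure human and pure Miku voices, and the third playback sits at the corner where only the voice quality has moved.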

In addition, an MP3 file described as a "sound source that mixes a professional singer's singing with Hatsune Miku's on a widely known song" has also been made public.

Pori.mp3 (MP3 format: 241 KB)

Ultimately, the aim is for singing characteristics to be transferable simply by pressing a button such as "sing in the style of ~~" or "wild voice quality", applied either to your own singing voice or to Hatsune Miku's voice. There are also plans to distribute, within 2008, a C language library that makes speech analysis and synthesis based on "STRAIGHT" easy to perform.

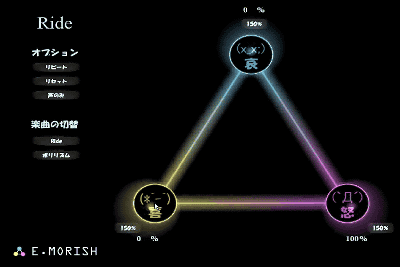

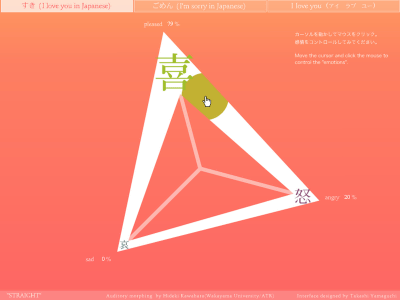

Furthermore, a demonstration movie of "e.morish", which lets you mix one singer's "joy", "anger", and "sorrow" singing at any ratio, has been released on the following page. This is also amazing.

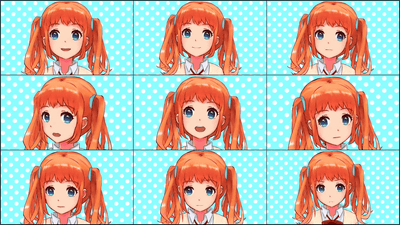

Interface for mixing singing voice

To get a feel for it, the following "emotional voice morphing" Flash demo (exhibited at a special exhibition at the National Museum of Emerging Science and Innovation) is easier to understand. "I Love You" in particular is easy to follow.

The original voices uttered by the voice actor are placed at the vertices of a triangle. When you click on a side of the triangle or on a line passing through its center, the emotions are mixed in proportions determined by the clicked position, and you can hear the synthesized sound.
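One common way to turn a position in a triangle into mixing proportions is barycentric coordinates. The sketch below assumes that is how the click position is converted into weights, which the demo itself does not confirm. The vertex labels (taken from the "joy", "anger", "sorrow" of e.morish), the screen coordinates, and the parameter vectors are all hypothetical.

```python
def barycentric_weights(p, a, b, c):
    """Return the weights (wa, wb, wc) of point p relative to triangle a, b, c.

    The weights sum to 1; a point on vertex a gives (1, 0, 0), and a point
    on the side a-b gives wc == 0.
    """
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("the three vertices are collinear")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb

def mix(weights, voices):
    """Weighted sum of three equal-length parameter vectors."""
    return [
        sum(w * voice[i] for w, voice in zip(weights, voices))
        for i in range(len(voices[0]))
    ]

# Hypothetical screen positions of the three emotion vertices.
JOY, ANGER, SORROW = (0.0, 0.0), (1.0, 0.0), (0.0, 1.0)

# Hypothetical per-emotion parameter vectors (not real analysis data).
voices = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]

# A click on the midpoint of the joy-anger side mixes those two equally
# and leaves sorrow out.
weights = barycentric_weights((0.5, 0.0), JOY, ANGER, SORROW)
mixed = mix(weights, voices)
```

Clicking the center of the triangle would give each emotion a weight of one third, which matches the description of lines passing through the center.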

Although it is not limited to Hatsune Miku, as a technique for making synthesized singing sound more human there is also "VocaListener", famous under the nickname "Bokarisu", a system that automatically estimates singing synthesis parameters that mimic a user's singing.

VocaListener (in Japanese)

Also, as software that can actually correct a vocal's pitch and intonation, there is the already quite famous "Auto-Tune".

Antares Auto-Tune Evo Product Information

When you listen to a CD, the pitch and intonation are sometimes almost perfect, and sometimes go beyond the singer's own ability. Rather than being "the recorded moment of a miracle" captured by singing take after take, it seems that in most cases this is the result of correction with such software. Put simply, it is like retouching gravure photos with Photoshop.

Incidentally, at the CEDEC Lab session "Music Information Processing Technology and Entertainment", held on September 11 at "CEDEC 2008" for game developers, Professor Haruhiro Katayose of the School of Science and Technology at Kwansei Gakuin University was asked, "Can songs that reach the top of the Oricon chart be made using this speech synthesis technology?" He answered, "If we are lucky, one will appear in one or two years."

So it seems that, as an extension of these technologies, something that deals a blow to the harsh rights structure built on existing copyright will appear in the near future.


in Video, Posted by darkhorse