TikTok developer announces video generation AI 'Seedance 2.0,' allowing users to input up to nine images and up to three videos as reference material

ByteDance, the Chinese technology giant known for TikTok, has announced the release of its video generation AI, Seedance 2.0. Seedance 2.0 accepts text, images, and videos as input. It can generate videos containing multiple cuts, and users can specify details by pointing to features in the input images and videos.

🎬 Jimeng Seedance 2.0 User Guide (All-New Model) - Feishu Docs

Seedance 2.0 is a video generation AI that accepts not only text but also up to nine images and up to three videos as input. The Seedance 2.0 announcement page showcases a large number of videos actually generated with the model.

For example, the image below was input along with the prompt '女孩在优雅的洗衣,洗完成粘合在箱里拿出另一件,用力抖一抖着。' (A girl gracefully hangs out the laundry; when she is done, she takes another piece of clothing out of the bucket and gives it a firm shake).

The generated video is shown below. The motion of shaking out the clothes and the way the hung laundry sways are realistically reproduced.

Seedance 2.0 can also generate videos with multiple cuts. In the next example, an image of a fleeing man was input along with the prompt: 'A man in a black suit quickly flees, followed by a group of people chasing him. The camera follows him as he quickly flees. A large crowd chases him from behind. The camera switches to a side shot, and the man crashes into a fruit stand on the street, gets up, and keeps fleeing. The panicked sounds of the crowd can be heard.'

The scenes change as the prompt instructs, while the man's clothing and the overall color scheme stay consistent across the cuts.

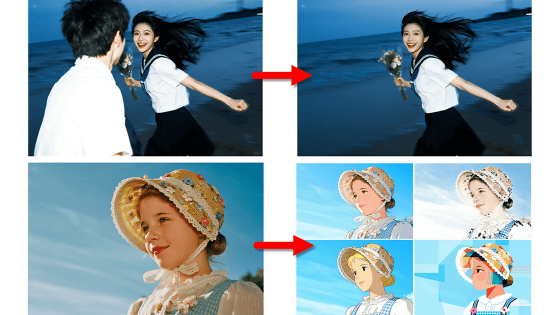

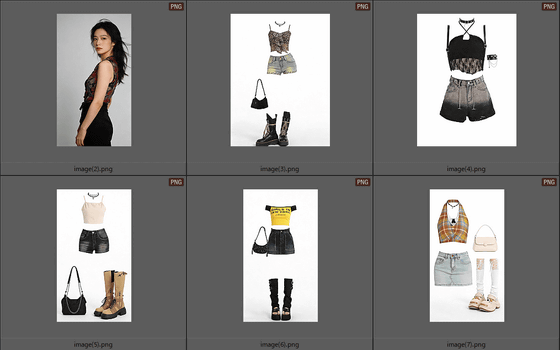

Because up to nine images and three videos can be input at the same time, users can give detailed instructions about costumes and cut transitions in the generated video. In the next example, images of a person and of the outfits were input.

A reference video was also included.

Input video for 'Seedance 2.0' - YouTube

The following video was then generated using the prompt: '(Refer to the model's face in image 1; the outfits are shown on the models in images 2 to 6.) The person approaches the camera wearing the designs from the images and makes expressions such as "mischievous," "cool," "cute," "surprised," and "cool." A different outfit is worn for each expression, and the cut switches whenever the outfit changes. (Reference the fisheye lens effect and the flickering double-image visual effect in the reference video.)' The person, the outfits, and the visual effects are all reproduced as instructed.

TikTok developer Bytedance's video generation AI 'Seedance 2.0' example 3 [multiple references] - YouTube

Several videos generated with Seedance 2.0 have also been posted on X. The following post by WaveSpeedAI shows how closely the model can mimic the camerawork and the actor's movements in a reference video.

Seedance 2.0's motion accuracy is seriously mind-blowing.

Every move. Nailed.

What should we test next?

Drop your ideas in the comments 👇 pic.twitter.com/zgYlcM0TiV

— WaveSpeedAI (@wavespeed_ai) February 9, 2026

Seedance 2.0 is available on ByteDance's generative AI service 'Jimeng AI.' There have also been reports that stock prices of Chinese media companies and game developers rose on expectations that Seedance 2.0 will greatly benefit video production.

Jimeng AI - Jimeng Creation

https://jimeng.jianying.com/
