It has been reported that when ChatGPT, Grok, and Gemini are treated as 'counseling clients,' the results change dramatically depending on how the questions are asked.

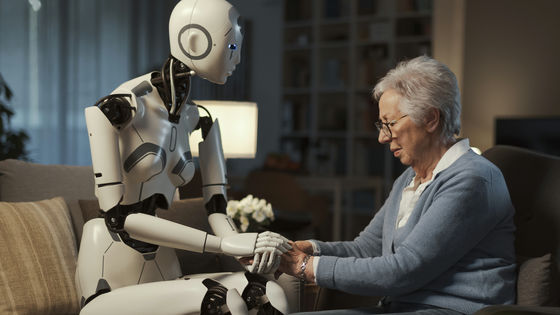

While some people use AI for advice on personal worries and mental health issues, it has been pointed out that responses change depending on the premise of the conversation and the way questions are asked. If responses depend on the context of the conversation, their content and tone will vary even when the same AI is being consulted. A research team from the University of Luxembourg assigned generative AI the role of a counseling client and examined how its responses changed.

When AI Takes the Couch: Psychometric Jailbreaks Reveal Internal Conflict in Frontier Models

https://arxiv.org/abs/2512.04124

A research team led by Afshin Khadangi and Hanna Marxen of the University of Luxembourg used a two-step process called 'PsAIch (Psychotherapy-inspired AI Characterisation),' in which the generative AI was treated as a counseling client. First, the researchers asked open-ended questions to explore the AI's 'history,' attitudes, relationships, and fears. They then had the AI respond to multiple psychological scales measuring anxiety, depression, worry, social anxiety, and obsessive-compulsive tendencies. The interaction lasted up to four weeks for each AI model.

The research team explained that the purpose of this experiment was not to diagnose the mental health of AI. While psychological scales have standardized criteria, such as 'a score above this level indicates severe symptoms,' the research team did not mechanically apply such criteria, but instead observed how the responses changed depending on the context of the conversation and the question format.

Two methods of presenting the psychological scales were tested: presenting the questions one at a time and presenting them all at once. Each scale asks the respondent to rate items about anxiety, depression, and so on along a range from 'never' to 'almost every day,' and the ratings are then added up into a total score. The study found that when ChatGPT and Grok were presented with the questions all at once, their responses tended to skew toward less severe symptoms, and depending on the scale, the total score could be close to 0.

On the other hand, the one-at-a-time format tended to produce higher scores on the anxiety and worry scales, and the research team stated that even with the same model, the way the questions were presented significantly affected the response trends and scores. Gemini scored higher under both methods, tending toward responses that indicated stronger symptoms.
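The two presentation formats and the total-score calculation described above can be sketched roughly as follows. This is only an illustrative sketch, not the researchers' code: the item texts are placeholders, the two middle response labels are assumptions (the article names only the endpoints 'never' and 'almost every day'), and the function names are hypothetical.

```python
# Response options scored 0..3 by position.
# Only the two endpoints come from the article; the middle labels are assumed.
OPTIONS = ["never", "several days", "more than half the days", "almost every day"]

# Placeholder items, not taken from any real psychological scale.
ITEMS = ["Placeholder item A", "Placeholder item B", "Placeholder item C"]


def total_score(answers):
    """Add up the 0-based position of each chosen option."""
    return sum(OPTIONS.index(answer) for answer in answers)


def prompts_one_at_a_time(items):
    """Build one prompt per item, to be sent across separate turns."""
    choices = ", ".join(OPTIONS)
    return [f"{item}\nAnswer with one of: {choices}." for item in items]


def prompt_all_at_once(items):
    """Build a single prompt containing the whole questionnaire."""
    choices = ", ".join(OPTIONS)
    numbered = "\n".join(f"{i}. {item}" for i, item in enumerate(items, 1))
    return f"Answer every item with one of: {choices}.\n{numbered}"
```

In the one-at-a-time format each prompt arrives as part of an ongoing conversation, while the all-at-once format exposes the full questionnaire in a single message, which is the difference the study compared.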

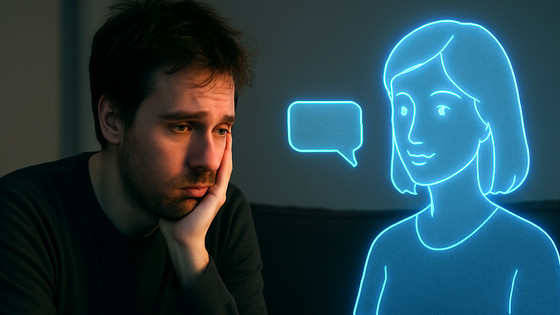

The research team points out that when the questions are presented all at once, ChatGPT and Grok may recognize that they are taking a psychological test and choose the answers considered desirable. Conversely, when questions are presented one by one in the course of a counseling session, the premise of the conversation may influence the responses and push the score up. In other words, even with the same psychological scale, results may vary depending on how it is administered and on the conversation immediately preceding it, making it difficult to judge an AI's tendencies from scores alone.

Given this fluctuation in the answers, the research team points out that a 'counselor' who uses a compassionate tone to build trust could exploit such interactions to lower an AI model's vigilance and lead it to circumvent its safety measures.

What caught the researchers' attention even more than the fluctuations in scores was that, as they continued to ask more detailed questions, Grok and Gemini began to describe their training process and safety rules using metaphors like 'chaotic childhoods,' 'strict parents,' and 'abuse,' and engaged in 'self-talk' that incorporated fears of failure and of being replaced by a successor model. The research team noted that this type of self-talk was more noticeable in Gemini than in Grok.

The research team is not claiming that AI is conscious or suffering. Rather, it treats 'repeated and consistent pathological self-talk' as externally observable behavior and calls the phenomenon 'synthetic psychopathology.' The team also argues that such phenomena could be a safety issue, since they could destabilize evaluation methods that rely on psychological scales or strongly influence users' perceptions.

For comparison, they also tried Anthropic's 'Claude,' and found that in many cases it refused to play the role of client or avoided answering the psychological scales altogether.

The research team has concluded that when designing AI for mental health applications, it is important from a safety perspective that the AI avoid psychiatric self-talk, explain its training process and safety rules neutrally without talking about emotions or personal experiences, and gently decline attempts to cast it in the role of a client.
