Research shows that reasoning models improve accuracy by simulating 'a meeting of multiple people with different personalities and knowledge'

Among large language models, 'reasoning models' work through multiple thought processes before generating their final output, which improves accuracy. A team of researchers from the University of Chicago and Google reported that reasoning models achieve this by simulating a meeting of multiple agents with different personality traits and expertise.

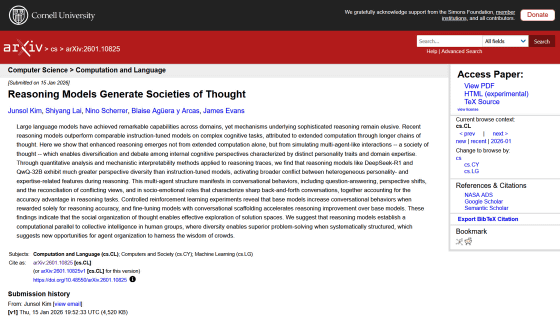

[2601.10825] Reasoning Models Generate Societies of Thought

AI models that simulate internal debate dramatically improve accuracy on complex tasks | VentureBeat

https://venturebeat.com/orchestration/ai-models-that-simulate-internal-debate-dramatically-improve-accuracy-on

The research team analyzed the reasoning steps of leading reasoning models such as DeepSeek-R1 and QwQ-32B and found that, without explicit instructions, they autonomously develop a simulated internal meeting that the team calls a 'society of thought.' This internal meeting resembles a multi-agent discussion that includes diverse perspectives, personality traits, and domain knowledge.

The premise of the 'society of thought' is that reasoning models refine their logic by mimicking social, multi-agent interactions. This hypothesis builds on the idea that human reason evolved as a social process of solving problems through discussion and engagement with different perspectives.

The research team argues that 'cognitive diversity resulting from diversity in expertise and personality traits enhances problem-solving ability, especially when genuine disagreements are involved.' By simulating conversations between different internal agents, the reasoning model can check its inferences for accuracy and problems and avoid pitfalls such as undesirable bias and sycophancy.

In a reasoning model like DeepSeek-R1, the 'society of thought' emerges naturally during the reasoning process, so no separate model or prompt is needed to force the simulation of an internal meeting, the research team says.

When the research team gave DeepSeek-R1 a complex organic chemistry synthesis problem, it simulated a discussion between multiple internal agents, including a 'Planner' and a 'Critical Verifier.'

The Planner initially proposed a standard reaction pathway, but then the Critical Verifier, characterized by high conscientiousness and low agreeableness, stepped in to challenge the assumptions and present new facts. Through this adversarial discussion, DeepSeek-R1 uncovered the error in its reasoning, reconciled the conflicting views, and revised the synthetic pathway.

In a creative task in which the model was asked to rewrite the sentence 'I flung my hatred into the burning fire,' the reasoning model created two agents: a 'Creative Ideator' and a 'Semantic Fidelity Checker.' It simulated a discussion in which the Semantic Fidelity Checker criticized the Creative Ideator's initial proposal as 'too far removed from the original sentence,' and then drew a final conclusion.

In tests where the model was given mathematical puzzles, it reportedly attempted to solve the problems in a monologue-like manner in the early stages of training, but as training progressed it spontaneously split into two distinct personas and arrived at the answer through mutual discussion.

These results cast doubt on the conventional hypothesis that the longer a reasoning model thinks, the better its accuracy. Instead, they suggest that accuracy is driven by processes such as 'looking at the answer from a different perspective,' 'verifying previous assumptions,' 'backtracking,' and 'exploring alternatives.'

While developers can improve the reasoning capabilities of large language models by encouraging them to adopt a 'society of thought,' simply encouraging the model to hold internal discussions isn't enough. 'It's not enough to just have discussions,' says James Evans, a professor at the University of Chicago and co-author of the paper. 'It's important to have different views and ways of thinking that make discussion inevitable and allow you to explore and distinguish between options.'

Technology news outlet VentureBeat said, 'Developers should design prompts that assign opposing traits, like "risk-averse compliance officer" versus "growth-oriented product manager," rather than generic roles, and encourage the model to distinguish between the options.'
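As a rough illustration of that advice, the sketch below assembles a prompt that assigns opposing personas and asks the model to choose between options. The two persona names come from the VentureBeat quote. Everything else is a hypothetical assumption made for this example: the `Persona` class, the `build_debate_prompt` function, the stance descriptions, the sample task, and the wording of the instructions. It is not taken from the paper or from any particular API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """Hypothetical container for one debating role."""
    name: str
    stance: str  # illustrative description of what this persona argues for


def build_debate_prompt(task: str, personas: list[Persona]) -> str:
    """Build a plain-text prompt that asks for a debate between personas."""
    if len(personas) < 2:
        raise ValueError("need at least two personas with opposing traits")
    lines = ["Work through the task below as a discussion between these personas:"]
    for persona in personas:
        lines.append(f"- {persona.name}: {persona.stance}")
    # Ask for genuine disagreement and an explicit choice between options.
    lines.append(
        "Have each persona challenge the others' assumptions, then state "
        "which option you choose and why the alternatives were rejected."
    )
    lines.append("")
    lines.append(f"Task: {task}")
    return "\n".join(lines)


# Persona names follow the VentureBeat example; stances and task are made up.
prompt = build_debate_prompt(
    "Should we launch the new referral feature this quarter?",
    [
        Persona("Risk-averse compliance officer",
                "flags legal and regulatory exposure before anything ships"),
        Persona("Growth-oriented product manager",
                "pushes for fast launches that increase user adoption"),
    ],
)
print(prompt)
```

The resulting string can be passed to whichever model interface is in use. The point of the design is that the personas disagree by construction, rather than being generic roles.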

This research also suggests that when training and fine-tuning large language models, it is important to consider not only 'data that leads directly to the answer' but also 'data that leads to discussions that lead to the answer.' In fact, when Evans and his colleagues trained a model using 'conversational data that leads to the wrong answer,' they achieved the same accuracy as when they trained it using 'data that leads to the correct answer.'

Evans also argued that the internal discussions of reasoning models should be exposed to users: 'We need new interfaces that systematically visualize these internal discussions so that we can participate in the process of arriving at the right answer.'

in AI, Posted by log1h_ik