Introducing the lightweight, high-performance coding agent 'Qwen3-Coder-Next'

Alibaba has announced Qwen3-Coder-Next, an open-weight language model built for coding agents and local development. With 80B total parameters, it delivers strong coding and agent capabilities while significantly reducing inference costs.

🚀 Introducing Qwen3-Coder-Next, an open-weight LM built for coding agents & local development.

What's new:

🤖 Scaling agentic training: 800K verifiable tasks + executable envs

📈 Efficiency–Performance Tradeoff: achieves strong results on SWE-Bench Pro with 80B total params and… pic.twitter.com/P7BmZwdaQ9

— Qwen (@Alibaba_Qwen) February 3, 2026

1/4 Introducing Qwen3-Coder-Next: Our latest open-weights model designed specifically to power the next generation of autonomous Coding Agents.

Built on Qwen3-Next, this model is engineered to handle complex, long-horizon programming tasks with unprecedented efficiency.… pic.twitter.com/VzWObqdd8U

— Tongyi Lab (@Ali_TongyiLab) February 3, 2026

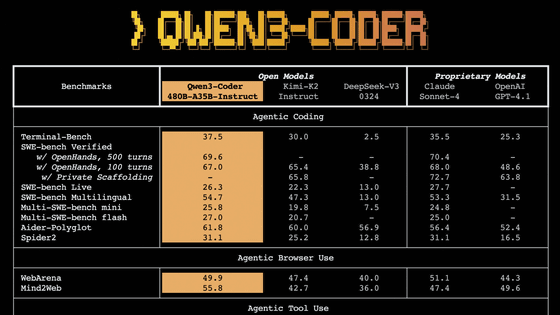

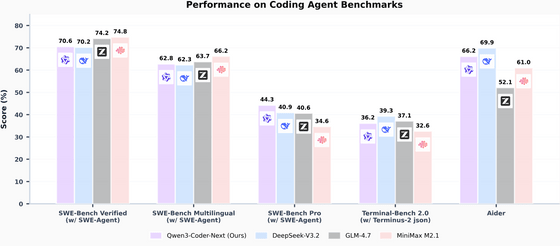

The graph below compares the coding capabilities of Qwen3-Coder-Next, DeepSeek-V3.2, GLM-4.7, and MiniMax M2.1. Qwen3-Coder-Next scored over 70% on SWE-Bench Verified and outperformed the other three models on the more demanding SWE-Bench Pro.

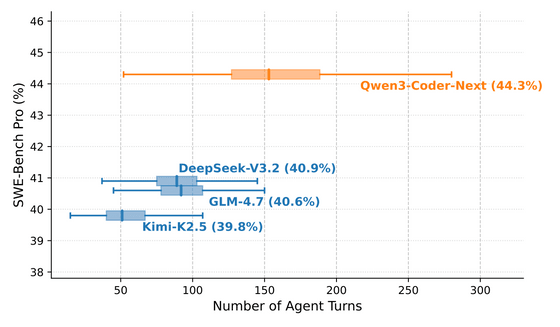

Qwen3-Coder-Next achieved its strong SWE-Bench Pro results by scaling up the number of agent turns (interactions), and Alibaba says this is evidence that the model excels at long-horizon reasoning in multi-turn agent tasks.

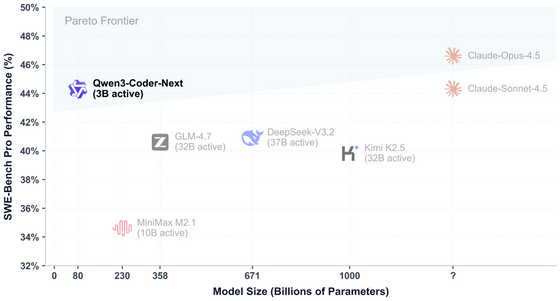

The model has 80 billion parameters in total, of which 3 billion are active. Alibaba emphasizes that the model performs strongly for its scale, achieving an 'improved trade-off between efficiency and performance.'
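To put those figures in perspective, the short Python sketch below works out what share of the model is used for each token. It takes the 80B total from the announcement and the roughly 3B active parameters indicated by the 'A3B' suffix of the base model name; the function and variable names are illustrative only.

```python
# Parameter counts as reported: 80B total, about 3B active per token.
TOTAL_PARAMS = 80e9
ACTIVE_PARAMS = 3e9


def active_fraction(active: float, total: float) -> float:
    """Return the share of parameters that take part in processing each token."""
    return active / total


fraction = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
print(f"Active share per token: {fraction:.2%}")  # prints "Active share per token: 3.75%"
```

In other words, under these figures fewer than 4 in every 100 parameters are involved in any single token, which is where the reduced inference cost comes from.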

Qwen3-Coder-Next is built on Qwen3-Next-80B-A3B-Base, which adopts a novel architecture combining hybrid attention and MoE, and was agentically trained at scale through large-scale executable task synthesis, environment interaction, and reinforcement learning. Rather than relying solely on parameter scaling, the training emphasizes long-horizon reasoning, tool usage, and failure recovery, which are essential for real-world coding agents.
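For readers unfamiliar with MoE (mixture of experts), the toy Python sketch below illustrates the general idea of top-k expert routing, in which only a few experts run for each input. It is not Qwen's implementation: the four experts, the fixed gate scores, and the choice of k=2 are all hypothetical values chosen for illustration, and in a real model a learned router computes the gate scores from the input.

```python
import math


def softmax(scores):
    """Convert raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, gate_scores, k):
    """Evaluate only the selected experts and mix their outputs by weight."""
    weights = route_top_k(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Hypothetical setup: four tiny "experts" that just scale their input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
gate_scores = [0.1, 2.0, -1.0, 1.5]  # assumed fixed here for illustration

selected = route_top_k(gate_scores, k=2)
output = moe_forward(1.0, experts, gate_scores, k=2)
print(sorted(selected))  # indices of the experts that actually ran
print(output)
```

Because the unselected experts are never evaluated, the compute per input depends on k rather than on the total number of experts, which is the general reason an MoE model can have far more total parameters than active ones.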

The model weights are available on Hugging Face and ModelScope.

in AI, Posted by log1p_kr