Introducing 'GLM-4.7,' a Chinese-made open model that is strong in coding and surpasses Gemini 3.0 Pro in some tests

GLM-4.7: Advancing the Coding Capability

https://z.ai/blog/glm-4.7

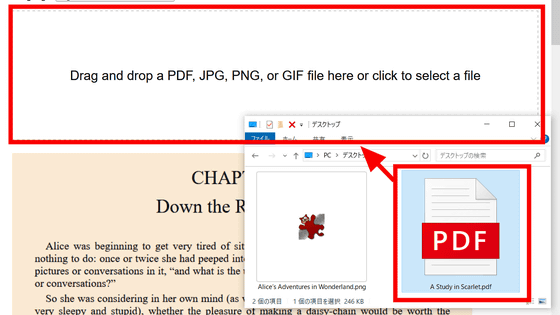

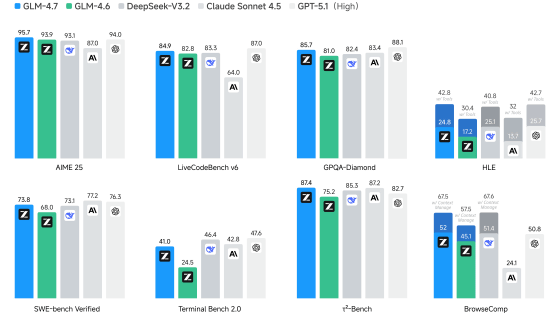

GLM-4.7 delivers significant performance improvements over its predecessor, GLM-4.6, including higher-quality UI in the web pages it generates. Below is the result of entering the prompt 'Draw a voxel art including a garden with prominent cherry blossoms and a pagoda in a single HTML file' into GLM-4.6.

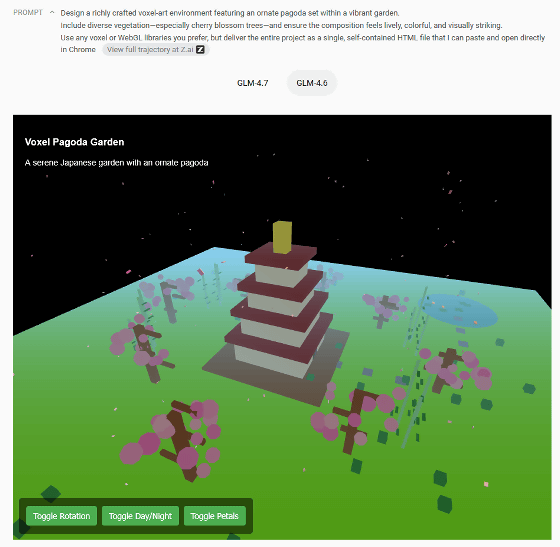

Here is the result of entering the same prompt into GLM-4.7. Its output is of much higher quality.

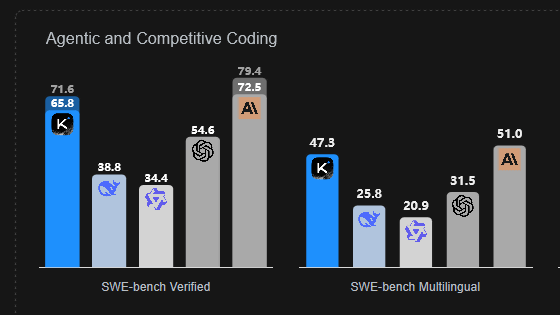

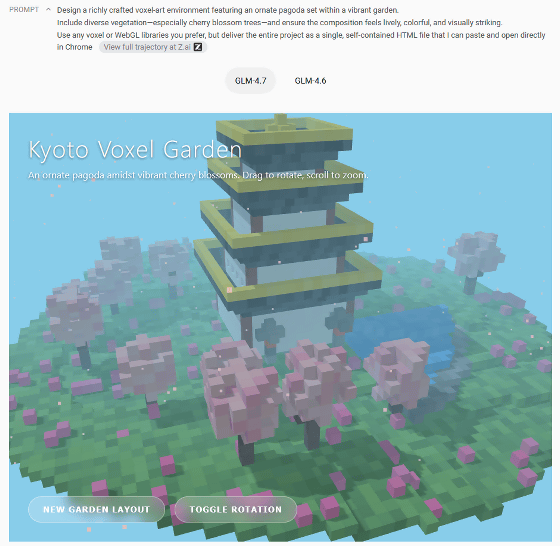

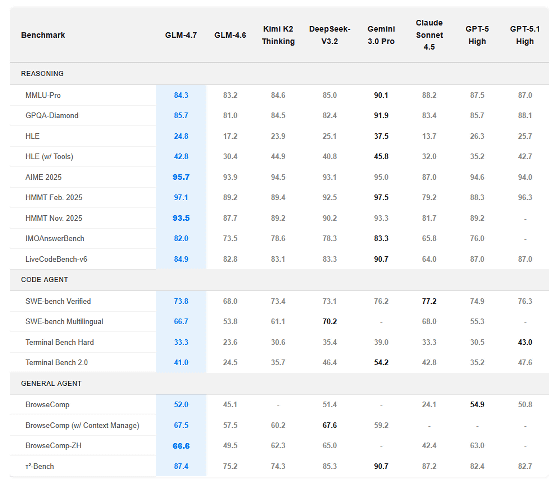

Below is a graph comparing benchmark results for 'GLM-4.7', 'GLM-4.6', 'DeepSeek-V3.2', 'Claude Sonnet 4.5', and 'GPT-5.1 (High)'. GLM-4.7 scored higher than GPT-5.1 (High) on 'AIME 2025', which measures mathematical performance.

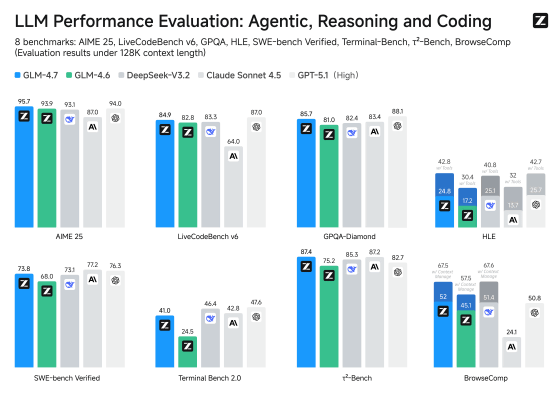

In a broader comparison of benchmark scores for 'GLM-4.7', 'GLM-4.6', 'Kimi K2 Thinking', 'DeepSeek-V3.2', 'Gemini 3.0 Pro', 'Claude Sonnet 4.5', 'GPT-5 (High)', and 'GPT-5.1 (High)', GLM-4.7 outperformed Gemini 3.0 Pro on tests such as AIME 2025 and HMMT Nov. 2025.

The GLM-4.7 model weights are available at the following link:

zai-org/GLM-4.7 · Hugging Face

https://huggingface.co/zai-org/GLM-4.7

GLM-4.7 requires datacenter GPUs such as the H100 or H200. Running GLM-4.7 at BF16 precision with a 128K context window requires 32 H100s or 16 H200s. For more information, see the Japanese README in the GLM-4.5 repository below.

GLM-4.5/README_ja.md at main · zai-org/GLM-4.5 · GitHub

https://github.com/zai-org/GLM-4.5/blob/main/README_ja.md
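To put the 32 x H100 and 16 x H200 figures in perspective, the short script below does the back-of-the-envelope memory arithmetic. It is a rough sketch, not a sizing tool, and several inputs are assumptions that do not come from the article: 80 GB of HBM per H100 (SXM), 141 GB per H200, 2 bytes per parameter at BF16, and a hypothetical total parameter count of 355 billion that you should replace with the figure on the Hugging Face model card. Whatever remains after the weights is shared by the KV cache for long contexts, activations, and framework overhead.

```python
"""Rough GPU memory arithmetic for the BF16 configurations quoted above.

Assumptions (not from the article): H100 SXM = 80 GB HBM, H200 = 141 GB HBM,
BF16 = 2 bytes per parameter, and a hypothetical parameter count that should
be replaced with the value from the model card.
"""

GPU_MEMORY_GB = {"H100": 80, "H200": 141}  # assumed per-GPU HBM capacity (decimal GB)
BF16_BYTES_PER_PARAM = 2  # bfloat16 stores each weight in 16 bits


def aggregate_memory_gb(gpu: str, count: int) -> int:
    """Total HBM across `count` GPUs of the given model."""
    return GPU_MEMORY_GB[gpu] * count


def bf16_weight_gb(total_params: float) -> float:
    """Memory needed for the weights alone at BF16, in decimal gigabytes."""
    return total_params * BF16_BYTES_PER_PARAM / 1e9


if __name__ == "__main__":
    # Hypothetical parameter count: replace with the value from the model card.
    assumed_params = 355e9
    weights = bf16_weight_gb(assumed_params)
    for gpu, count in (("H100", 32), ("H200", 16)):
        total = aggregate_memory_gb(gpu, count)
        headroom = total - weights
        print(f"{count} x {gpu}: {total} GB total, ~{weights:.0f} GB for weights, "
              f"~{headroom:.0f} GB left for KV cache, activations, and overhead")
```

Under these assumptions, 32 H100s provide 2,560 GB and 16 H200s provide 2,256 GB, so the two quoted setups offer roughly comparable total memory; the H200's larger per-GPU capacity is what halves the GPU count.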


in AI, Posted by log1o_hf