Google proposes 'Project Suncatcher' to equip artificial satellites with AI chips and launch them into space

by NASA Goddard Space Flight Center

With the rapid development of AI, demand for computing power and energy is exploding. On November 4, 2025, Google announced a new research project called 'Project Suncatcher,' which explores scaling up AI (artificial intelligence) computing massively in space. The project is based on the view that AI is a fundamental technology for tackling humanity's most difficult challenges.

Exploring a space-based, scalable AI infrastructure system design

https://research.google/blog/exploring-a-space-based-scalable-ai-infrastructure-system-design/

Project Suncatcher explores powering AI in space

https://blog.google/technology/research/google-project-suncatcher/

Google is not the only company working to build data centers in space; various startups are also working to make this a reality.

Plans to set up a data center in space are close to being realized - GIGAZINE

Google's Project Suncatcher seeks more efficient ways to harness the power of the sun, the solar system's largest energy source. In the right orbit, a solar panel can collect up to eight times more energy per year than on Earth and can generate power almost continuously, which reduces the need for batteries. Google sees space as potentially the ideal place to scale AI computing in the future, and says it will pursue this 'moonshot' while minimizing the impact on terrestrial resources such as land and water.

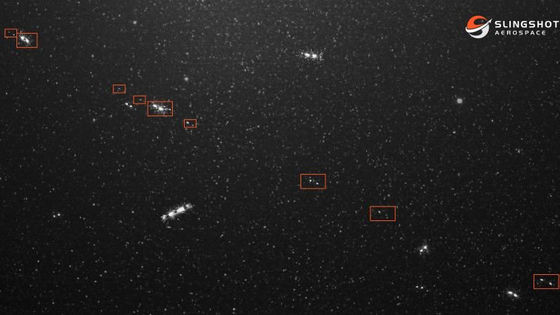

Project Suncatcher's concept is a constellation of satellites carrying Google's own AI accelerator chips, TPUs (Tensor Processing Units). The satellites would be launched into a dawn-dusk sun-synchronous orbit, which keeps them almost constantly out of Earth's shadow, maximizing power generation while keeping communication latency and launch costs low. The satellites would also be connected by high-bandwidth inter-satellite links, so the system would not need to be assembled in space. Instead, a modular design networks many small satellites together, which allows high scalability, with terawatt-scale computing as a long-term goal.

However, Google pointed out that there are several major technical challenges to realizing Project Suncatcher.

The first challenge is inter-satellite communication bandwidth. Large-scale machine learning clusters on the ground rely on ultra-high-bandwidth interconnects: hundreds of Gbps per chip and several Tbps for a link as a whole. Current commercial inter-satellite optical links, at only about 1 to 100 Gbps, fall far short of these requirements. The research team therefore proposes adopting dense wavelength division multiplexing (DWDM) and spatial multiplexing, techniques already used in terrestrial optical communications.
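The arithmetic behind multiplexing is simple: the aggregate rate of a link is roughly the number of wavelengths times the rate per wavelength times the number of independent spatial paths. The sketch below illustrates that bookkeeping; the function names, channel counts, and per-wavelength rates are hypothetical and are not figures from Google's design.

```python
import math


def aggregate_bandwidth_gbps(wavelengths, gbps_per_wavelength, spatial_channels=1):
    """Total link rate when DWDM channels are combined across parallel spatial paths."""
    return wavelengths * gbps_per_wavelength * spatial_channels


def wavelengths_needed(target_gbps, gbps_per_wavelength, spatial_channels=1):
    """Smallest number of DWDM wavelengths that reaches the target aggregate rate."""
    return math.ceil(target_gbps / (gbps_per_wavelength * spatial_channels))


# Hypothetical example: 8 wavelengths at 100 Gbps over 2 spatial paths.
print(aggregate_bandwidth_gbps(8, 100, 2))    # 1600 Gbps = 1.6 Tbps
# Hypothetical example: wavelengths needed for 10 Tbps with 4 spatial paths.
print(wavelengths_needed(10_000, 100, 4))     # 25
```

In practice every added channel also needs enough received optical power to be decoded, which is why the next step in the design is about distance.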

However, these techniques require received optical power thousands of times higher than that of conventional satellite links. Because received power falls off with the square of the distance, this requirement can be met by flying the satellites very close together, within a few kilometers or even a few hundred meters. In initial experiments on the ground, a single transmitter-receiver pair has already achieved 800 Gbps in each direction, 1.6 Tbps in total.
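The inverse-square relationship explains why short distances help so much: shrinking the link distance by a factor of k raises received power by a factor of k². The sketch below works through that scaling; the baseline distances are hypothetical, and it assumes idealized far-field free-space propagation, ignoring pointing error and other losses.

```python
import math


def received_power_gain(old_distance_m, new_distance_m):
    """Factor by which received power rises when the link distance shrinks (inverse-square law)."""
    return (old_distance_m / new_distance_m) ** 2


def max_distance_for_gain(baseline_distance_m, required_gain):
    """Largest distance that still delivers `required_gain` times the baseline received power."""
    return baseline_distance_m / math.sqrt(required_gain)


# Hypothetical baseline: a link designed for 1,000 km, moved to 1 km.
print(received_power_gain(1_000e3, 1e3))      # 1000000.0 (a million times more power)
# Needing 10,000x more power than a hypothetical 1,000 km link:
print(max_distance_for_gain(1_000e3, 1e4))    # 10000.0 m, i.e. about 10 km
```

A power requirement thousands of times higher therefore translates into distances tens to hundreds of times shorter, which is consistent with the kilometer-scale spacing the article describes.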

The second challenge is controlling such a dense constellation. The study simulated 81 satellites flying in a cluster with a radius of 1 km at a mean altitude of 650 km. In this orbit, the main perturbations are the non-uniformity of Earth's gravitational field and atmospheric drag, but the model suggests that this close formation, in which the distances between satellites oscillate by a few hundred meters, can be maintained in a sun-synchronous orbit with only modest station-keeping maneuvers.
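A common textbook starting point for modeling satellites flying in close formation is the Hill-Clohessy-Wiltshire (HCW) equations, which describe motion relative to a circular reference orbit. The sketch below is an illustration only, not Google's model: it uses standard Earth constants, ignores the gravity-field and drag perturbations the article names, and the function names are hypothetical. It shows why neighbor distances oscillate rather than stay fixed: a satellite offset radially by 100 m, with the along-track velocity chosen to cancel drift, traces a closed 2:1 ellipse around the reference point once per orbit.

```python
import math

# Standard constants (assumed here; not taken from Google's study).
MU_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6_378_137.0      # Earth's equatorial radius, m


def mean_motion(altitude_m):
    """Angular rate (rad/s) of a circular reference orbit at the given altitude."""
    a = R_EARTH + altitude_m
    return math.sqrt(MU_EARTH / a**3)


def cw_position(t, n, x0, y0, z0, vx0, vy0, vz0):
    """Closed-form HCW solution: x radial, y along-track, z cross-track offsets (m).

    Ignores J2, drag, and every other perturbation.
    """
    s, c = math.sin(n * t), math.cos(n * t)
    x = (4 - 3 * c) * x0 + (s / n) * vx0 + (2 / n) * (1 - c) * vy0
    y = (6 * (s - n * t) * x0 + y0 - (2 / n) * (1 - c) * vx0
         + ((4 * s - 3 * n * t) / n) * vy0)
    z = z0 * c + (vz0 / n) * s
    return x, y, z


def distance_range_over_one_orbit(altitude_m, x0, samples=1000):
    """Min/max distance from the reference point for a drift-free relative orbit."""
    n = mean_motion(altitude_m)
    vy0 = -2 * n * x0  # this choice cancels the secular along-track drift term
    period = 2 * math.pi / n
    dists = []
    for k in range(samples + 1):
        x, y, z = cw_position(period * k / samples, n, x0, 0.0, 0.0, 0.0, vy0, 0.0)
        dists.append(math.sqrt(x * x + y * y + z * z))
    return min(dists), max(dists)


n = mean_motion(650e3)
print(f"orbital period at 650 km: {2 * math.pi / n / 60:.1f} min")
print(distance_range_over_one_orbit(650e3, x0=100.0))
```

Under these idealized assumptions, the separation swings between about 100 m and 200 m every orbit of roughly 98 minutes. Real perturbations slowly distort such ellipses, which is what the small orbit-keeping maneuvers have to correct.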

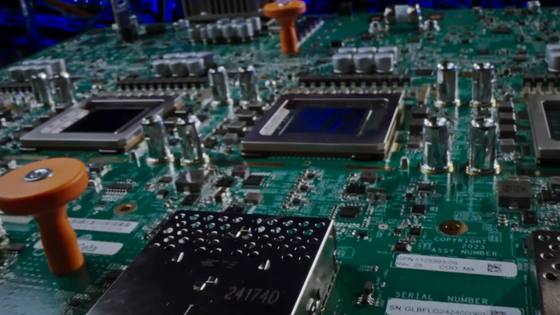

The third challenge is how well TPUs tolerate space radiation. In space, cumulative radiation exposure can gradually degrade devices, and a single high-energy particle can cause a momentary malfunction. Little was known about how cutting-edge chips such as the TPU would hold up. Google therefore tested its TPU v6e under a 67 MeV proton beam to simulate the radiation environment of a satellite in low Earth orbit.

Even the high-bandwidth memory (HBM), the most sensitive component, showed no irregularities until it had absorbed roughly three times the dose expected over a five-year mission. Google reports that the chips suffered no permanent damage even at higher doses, demonstrating that the TPU v6e is remarkably robust for space applications. The rate of uncorrectable HBM errors caused by single-event effects (SEEs) was also very low, estimated at about one per 10 million inferences in orbit, which is considered acceptable for inference workloads.
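To get a feel for what one uncorrectable error per 10 million inferences means, the sketch below treats errors as independent random events (a Poisson model, which is an assumption made here for illustration, not something the source states) and computes the expected error count and the chance that a batch of inferences completes without any error. The function names are hypothetical.

```python
import math

# Article's estimate: about 1 uncorrectable HBM error per 10 million inferences.
ERRORS_PER_INFERENCE = 1 / 10_000_000


def expected_errors(n_inferences, rate=ERRORS_PER_INFERENCE):
    """Mean number of uncorrectable errors over n inferences."""
    return n_inferences * rate


def prob_error_free(n_inferences, rate=ERRORS_PER_INFERENCE):
    """Probability of zero errors, assuming independent (Poisson) errors."""
    return math.exp(-expected_errors(n_inferences, rate))


print(expected_errors(1_000_000_000))         # about 100 errors per billion inferences
print(round(prob_error_free(1_000_000), 3))   # about 0.905 for a million inferences
```

For inference, an occasional corrupted result can often be detected or simply recomputed, which is one reason a rate like this can be tolerable where long training runs might be more sensitive.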

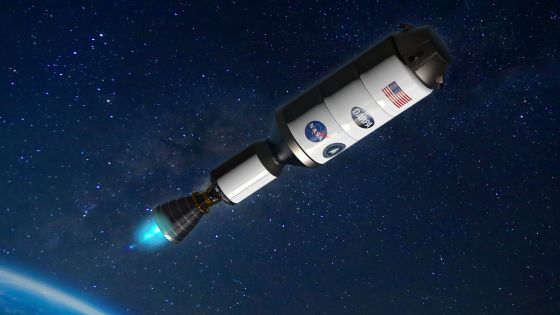

The fourth challenge is economic feasibility, particularly launch costs, which have historically been the biggest barrier to space-based systems. Based on SpaceX's historical launch pricing, Google identified a learning curve in which the price falls by approximately 20% for every doubling of cumulative launched mass. If this learning rate continues, Google predicts that launch costs to low Earth orbit could fall below $200 (approximately ¥30,000) per kilogram by the mid-2030s.
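A learning curve of this kind is often written as Wright's law: price scales as (cumulative mass)^b with b = log2(1 − learning rate), so each doubling multiplies the price by 0.8 when the learning rate is 20%. The sketch below implements that form; the starting price of $1,600/kg is purely hypothetical and is not a figure from Google's analysis.

```python
import math

LEARNING_RATE = 0.20  # article: about 20% price drop per doubling of cumulative launched mass


def price_after(p0, mass_ratio, learning_rate=LEARNING_RATE):
    """Price per kg once cumulative launched mass has grown by `mass_ratio` (Wright's law)."""
    b = math.log2(1 - learning_rate)
    return p0 * mass_ratio ** b


def doublings_to_reach(p0, target, learning_rate=LEARNING_RATE):
    """Number of cumulative-mass doublings needed to bring the price from p0 down to target."""
    return math.log(target / p0) / math.log(1 - learning_rate)


# Hypothetical starting point of $1,600/kg:
print(price_after(1_600, 2))                     # about 1280 after one doubling
print(round(doublings_to_reach(1_600, 200), 2))  # about 9.32 doublings to reach $200/kg
```

Because progress is tied to cumulative launched mass rather than to time, the mid-2030s estimate implicitly assumes that launch volume keeps growing quickly.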

At $200 per kg, the launch cost of a Starlink V2 Mini satellite, amortized over its service life and expressed per kilowatt of power, would be approximately $810 (approximately ¥122,000) per kW per year. This is comparable to the reported annual energy costs of terrestrial data centers in the United States, which range from approximately $570 (approximately ¥85,500) to $3,000 (approximately ¥450,000) per kW, suggesting that AI computing in space could become economically competitive.
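The comparison works by spreading the one-time launch cost evenly over the satellite's service life and dividing by the power it provides, which yields a $/kW/year figure comparable to a data center's energy bill. The sketch below shows that amortization. The satellite mass, power, and lifetime are hypothetical round numbers, not Starlink V2 Mini specifications, so the result is not meant to reproduce the $810 figure.

```python
def launch_cost_per_kw_year(price_per_kg, mass_kg, power_kw, lifetime_years):
    """Launch cost spread evenly over the service life, per kW of power ($/kW/year)."""
    return price_per_kg * mass_kg / (power_kw * lifetime_years)


def within_terrestrial_range(cost_per_kw_year, low=570.0, high=3_000.0):
    """Check against the US data-center energy-cost range quoted in the article ($/kW/year)."""
    return low <= cost_per_kw_year <= high


# Hypothetical satellite: 1,000 kg, 20 kW, 5-year life, launched at $200/kg.
cost = launch_cost_per_kw_year(200, 1_000, 20, 5)
print(cost)                             # 2000.0
print(within_terrestrial_range(cost))   # True
```

The formula makes the levers explicit: a lighter satellite, more watts per kilogram, or a longer service life all lower the amortized figure as much as cheaper launches do.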

As a next step, Google plans to launch two prototype satellites on a 'learning mission' by early 2027. The mission will test how TPU hardware operates in space and validate optical inter-satellite links for distributed machine learning tasks.

'Future engineering challenges that will need to be addressed include efficient thermal management in space, high-bandwidth communication with ground stations, and in-orbit system reliability and repair strategies. Ultimately, greater scale and tighter integration will expand what is possible in space, potentially leading to new satellite designs in which power collection, computing, and thermal management are tightly integrated,' Google said.
