Microsoft releases AI model 'WHAMM' that generates games in real time, and a demo using 'Quake II' can be played

On April 4, 2025, Microsoft released the World and Human Action MaskGIT Model (WHAMM), an AI model that generates game environments in real time in response to player actions. Alongside the release, Microsoft published a playable demo in which the AI reproduces the 1997 shooter Quake II.

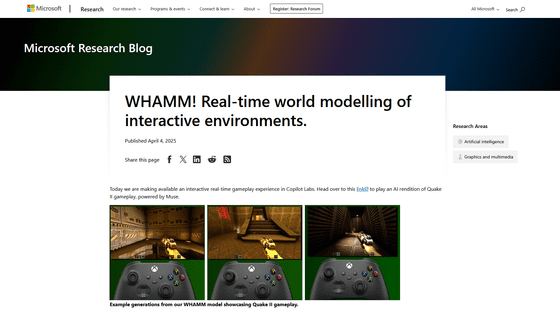

WHAMM! Real-time world modeling of interactive environments. - Microsoft Research

https://www.microsoft.com/en-us/research/articles/whamm-real-time-world-modelling-of-interactive-environments/

Microsoft has created an AI-generated version of Quake | The Verge

Microsoft releases AI-generated Quake II demo, but admits 'limitations' | TechCrunch

https://techcrunch.com/2025/04/06/microsoft-releases-ai-generated-quake-ii-demo-but-admits-limitations/

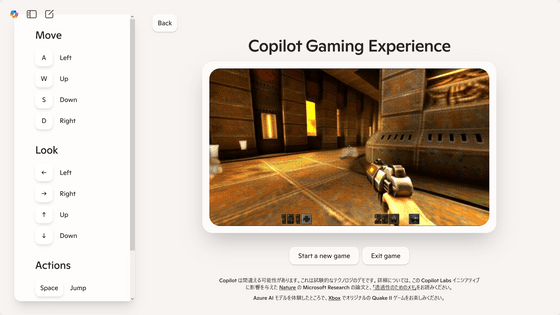

A demo of WHAMM is available at the following link:

Microsoft Copilot: Copilot Gaming Experience

https://copilot.microsoft.com/wham

If you are over 18 years old, click 'Agree' to start playing.

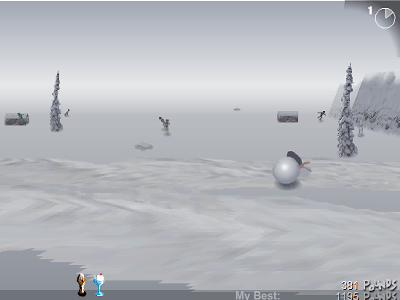

In actual play, the controls lag heavily, making it difficult to play comfortably.

You have 120 seconds to play, and when the time limit is reached, a 'Game Over' message will appear.

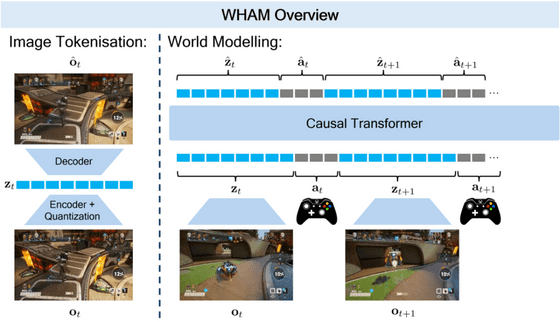

WHAMM, announced by Microsoft this time, is an AI model that can be called an improved version of the earlier WHAM-1.6B model.

While WHAM-1.6B could only generate about one frame per second, WHAMM can generate over 10 frames per second, allowing for real-time rendering that responds instantly to the player's keyboard and controller inputs.

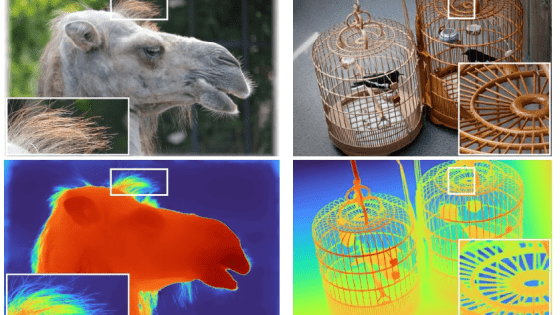

The earlier WHAM uses a modeling method that generates tokens one at a time, like large language models.

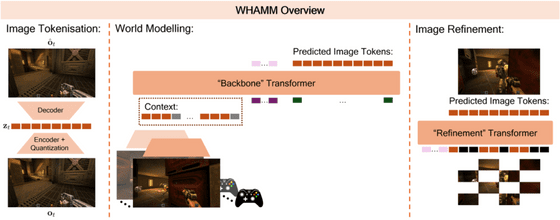

However, this modeling method had the problem that it was 'high quality but took a long time to generate.' Microsoft therefore adopted an architecture called 'MaskGIT' for WHAMM. MaskGIT predicts the tokens for the entire image at once, then masks some of those tokens and re-predicts them. Repeating this procedure gradually refines the image prediction.
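The predict, mask, and re-predict loop described above can be sketched in a few lines of Python. This is only an illustration of MaskGIT-style iterative decoding, not Microsoft's implementation: `toy_predict` is a hypothetical stand-in for the transformer that returns random tokens and confidences, `MASK` is an assumed sentinel value, and the cosine masking schedule is an assumption borrowed from the general MaskGIT approach rather than something the article states about WHAMM.

```python
import math
import random

MASK = -1  # hypothetical sentinel marking a token that is still masked


def toy_predict(tokens, vocab_size, rng):
    """Stand-in for the transformer: a (token, confidence) guess for every position.

    A real model would condition on the already committed tokens; this toy
    version ignores its input and guesses at random.
    """
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def maskgit_decode(num_tokens, vocab_size, steps, seed=0):
    """Fill all token positions in `steps` parallel passes instead of one pass per token."""
    rng = random.Random(seed)
    tokens = [MASK] * num_tokens
    for step in range(1, steps + 1):
        # 1. Predict every position of the image at once.
        preds = toy_predict(tokens, vocab_size, rng)
        # 2. Assumed cosine schedule: how many tokens stay masked after this pass.
        keep_masked = int(num_tokens * math.cos(math.pi / 2 * step / steps))
        # 3. Commit the most confident predictions; the rest stay masked
        #    and are re-predicted on the next pass.
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        n_commit = max(0, len(masked) - keep_masked)
        for i in masked[:n_commit]:
            tokens[i] = preds[i][0]
    return tokens


frame_tokens = maskgit_decode(num_tokens=16, vocab_size=8, steps=4)
print(frame_tokens)
```

In this toy setup, 16 tokens are filled in 4 model passes, whereas generating them one by one would take 16 passes. That reduction in passes is the trade-off the article attributes to MaskGIT.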

To achieve real-time responses with fewer computational steps, WHAMM employs a Backbone transformer with approximately 500 million parameters to generate initial predictions for tokens across the entire image, and a Refinement transformer with approximately 250 million parameters to refine the initial predictions. This makes it possible to run the MaskGIT step multiple times, ensuring better final predictions.

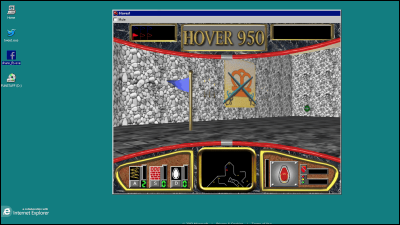

On the other hand, Microsoft also lists some current challenges for WHAMM.

- Enemy interaction: Enemy characters appear blurry, and combat damage is calculated inaccurately.

- Context length: At the time of writing, WHAMM's context length is 9 frames at 10 fps (0.9 seconds), so enemies and objects that stay out of view for longer than that are forgotten and disappear.

- Numerical accuracy: On-screen numbers, such as remaining health, are not always accurate.

- Range limitations: WHAMM was trained on only a portion of Quake II, so generation stops when the player reaches the end of that area.

- Latency: Because WHAMM is offered to anyone through a web browser, there is a delay between input and response.
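The context-length limitation in the list above comes down to simple arithmetic: 9 frames at 10 fps covers 0.9 seconds. The sketch below models the context as a fixed-size window of recent frames. The `simulate` helper and the `"enemy"` / `"empty"` frame labels are hypothetical and only illustrate why something that leaves the view for 9 frames drops out of what the model can see.

```python
from collections import deque

CONTEXT_FRAMES = 9  # context length given in the article
FPS = 10            # frame rate given in the article


def context_seconds(frames=CONTEXT_FRAMES, fps=FPS):
    """Length of the model's memory in seconds."""
    return frames / fps


def simulate(frames_with_enemy, frames_without_enemy):
    """Return True if an enemy frame is still inside the context window."""
    # A deque with maxlen silently discards the oldest frame when full.
    context = deque(maxlen=CONTEXT_FRAMES)
    for _ in range(frames_with_enemy):
        context.append("enemy")
    for _ in range(frames_without_enemy):
        context.append("empty")
    return "enemy" in context


print(context_seconds())
print(simulate(5, 8))  # enemy looked away from for 8 frames
print(simulate(5, 9))  # enemy looked away from for 9 frames
```

Once 9 consecutive frames without the enemy have been generated, no frame in the window contains it any more, so the model has nothing left to base the enemy's reappearance on.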

'The WHAMM model is an early experiment in real-time generated gameplay experiences. We're excited to explore what new interactive media these models enable,' Microsoft said.
