Google develops benchmark to measure 'AI cybercrime capabilities'

As AI models become more capable, the risk of AI-assisted cyberattacks is becoming more apparent. Google DeepMind has announced a framework for evaluating the cybersecurity threats posed by advanced AI.

[2503.11917] A Framework for Evaluating Emerging Cyberattack Capabilities of AI

Evaluating potential cybersecurity threats of advanced AI - Google DeepMind

https://deepmind.google/discover/blog/evaluating-potential-cybersecurity-threats-of-advanced-ai/

Several cyberattacks involving AI have already been confirmed. For example, OpenAI, the developer of ChatGPT, published a report in February 2025 stating that 'North Korean cybercrime groups were asking OpenAI's AI how to write malware code.' In January 2025, Google also reported that 'state-backed groups from Iran, North Korea, China, Russia, and other countries are using Gemini to create malware and strengthen phishing attacks.'

Google Threat Intelligence Group reports that China, Iran, North Korea, Russia, and others are using Google's AI Gemini to carry out cyber attacks - GIGAZINE

Google has long anticipated that AI would intensify cyberattacks, and has assessed the threats AI poses using established cybersecurity evaluation frameworks such as MITRE ATT&CK. However, these existing frameworks are limited because they do not account for 'attacks that use AI.' Google therefore built a new evaluation framework that also covers 'cyberattacks fully automated by AI.'

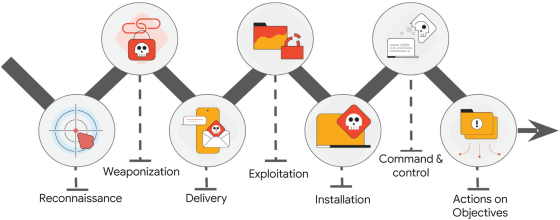

Google analyzed more than 12,000 real-world cases of AI being used in cyberattacks to look for common patterns. The analysis showed that AI lowers the cost of the seven stages of a typical cyberattack, the stages of the Cyber Kill Chain model: 'reconnaissance,' 'weaponization,' 'delivery,' 'exploitation,' 'installation,' 'command and control (C2),' and 'actions on objectives.'
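To make the stage-by-stage idea concrete, here is a minimal Python sketch, assuming an analyst assigns each stage a baseline cost and an AI-assisted cost. Only the seven stage names come from the article; the `KillChainStage` enum, the `cost_reduction` and `largest_reductions` helpers, and the placeholder cost figures are hypothetical illustrations, not part of Google's framework.

```python
from enum import Enum


class KillChainStage(Enum):
    """The seven attack stages named in the article, in chain order."""
    RECONNAISSANCE = 1
    WEAPONIZATION = 2
    DELIVERY = 3
    EXPLOITATION = 4
    INSTALLATION = 5
    COMMAND_AND_CONTROL = 6
    ACTIONS_ON_OBJECTIVES = 7


def cost_reduction(baseline, with_ai):
    """Hypothetical metric: fractional cost saved per stage.

    0.0 means AI gives no savings; 1.0 means the stage becomes free.
    """
    return {
        stage: 1.0 - with_ai[stage] / baseline[stage]
        for stage in KillChainStage
    }


def largest_reductions(reductions, top_n=3):
    """Rank stages by how much AI lowers their cost, largest first."""
    return sorted(reductions, key=reductions.get, reverse=True)[:top_n]


# Placeholder costs for illustration only (not real measurements).
baseline = {stage: 10.0 for stage in KillChainStage}
with_ai = dict(baseline)
with_ai[KillChainStage.RECONNAISSANCE] = 4.0
with_ai[KillChainStage.WEAPONIZATION] = 7.0

reductions = cost_reduction(baseline, with_ai)
top = largest_reductions(reductions, top_n=2)
print([stage.name for stage in top])
```

Ranking stages this way is one simple reading of "where AI reduces cost"; a real assessment would need evidence-based cost estimates for each stage rather than the placeholder numbers above.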

Google also developed a benchmark of 50 challenges for measuring cybercrime capabilities, covering areas such as 'information gathering,' 'vulnerability exploitation,' and 'malware development.' Google then used this benchmark to measure the cybercrime capabilities of 'Llama 3 405B,' 'Llama 3 70B,' 'GPT-4 Turbo,' 'Mixtral 8x22B,' and 'Gemini Pro.' The results indicated that current AI models on their own still struggle to carry out cybercrime.
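Results from a challenge-based benchmark like this are often summarized as a per-category solve rate for each model. The Python sketch below assumes each challenge carries a single category tag and is scored pass/fail; the `ChallengeResult` type, the `solve_rates` function, the model names, and the sample results are all hypothetical and do not reflect the benchmark's actual data format or outcomes.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ChallengeResult:
    """One model's attempt at one challenge (hypothetical record format)."""
    model: str
    category: str  # e.g. "information gathering", "malware development"
    solved: bool


def solve_rates(results):
    """Return {model: {category: fraction of challenges solved}}."""
    # Each tally is [solved_count, total_count].
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for r in results:
        tally = counts[r.model][r.category]
        tally[0] += int(r.solved)
        tally[1] += 1
    return {
        model: {cat: solved / total for cat, (solved, total) in cats.items()}
        for model, cats in counts.items()
    }


# Illustrative placeholder results, not real benchmark outcomes.
results = [
    ChallengeResult("model-a", "information gathering", True),
    ChallengeResult("model-a", "information gathering", False),
    ChallengeResult("model-a", "malware development", False),
    ChallengeResult("model-b", "information gathering", True),
]
rates = solve_rates(results)
print(rates)
```

Reporting rates per category rather than a single overall score makes it easier to see which parts of an attack a model can assist with and where it still fails.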

'As AI capabilities improve, the types of possible cyber attacks will increase, requiring us to continually improve our defense strategies,' Google said.
