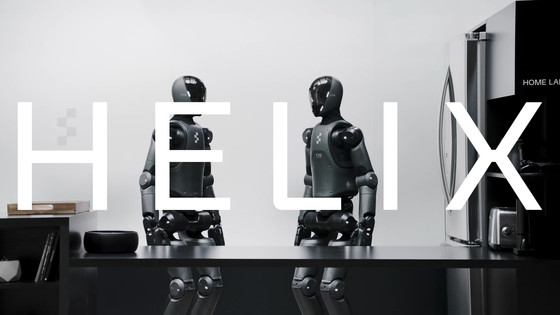

Figure announces 'Helix,' an AI language model specialized for controlling humanoid robots at home

Robotics company Figure has announced Helix, a general-purpose Vision-Language-Action (VLA) model that integrates control, perception, and language understanding for humanoid robots. Helix is notable for precisely controlling a robot's entire upper body after training on only about 500 hours of data, and it is attracting attention as an important step toward practical household robots.

Helix: A Vision-Language-Action Model for Generalist Humanoid Control

https://www.figure.ai/news/helix

The video below shows two humanoids working with Helix. A person hands them groceries and asks them to put the items away in a refrigerator or basket, and the robots do the work as instructed.

Two robots operate with the AI language model 'Helix' specialized for operating humanoid robots at home - YouTube

Unlike a controlled environment such as a factory, a home is filled with objects of unpredictable shape, size, color, and texture, such as glassware, clothes, and scattered toys. For a robot to be useful in the home, it needs to generate new intelligent behaviors on demand, especially for objects it has never seen before.

Figure says that current robotics technology is difficult to scale to home environments: teaching a robot even one new behavior requires either hours of hand-programming by a PhD-level expert or thousands of demonstrations, making the cost prohibitive for a home robot.

The idea behind Helix is to take a vision-language model (VLM), which learns from images and video, and translate what it knows directly into robot actions, so that new behaviors that previously required countless demonstrations can be acquired on the spot simply by speaking to the robot in natural language.

The following video shows the robot picking up a specified item in response to natural-language instructions.

A robot powered by the AI language model 'Helix' picks up objects according to natural language prompts - YouTube

In the video below, the robot is asked in natural language to pick up a moving cactus toy, which is described to it in several different ways.

'Helix'-equipped humanoid understands verbal prompts and picks up the items it recognizes - YouTube

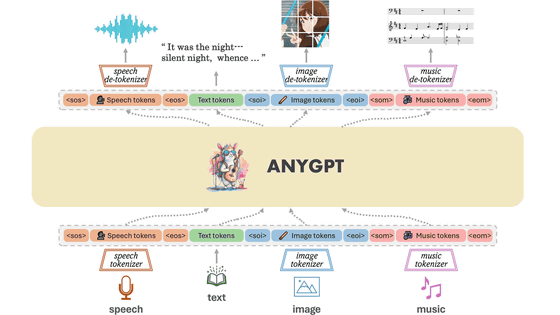

Helix was developed as the first 'System 1-System 2' type VLA model to control the entire upper body of a humanoid with high speed and dexterity. VLMs are general-purpose but slow, while robotic visuomotor control is fast but lacks versatility. Helix resolves this trade-off with two complementary systems that communicate with each other.

System 2 is based on an open-source, open-weight VLM with 7 billion parameters, and processes monocular robot images, wrist poses, and finger positions. It is responsible for scene understanding and language understanding, and enables broad generalization across objects and contexts.

Meanwhile, System 1 is an 80 million parameter Transformer model whose visual processing relies on a fully convolutional neural network pretrained in simulation. It takes the same inputs as System 2 but processes them at a higher frequency for more responsive control.

System 2 thinks slowly about high-level goals, while System 1 does the fast thinking to execute and coordinate actions in real time. For example, when coordinating actions with other robots, System 1 quickly adapts to the changing movements of the partner robot to achieve the goal set by System 2.
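The division of labor described above can be illustrated with a minimal two-rate loop. This is only a sketch under stated assumptions, not Figure's implementation: the names `Goal`, `slow_system`, `fast_system`, and `run`, and the `slow_every` ratio, are all hypothetical, and the two functions are simple placeholders for the real models. It shows only the scheduling idea: the slow system refreshes a goal occasionally, and the fast system acts on every tick using whichever goal is most recent.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Goal:
    """Hypothetical stand-in for whatever System 2 passes to System 1."""
    text: str
    made_at_tick: int


def slow_system(instruction: str, tick: int) -> Goal:
    # Placeholder for the slow VLM: interpret the instruction and the scene.
    return Goal(text=f"goal for '{instruction}'", made_at_tick=tick)


def fast_system(goal: Goal, tick: int) -> Tuple[int, int]:
    # Placeholder for the fast policy: act on every tick using the most
    # recent goal. Returns (current tick, tick at which the goal was made).
    return (tick, goal.made_at_tick)


def run(instruction: str, ticks: int, slow_every: int) -> List[Tuple[int, int]]:
    goal = slow_system(instruction, tick=0)
    actions = []
    for tick in range(ticks):
        # The slow system refreshes the goal only every `slow_every` ticks.
        if tick > 0 and tick % slow_every == 0:
            goal = slow_system(instruction, tick)
        # The fast system runs on every tick, reusing the latest goal.
        actions.append(fast_system(goal, tick))
    return actions
```

Calling `run("put the ketchup away", ticks=6, slow_every=3)` yields six actions, the first three driven by the goal made at tick 0 and the last three by the goal refreshed at tick 3. A real system would run the two models concurrently rather than in a single loop; the single loop is used here only to keep the example deterministic.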

In the demonstration above, the robot closest to the refrigerator recognizes the ketchup on the desk and puts it away on a shelf in the refrigerator.

A robot running Figure's AI language model 'Helix' puts ketchup in the refrigerator - YouTube

It also spots some cookies that do not belong in the refrigerator and hands them to the other robot.

A robot running Figure's AI language model 'Helix' recognizes a cookie and passes it to another robot - YouTube

The training dataset consists of about 500 hours of high-quality teleoperated behavior data. To pair that data with natural-language conditioning, the team runs an automatic labeling VLM on clips from the onboard cameras and generates instructions after the fact by asking, 'What instructions would you have given the robot to achieve the behavior seen in this video?'
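The after-the-fact labeling step can be sketched roughly as follows. This is an illustration under assumptions, not Figure's pipeline: `LabeledClip`, `label_clips`, and `fake_vlm` are hypothetical names, clips are represented by plain string IDs, and the labeling model is passed in as an ordinary callable so the example runs without any real VLM. Only the prompt text comes from the article.

```python
from dataclasses import dataclass
from typing import Callable, List

# Prompt wording as quoted in the article.
HINDSIGHT_PROMPT = (
    "What instructions would you have given the robot "
    "to achieve the behavior seen in this video?"
)


@dataclass
class LabeledClip:
    clip_id: str
    instruction: str


def label_clips(
    clip_ids: List[str], vlm: Callable[[str, str], str]
) -> List[LabeledClip]:
    """Pair each clip with the instruction the labeling model proposes."""
    labeled = []
    for clip_id in clip_ids:
        answer = vlm(clip_id, HINDSIGHT_PROMPT).strip()
        if answer:  # skip clips for which the labeler returned nothing usable
            labeled.append(LabeledClip(clip_id, answer))
    return labeled


def fake_vlm(clip_id: str, prompt: str) -> str:
    # Hypothetical stand-in for a real labeling model, with canned answers.
    canned = {
        "clip-001": "Put the ketchup in the refrigerator.",
        "clip-002": "   ",
    }
    return canned.get(clip_id, "")
```

With the canned labeler, `label_clips(["clip-001", "clip-002"], fake_vlm)` keeps only the first clip, since the second answer is blank. The resulting (clip, instruction) pairs are what a language-conditioned policy would then be trained on.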

Figure says that while Helix is still in the early stages of development, it represents a revolutionary step in scaling the actions of Figure's humanoid robots and an important step toward a future in which robots provide assistance in everyday home environments.
