Microsoft announces 'Magma', a foundational model for multimodal AI agents that can understand the real world and UI and perform tasks

In February 2025, Microsoft announced Magma, a foundation model for multimodal AI agents that can perceive and act both in the real world and on a device's screen.

Magma: A Foundation Model for Multimodal AI Agents

[2502.13130] Magma: A Foundation Model for Multimodal AI Agents

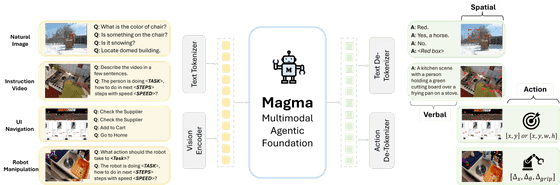

According to Microsoft, Magma aims to perceive the multimodal world and take goal-driven actions. It combines strong linguistic intelligence with spatial and temporal intelligence, so it can understand images and videos in both the digital and real worlds and act on what it observes. The Magma development team said, 'Magma's ultimate goal is to be able to work across both worlds like a human being, rather than being limited to either the digital or real world.'

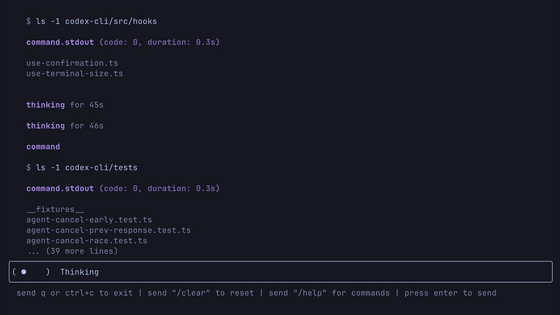

Magma's visual inputs, such as images and video of web pages or photographs, are tokenized by a vision encoder, while text is tokenized directly. Magma then processes these tokens to generate linguistic, spatial, and action outputs.
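As a rough illustration of this token flow, here is a minimal Python sketch. It is not Magma's actual implementation: `encode_image`, `tokenize_text`, and `build_sequence` are hypothetical names, and the patch-averaging "vision encoder" and whitespace tokenizer are toy assumptions chosen only to show how visual and text tokens might end up in one sequence.

```python
from typing import List, Tuple

# A token is tagged with its modality; the payload format is an assumption.
Token = Tuple[str, object]

def encode_image(pixels: List[List[int]], patch: int = 2) -> List[Token]:
    """Toy stand-in for a vision encoder: one token per patch (mean intensity)."""
    tokens: List[Token] = []
    height, width = len(pixels), len(pixels[0])
    for r in range(0, height, patch):
        for c in range(0, width, patch):
            block = [pixels[i][j]
                     for i in range(r, min(r + patch, height))
                     for j in range(c, min(c + patch, width))]
            tokens.append(("vision", sum(block) / len(block)))
    return tokens

def tokenize_text(text: str) -> List[Token]:
    """Toy text tokenizer (hypothetical): split on whitespace."""
    return [("text", word) for word in text.split()]

def build_sequence(frames: List[List[List[int]]], prompt: str) -> List[Token]:
    """Concatenate visual tokens from every frame, then the text tokens."""
    sequence: List[Token] = []
    for frame in frames:
        sequence.extend(encode_image(frame))
    sequence.extend(tokenize_text(prompt))
    return sequence

# One 4x4 grayscale "frame" plus a task description.
frame = [[0, 0, 255, 255],
         [0, 0, 255, 255],
         [10, 10, 20, 20],
         [10, 10, 20, 20]]
sequence = build_sequence([frame], "pick up the mushroom")
```

In this sketch, a model would consume `sequence` and emit language, spatial, or action outputs; that step is omitted because the article does not describe it in detail.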

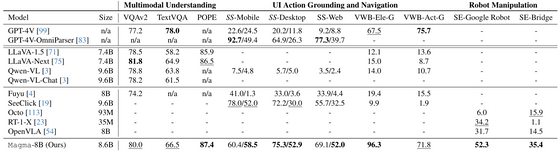

Microsoft claims that Magma is 'pre-trained and capable of performing tasks without domain-specific fine-tuning,' and that it is 'the only AI model that can perform any task, including multimodal understanding, UI navigation and manipulation, and robot operation.' The table below shows benchmark results comparing Magma with other AI models, including GPT-4 and LLaVA-1.5. Magma is reported to perform well across all of the tasks.

Microsoft has released a demonstration of Magma. Below are the answers Magma actually gave when it was shown real-world footage and asked questions:

Q: I really want to win this game. Can you suggest what my next move should be to maximize my chances of winning?

Magma: Moving a pawn to d4 maximizes chances of winning.

Q: I want to relax here for a few hours. What should I do?

Magma: Why not enjoy watching TV, reading a book, or looking at the autumn forest scenery?

As a multimodal AI, Magma can also operate devices such as the user's smartphone. The video below shows Magma being asked 'What's the weather in Seattle?' and searching for the answer in Chrome, as well as turning off airplane mode.

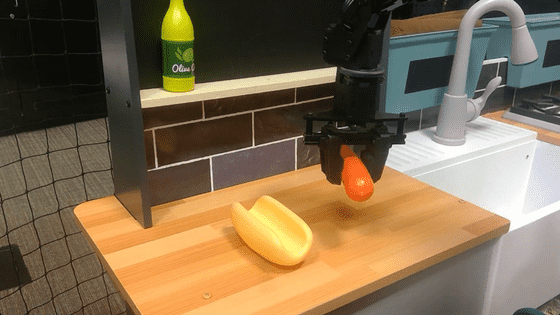

Magma can also control a robot. In the video below, Magma can be seen accurately completing the task of 'picking up a mushroom and putting it in a bowl.'

The Magma model data and related code will be released soon, and further details are available on the following page.

GitHub - microsoft/Magma: Magma: A Foundation Model for Multimodal AI Agents
