Two developers who have worked at Google for about 25 years discuss 'How Google plans to achieve artificial general intelligence (AGI)'

In recent years, various AI research labs have been working to build 'artificial general intelligence (AGI)' that, like humans, can efficiently acquire new skills and adapt to unfamiliar situations, and OpenAI has announced that it has

Jeff Dean & Noam Shazeer – 25 years at Google: from PageRank to AGI

https://www.dwarkeshpatel.com/p/jeff-dean-and-noam-shazeer

Dean, who joined Google in 1999, has worked on important systems in modern computing, including the MapReduce programming model, Bigtable, TensorFlow, and Gemini. Shazeer, who also joined Google in 1999, developed architectures and methods used in modern large language models, such as the Transformer, Mixture of Experts, and Mesh TensorFlow.

In 2007, Dean and his team trained an N-gram model for language modeling on 2 trillion tokens, and it is regarded as a forerunner of large language models. More recently, the two have been involved in training Gemini, and at the time of writing, about 25% of the code Google deploys is generated by coding AI.

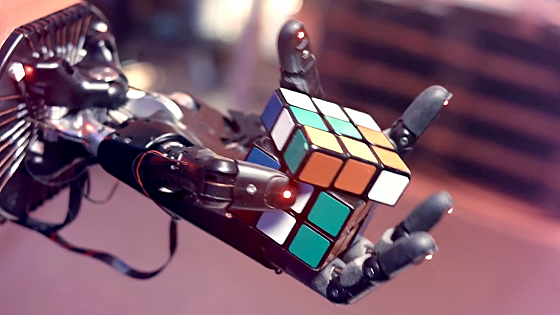

Google's goal is to 'organize the world's information and make it available to everyone,' and advanced AI and large language models are considered important tools for achieving it. Advanced AI covers more than retrieving information through a search engine: it also includes AI that writes code on behalf of users and solves problems that humans cannot. Shazeer said, 'Such advanced AI has the potential to create great value in the world.' Google also aims to extend large language models across modalities such as text, images, video, and audio, so that they can understand not only human-generated data but also data from non-human sources such as self-driving cars and genomic information.

Dean predicts that future AI models will be able to solve more complex problems. Specifically, where today's AI can split a task into 10 subtasks and carry them out with 80% accuracy, he speculated that future AI will be able to split a task into 100 to 1,000 subtasks and carry them out with 90% accuracy.

As for future prospects, the two made four points: 'models will have access to trillions of tokens, allowing them to perform more advanced tasks based on information from the entire internet and from individuals,' 'software development will become dramatically more efficient as AI assists with code generation and test execution,' 'models will continuously learn and improve,' and 'model performance will improve by taking many steps during inference, for example by incorporating techniques such as search.'

To achieve this, Google is focusing on accelerating the training and inference of large-scale language models through hardware development such as

At the same time, Google has a 'Responsible AI' policy to balance progress in AI research and development with ethical considerations, and has adopted several safety and mitigation measures to prevent AI from being misused for purposes such as spreading misinformation or hacking computer systems. The company is also applying engineering practices to build safe and secure systems for high-risk tasks, drawing on the way software is developed for aircraft. Furthermore, based on the idea that 'analyzing text is easier than generating text,' AI systems are being used to check their own output or that of other systems.

Dean and Shazeer said, 'Modern AI models still have issues such as hallucination, and there is room for improvement. By taking these safety measures, we hope that in the future AI will bring great benefits to society in areas such as education and healthcare.'
