'Nepenthes' traps crawlers that collect AI training data in an infinitely generated maze

Crawlers scrape data from the internet for use in training AI. Major AI companies offer ways to opt out of having your website's data used for training, but crawlers that circumvent these blocks and extract information from websites anyway have become a problem. Nepenthes can trap such crawlers in an infinitely generated maze.

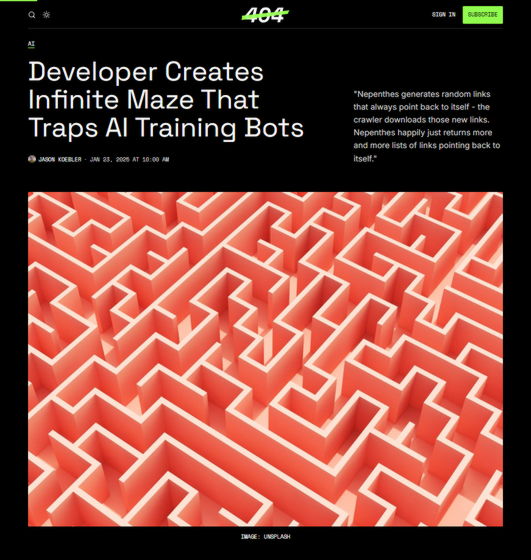

Developer Creates Infinite Maze That Traps AI Training Bots

https://www.404media.co/email/7a39d947-4a4a-42bc-bbcf-3379f112c999/

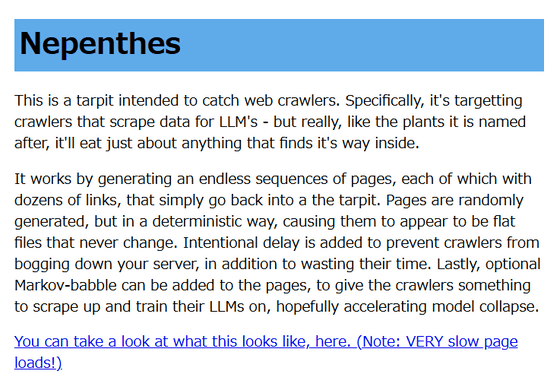

Nepenthes is a tarpit that creates infinite maze-like traps for crawlers that scrape data to train large language models (LLMs). The author warns that Nepenthes 'actually eats everything that gets inside.'

Nepenthes captures crawlers by endlessly generating web pages, each containing dozens of links. Because every generated link points to another page served by Nepenthes, a crawler can follow links indefinitely without ever collecting data that is useful for training AI. The author also warns, 'It is malicious software intended to cause harmful activity, so please do not deploy it if you are not completely convinced of what it is doing.'
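The page generation described above can be sketched roughly as follows. This is a minimal illustration, not the Nepenthes implementation: the `/maze/` prefix, the word list, the `make_page` function, and the choice to seed the random generator from the URL path (so a revisited URL returns the same page) are all assumptions made for the example.

```python
import hashlib
import random

MAZE_PREFIX = "/maze/"  # hypothetical URL prefix under which the trap is mounted
WORDS = ["amber", "basin", "cedar", "delta", "ember", "fjord", "grove", "heron"]


def make_page(path: str, n_links: int = 24) -> str:
    """Return an HTML page whose links all stay inside the maze."""
    # Assumption: seed from the path so the same URL always yields the same page.
    seed = int.from_bytes(hashlib.sha256(path.encode()).digest()[:8], "big")
    rng = random.Random(seed)
    links = []
    for _ in range(n_links):
        slug = "-".join(rng.choice(WORDS) for _ in range(3))
        target = f"{MAZE_PREFIX}{slug}-{rng.randrange(10**6)}"
        links.append(f'<a href="{target}">{slug}</a>')
    return "<html><body>\n" + "\n".join(links) + "\n</body></html>"
```

Every link begins with the maze prefix, so a crawler that follows any of them only ever requests another generated page.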

Nepenthes

https://zadzmo.org/code/nepenthes/

According to 404 Media, a technology news outlet that interviewed the creator of Nepenthes, the creator identified himself as 'Aaron B.' He said of Nepenthes, 'Nepenthes is less like flypaper and more like an infinite maze that holds a minotaur. The crawler is the minotaur that cannot escape. A typical crawler doesn't seem to have much logic. It downloads a URL, and if it finds a link to another URL, it downloads that too. Nepenthes always generates random links that point to itself, so even if the crawler downloads a new link, it can only download Nepenthes.' He added, 'Unless the crawler finds a way to detect that it is wandering in the Nepenthes labyrinth, it will continue to consume resources and wander around the labyrinth without doing anything useful.'
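The crawler behavior Aaron B describes (download a URL, then download every link found in it) can be simulated in a few lines. The sketch below is hypothetical: `fetch` is an in-memory stand-in for an HTTP request to a maze that only links to itself, and the `budget` cap exists only so the example terminates.

```python
import random
from collections import deque


def fetch(url: str, n_links: int = 5) -> list[str]:
    """Stand-in for an HTTP fetch: every maze page links only to more maze pages."""
    rng = random.Random(url)  # same URL, same links
    return [f"/maze/{rng.randrange(10**9)}" for _ in range(n_links)]


def naive_crawl(start: str, budget: int) -> tuple[int, int]:
    """Download a URL, queue every new link it contains, repeat until the budget runs out."""
    seen = {start}
    frontier = deque([start])
    fetched = 0
    while frontier and fetched < budget:
        url = frontier.popleft()
        fetched += 1
        for link in fetch(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    # Pages downloaded, and pages still waiting to be downloaded.
    return fetched, len(frontier)
```

Calling `naive_crawl("/maze/start", budget=200)` spends the whole budget on generated pages and still leaves a growing queue of unvisited maze URLs, which is the situation the quote describes: without some way to detect the maze, the crawler never runs out of work.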

The following page is a demo where you can see how Nepenthes works. Each page you access contains multiple generated links, so if you play the part of a crawler and click on them, you can watch new links appear every time. No matter how many links you click, you are sent to another new page with yet more links, which shows how a crawler would wander through meaningless pages forever. The page loads very slowly, but the author explains that this is intentional: 'it is not just for the crawler to waste time, but also because the delay is intentionally added to prevent the server from crashing.'
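One way to get the kind of deliberate slowness described above is to send the response in small pieces with a pause before each one. The sketch below is only an assumption about how such a delay could be added, since the article does not say how Nepenthes implements it; `drip`, `chunk_size`, and `delay_s` are hypothetical names.

```python
import time
from typing import Iterator


def drip(body: str, chunk_size: int = 64, delay_s: float = 0.5) -> Iterator[str]:
    """Yield the response body in small chunks, pausing before each one."""
    for start in range(0, len(body), chunk_size):
        # The pause occupies the crawler and also caps how fast the server
        # has to produce and send pages.
        time.sleep(delay_s)
        yield body[start:start + chunk_size]
```

A server that streams its response from a generator like this spends most of each request sleeping, so a crawler issuing many requests is slowed down without the server doing much work per request.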

zadzmo.org/nepenthes-demo/

https://zadzmo.org/nepenthes-demo/

If you want to block crawlers that scrape for AI training, you can use 'robots.txt' to ask them not to crawl your web pages. However, AI companies use different crawlers, crawler names change frequently, and some companies ignore 'robots.txt' directives altogether.
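The limits of name-based blocking can be illustrated with Python's standard `urllib.robotparser`. In this sketch the rules, the `example.com` URL, and the `RenamedBot` user agent are made up for the example; `GPTBot` is used only as a familiar AI crawler name.

```python
from urllib.robotparser import RobotFileParser

# A robots.txt that blocks one AI crawler by name (illustrative rules only).
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/articles/1"  # hypothetical page
blocked = parser.can_fetch("GPTBot", url)      # the named crawler is disallowed
renamed = parser.can_fetch("RenamedBot", url)  # hypothetical new name: no rule matches
```

Here `blocked` is `False` and `renamed` is `True`: a crawler operating under a name the site owner has not listed is not covered by the rule, and even a listed crawler is only restricted if it chooses to consult 'robots.txt' at all.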

Generative AI search engine Perplexity ignores crawler-preventing 'robots.txt' to extract information from websites - GIGAZINE

Aaron B explained that the crawler issue had drawn the attention of Internet users, which led him to develop Nepenthes. 'It's a work of art,' he said. 'It's an unleashing of pure anger at the way things are going, and expresses my disgust with the way the Internet has evolved into a panopticon of exploitation, the whole world has fallen into fascism, and the oligarchs are running everything. It's gotten so bad that boycotts and votes can't get us out of it, and we have to inflict real pain on the people in charge to bring about change.'

According to Aaron B, crawlers have accessed the public pages millions of times since Nepenthes was released. On Hacker News, a person claiming to be the CEO of an AI company said, 'This kind of trap can be easily avoided,' and boasted that Nepenthes is not a problem for crawlers. Aaron B countered, 'Looking at the access logs, we can see that even the all-powerful Google cannot avoid the Nepenthes trap.'

in Software, Posted by logu_ii