Developers victimized by AI crawlers are fighting back in creative and humorous ways

FOSS infrastructure is under attack by AI companies

https://thelibre.news/foss-infrastructure-is-under-attack-by-ai-companies/

Open source devs are fighting AI crawlers with cleverness and vengeance | TechCrunch

https://techcrunch.com/2025/03/27/open-source-devs-are-fighting-ai-crawlers-with-cleverness-and-vengeance/

A 'crawler' is a bot that collects information from websites on the Internet, and in recent years AI development companies have been using crawlers to gather data for training their AI models and generating their responses. According to Linux developer Niccolo Venerandi, free and open-source software (FOSS) projects, which rely on public collaboration and have fewer resources than private companies, are being heavily burdened by increasingly aggressive AI crawlers.

A major problem is that many AI crawlers ignore 'robots.txt', the text file that tells crawlers which parts of a site they may access. On March 17, 2025, Drew DeVault, CEO of the collaborative development platform SourceHut, wrote in a blog post that crawlers gathering data for large language models were ignoring robots.txt and causing service outages dozens of times a week.
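robots.txt is purely advisory: a well-behaved crawler downloads it and checks each URL before fetching, but nothing on the server enforces the rules. The minimal sketch below uses Python's standard `urllib.robotparser`; the rules and the `example.com` URL are hypothetical, and `GPTBot` is used only as an example crawler name.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: shut out one AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/repo/commit/abc123"
# A compliant crawler asks before every fetch...
print(parser.can_fetch("GPTBot", url))       # False
print(parser.can_fetch("Mozilla/5.0", url))  # True
# ...but the check runs entirely on the crawler's side, so a crawler that
# skips it, or lies about its user agent, is never stopped by the file.
```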

According to DeVault, the AI crawlers fetch every page and every commit in a repository, using random user agent strings and tens of thousands of IP addresses that each make only a single HTTP request. This disguises the crawlers as ordinary user traffic and hampers efforts to mitigate them.
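A naive per-IP rate limiter, a common first line of defense, shows why this tactic works. In the hypothetical sketch below, the 60-request limit, the addresses, and the single fixed window are simplifications for illustration, not SourceHut's actual setup. The same 10,000 requests are almost all caught when they come from one address, and none are caught when each comes from a different address.

```python
from collections import Counter

def count_blocked(request_ips: list[str], limit_per_ip: int) -> int:
    """Naive limiter: block every request beyond `limit_per_ip` from the same address."""
    seen = Counter()
    blocked = 0
    for ip in request_ips:
        seen[ip] += 1
        if seen[ip] > limit_per_ip:
            blocked += 1
    return blocked

LIMIT = 60  # hypothetical: 60 requests per address per window

# 10,000 requests from a single address (RFC 5737 documentation range).
one_source = ["203.0.113.5"] * 10_000
# The same 10,000 requests spread over 10,000 distinct addresses, one each.
distributed = [f"10.0.{i // 256}.{i % 256}" for i in range(10_000)]

print(count_blocked(one_source, LIMIT))   # 9940
print(count_blocked(distributed, LIMIT))  # 0
```

Random user agent strings defeat user-agent blocklists in the same way, so neither signal alone separates the crawler from real visitors.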

DeVault has little sympathy for the developers of large language models, pleading, 'Stop legitimizing large language models, AI image generators, GitHub Copilot, or any of this garbage.' The problem is not unique to SourceHut: 'All my system administrator friends are dealing with the same problem. I was asking one of them for feedback on a draft of an article, but the discussion was interrupted to deal with a wave of large language model bots hitting their server.'

In a January

'Blocking AI crawler bots is futile because they lie, change their user agent, and use residential IP addresses as proxies,' Iaso said.

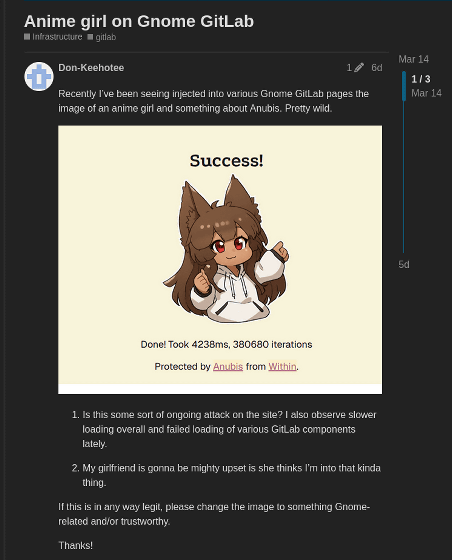

So Iaso developed a tool called 'Anubis' to deal with AI crawlers. Anubis is a proof-of-work system: before a visitor can access a service, their browser must complete a computational task, and only visitors who complete it are let through. Browsers operated by humans get through, while bots such as AI crawlers are blocked.
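The underlying idea is a hashcash-style puzzle: the server hands out a random challenge, the client searches for a number (a nonce) that makes the hash of the challenge plus the nonce start with a set number of zeros, and the server checks the answer with a single hash. The minimal sketch below assumes SHA-256 and a difficulty counted in leading hexadecimal zeros; the function names and the difficulty value are illustrative and not taken from Anubis's source. In a real deployment the solving step would run in the visitor's browser.

```python
import hashlib
import secrets

def solve(challenge: str, difficulty: int) -> tuple[int, str]:
    """Client side: try nonces until SHA-256(challenge + nonce) starts with enough zeros."""
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()
        if digest.startswith(target):
            return nonce, digest
        nonce += 1

def verify(challenge: str, nonce: int, difficulty: int) -> bool:
    """Server side: a single hash confirms the client's work."""
    digest = hashlib.sha256(f"{challenge}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)

DIFFICULTY = 4  # hypothetical: four leading hex zeros, about 65,536 hashes on average

challenge = secrets.token_hex(16)  # server issues a fresh random challenge per visitor
nonce, digest = solve(challenge, DIFFICULTY)
print(nonce, digest)
print(verify(challenge, nonce, DIFFICULTY))  # True
```

Verification costs one hash no matter how long the search took, so the server's cost stays flat while the client's cost is set by the difficulty. That is cheap for one person opening a page but adds up for a crawler fetching huge numbers of pages.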

The name comes from Anubis, the god of the underworld in Egyptian mythology. Iaso told the technology news outlet TechCrunch, 'Anubis weighed your soul (your heart), and if it was heavier than the feather, your heart was eaten and you died,' likening the way the tool judges incoming requests to Anubis's judgment of the dead.

While Anubis is running, and once the check is complete, it displays a cute illustration of a personified Anubis.

Anubis appears to be quite effective at keeping out AI crawlers, but it can make human visitors wait. There are also reports that access took 1 to 2 minutes when several people connected from the same IP address at the same time, because they were given tasks that take longer to complete. Still, Iaso said, 'In a fair world, this software doesn't need to exist,' adding, 'But we don't live in a fair world, and we need to take steps to protect our servers from bad actors who scrape them.' In other words, as long as malicious AI crawlers keep ignoring robots.txt, deploying Anubis is unavoidable.
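Why can the wait stretch to minutes? If, as in typical hashcash-style schemes, each unit of difficulty demands one more leading hexadecimal zero, each attempt succeeds with probability 1/16 per zero, so the expected number of attempts is 16 raised to the difficulty. The back-of-the-envelope sketch below assumes a hypothetical rate of one million hashes per second; both that rate and the difficulty levels are illustrative, not measurements of Anubis.

```python
def expected_hashes(difficulty: int) -> int:
    """Mean of a geometric distribution with success probability 16**-difficulty."""
    return 16 ** difficulty

def expected_seconds(difficulty: int, hashes_per_second: float) -> float:
    """Average time to solve a challenge at the given hash rate."""
    return expected_hashes(difficulty) / hashes_per_second

RATE = 1_000_000  # hypothetical hashes per second for one browser tab
for difficulty in range(3, 8):
    print(f"difficulty {difficulty}: ~{expected_hashes(difficulty):,} hashes, "
          f"~{expected_seconds(difficulty, RATE):.2f} s")
```

Each extra level multiplies the expected wait by 16, so raising the difficulty by only a few steps turns a sub-second check into one that takes minutes.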

Anubis was released on GitHub on March 19 and drew enough attention to collect 2,100 stars and 43 forks in the roughly 10 days up to the time of writing, suggesting that a significant number of FOSS developers are being plagued by AI crawlers.

GitHub - TecharoHQ/anubis: Weighs the soul of incoming HTTP requests using proof-of-work to stop AI crawlers

https://github.com/TecharoHQ/anubis/tree/main?tab=readme-ov-file

Besides Anubis, FOSS developers are dealing with AI crawlers in various ways. Some are blocking IP addresses from entire countries, such as Brazil and China, to keep AI crawlers out, while others are trying to fight back against the crawlers.
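Country-level blocking usually comes down to checking each client address against a list of network ranges. The sketch below uses Python's standard `ipaddress` module; the blocklist is hypothetical and uses RFC 5737 documentation ranges as stand-ins, since real per-country ranges would come from a GeoIP database or regional internet registry data.

```python
import ipaddress

# Hypothetical blocklist: placeholder documentation-only ranges (RFC 5737).
# A real deployment would load per-country ranges from GeoIP or registry data.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]

def is_blocked(client_ip: str) -> bool:
    """Return True if the client address falls inside any blocked network."""
    addr = ipaddress.ip_address(client_ip)
    # Membership tests between IPv4 and IPv6 objects simply return False.
    return any(addr in net for net in BLOCKED_NETWORKS)

print(is_blocked("198.51.100.42"))  # True
print(is_blocked("203.0.113.7"))    # False
```

The approach is coarse: it shuts out every legitimate visitor from the blocked ranges, while crawlers routed through residential proxies in other countries still get through.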

In January, a program called 'Nepenthes' was developed that generates an endless number of web pages, each containing dozens of links, trapping AI crawlers in loops of useless pages.
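The trap works because every generated page links only to more generated pages, so a crawler that follows links never reaches the end. The sketch below is a hypothetical illustration of that idea, not Nepenthes's implementation; the `maze_page` function, the word list, and the link count are made up. Seeding the generator from the URL path makes each URL return the same page on every visit, so the maze looks like an ordinary static site.

```python
import hashlib
import html
import random

WORDS = ["archive", "notes", "index", "draft", "report", "misc", "data", "log"]

def maze_page(path: str, links_per_page: int = 12) -> str:
    """Generate an HTML page whose links point only to further generated pages."""
    # Seed the generator from the URL path so the same URL always yields the same page.
    seed = int.from_bytes(hashlib.sha256(path.encode()).digest()[:8], "big")
    rng = random.Random(seed)
    items = []
    for _ in range(links_per_page):
        slug = "-".join(rng.choice(WORDS) for _ in range(3)) + f"-{rng.randrange(10**6)}"
        child = f"{path.rstrip('/')}/{slug}"  # every link leads one level deeper
        items.append(f'<li><a href="{html.escape(child)}">{slug}</a></li>')
    return (f"<html><body><h1>{html.escape(path)}</h1>"
            f"<ul>{''.join(items)}</ul></body></html>")

# A crawler that follows any link simply receives another page like this one.
page = maze_page("/maze")
print(page[:120])
```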

'Nepenthes' is developed to trap crawlers that collect data for AI training in an infinitely generated maze - GIGAZINE

Cloudflare also announced a tool called 'AI Labyrinth' in March that lures AI crawlers into a maze of AI-generated content.

Cloudflare announces 'AI Labyrinth' that traps AI crawlers in infinitely generated mazes - GIGAZINE

Venerandi also reported that some FOSS projects are receiving a growing number of AI-generated bug reports submitted to bug bounty programs. These reports may look legitimate at first glance but often contain the hallucinations typical of AI, so human developers end up wasting time investigating and analyzing them.

'Again, I would like to point out that these issues disproportionately affect the FOSS world. Not only do open source projects often have fewer resources than commercial products, but because they are community-driven, much more of their infrastructure is publicly exposed, making them more susceptible to AI crawlers and AI-generated bug reports,' Venerandi said.


in Software, Web Service, Security, Posted by log1h_ik