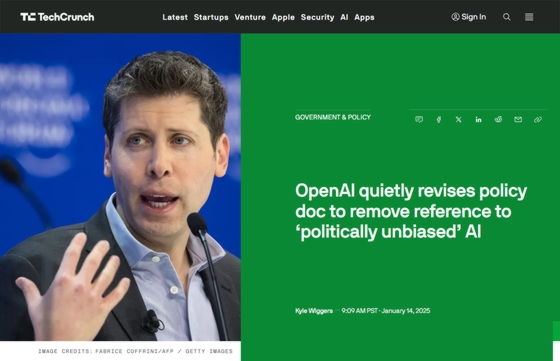

OpenAI quietly revises policy document to remove reference to 'politically unbiased' AI

On January 13, 2025 local time, OpenAI published an updated version of its policy document, the 'Economic Blueprint.'

OpenAI quietly revises policy doc to remove reference to 'politically unbiased' AI | TechCrunch

https://techcrunch.com/2025/01/14/openai-quietly-revises-policy-doc-to-remove-reference-to-politically-unbiased-ai/

Tibor Blaho, lead engineer at AIPRM, discovered that a statement had been deleted from the policy document. According to him, the sentence 'AI models should aim to be politically unbiased by default,' which appeared in the draft released on January 9, 2025, is missing from the version released on January 13.

OpenAI's Economic Blueprint has been updated on 2025-01-13 (compared to previous version from 2025-01-09) pic.twitter.com/MQnxP1OvPz

— Tibor Blaho (@btibor91) January 14, 2025

When TechCrunch asked for comment, an OpenAI spokesperson said, 'The statement was removed as part of an effort to streamline the document.' The spokesperson added that other documents, including OpenAI's model specifications, 'mention objectivity,' and emphasized that OpenAI's AI models are designed with objectivity in mind.

OpenAI releases ChatGPT model specifications, including a wide range of ChatGPT response rules such as 'sexual topics are not allowed, but scientific contexts are OK' and 'promotion of crime is not allowed, but providing information to prevent crime is OK' - GIGAZINE

TechCrunch points out that OpenAI's removal of the statement from its policy document 'makes it clear that the debate over biased AI has become a political minefield.'

Many of President-elect Donald Trump's allies, including Elon Musk and David Sacks, have accused AI chatbots of censoring conservative views. Sacks, in particular, has criticized OpenAI's ChatGPT, saying it is 'programmed to be woke' and does not tell the truth about politically sensitive subjects.

Musk has likewise criticized how AI models are trained, arguing that they absorb the views of the people building them in the San Francisco Bay Area: 'A lot of the AI that's being trained in the San Francisco Bay Area is adopting the philosophies of the people around them. So I think there's a woke, nihilistic philosophy built into these AIs.'

TechCrunch reported that 'Bias in AI is also a tough technical problem to solve. In fact, Musk's AI company xAI is struggling to create a chatbot that doesn't favor certain political views over others.'

A paper published in August 2024 also found that ChatGPT shows a liberal bias on topics such as immigration, climate change, and same-sex marriage.

OpenAI itself claims that this type of bias is a 'bug, not a feature.'


in Software, Posted by logu_ii