Bluesky is developing a system that lets users state whether they consent to AI training, but it is unclear whether AI developers will honor users' wishes

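The company that operates Bluesky has announced that it is developing a system that lets users state whether they consent to their posts being used for AI training.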

Brief update on our ongoing efforts to allow users to specify consent (or not) for AI training: 🧵

— Bluesky (@bsky.app) 2024-11-27T01:52:05.788Z

◆ Bluesky is building a system to clearly indicate whether users consent to AI training

On November 16, 2024, Bluesky stated that it 'will not use content posted by users for AI training.' However, because all posts on Bluesky are public by design, anyone can technically collect every Bluesky post and use it for AI training. In other words, the fact that Bluesky's operator does not train AI on posts does not mean those posts will never be used for AI training.

Unlike X (formerly Twitter), Bluesky has stated that it will not use posts to train AI - GIGAZINE

Then, on November 26, 2024, a dataset for AI training built from 1 million Bluesky posts was published on the AI platform Hugging Face. Although the dataset was no longer publicly available at the time of writing, the appearance of a concrete case of a third party using Bluesky posts for AI training came as a major shock to the community.

An example has emerged in which 'Bluesky's operator does not use user posts for AI training, but third parties can,' as a dataset of 1 million posts collected via Bluesky's API is made public on Hugging Face - GIGAZINE

Meanwhile, on November 27, 2024, Bluesky revealed that it is developing a mechanism that lets users indicate whether they consent to their posts being used for AI training. Bluesky is apparently considering a format similar to the 'robots.txt' file used by websites.

Bluesky is an open and public social network, much like websites on the Internet itself. Websites can specify whether they consent to outside companies crawling their data with a robots.txt file, and we're investigating a similar practice here.

— Bluesky (@bsky.app) 2024-11-27T01:52:05.790Z

Websites can use 'robots.txt' to state policies such as asking search engine crawlers not to collect certain pages. Bluesky is investigating a similar mechanism that would let users indicate whether they consent to their content being used for AI training. However, whether to honor users' wishes will be up to each developer.
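For readers unfamiliar with robots.txt, here is a minimal Python sketch of how a well-behaved crawler checks a site's robots.txt before fetching a page, using the standard library's `urllib.robotparser`. The robots.txt contents, the crawler names `ExampleAIBot` and `ExampleSearchBot`, and the URL are all hypothetical. The key point is that the check happens on the crawler's side: nothing stops a crawler that simply skips it, which is the same limitation Bluesky describes.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one made-up AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_crawl(user_agent: str, url: str) -> bool:
    """Return True if the robots.txt above permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/profile/alice/post/123"
    for agent in ("ExampleAIBot", "ExampleSearchBot"):
        # Honoring the answer is voluntary; a crawler that never calls
        # may_crawl() is not blocked by robots.txt itself.
        print(agent, may_crawl(agent, url))
```

Run as a script, this reports that `ExampleAIBot` may not fetch the page while `ExampleSearchBot` may.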

For example, this might look like a setting that allows Bluesky users to specify whether they consent to outside developers using their content in AI training datasets

— Bluesky (@bsky.app) 2024-11-27T01:52:05.791Z

Bluesky won't be able to enforce this consent outside of our systems. It will be up to outside developers to respect these settings
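Bluesky has not published a format for this setting, so the following is only a hypothetical sketch of what "respecting these settings" could mean for an outside developer assembling a training dataset. It assumes each account has a consent value (`True` for consent, `False` for refusal, missing for no stated preference); the `Post` type, the `consent_settings` mapping, the `require_opt_in` flag, and the handles are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List, Mapping, Optional


@dataclass
class Post:
    author: str  # hypothetical account handle
    text: str


def build_training_set(
    posts: Iterable[Post],
    consent_settings: Mapping[str, Optional[bool]],
    *,
    require_opt_in: bool = True,
) -> List[str]:
    """Keep only post texts whose authors' (hypothetical) consent setting allows AI training.

    consent_settings maps a handle to True (consents) or False (refuses);
    a missing handle means the user has not stated a preference.
    """
    kept = []
    for post in posts:
        consent = consent_settings.get(post.author)
        # Explicit consent always qualifies; an unstated preference qualifies
        # only when the developer chooses not to require an opt-in.
        if consent is True or (consent is None and not require_opt_in):
            kept.append(post.text)
    return kept


if __name__ == "__main__":
    posts = [
        Post("alice.example", "hello"),
        Post("bob.example", "no AI, please"),
        Post("carol.example", "hey"),
    ]
    settings = {"alice.example": True, "bob.example": False}
    print(build_training_set(posts, settings))
    print(build_training_set(posts, settings, require_opt_in=False))
```

Treating a missing setting as a refusal (`require_opt_in=True`) is the stricter choice; Bluesky has not said what the default would be. Either way, the filter only works if the developer chooses to run it.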

Bluesky says it is in ongoing discussions with engineers and lawyers and hopes to share further updates soon.

We're having ongoing conversations with engineers & lawyers and we hope to have more updates to share on this shortly!

— Bluesky (@bsky.app) 2024-11-27T01:52:05.792Z

◆ Bluesky version 1.95

Separately from the AI training announcement, Bluesky version 1.95 was released on November 28, 2024. Version 1.95 adds an option to show replies that are attracting attention first. Here is how to enable it.

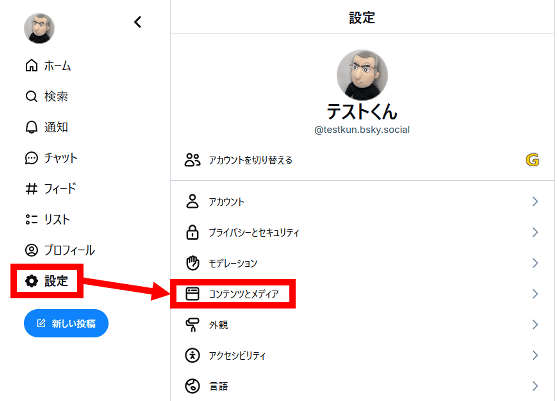

First, open the 'Settings' screen and click 'Content and Media'.

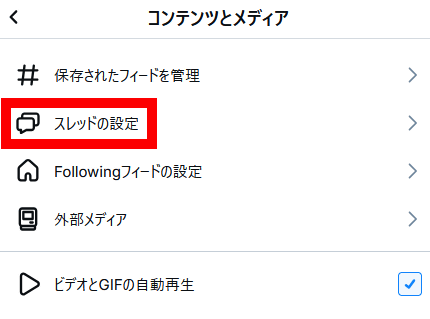

Click 'Thread Settings'.

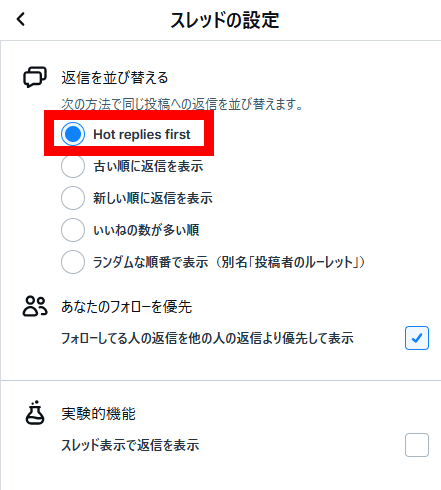

Select 'Hot replies first' to finish the setup. Replies that have recently received likes will now be shown first.


in Web Service, Posted by log1o_hf