NVIDIA announces 'QUEEN', an open source AI model that enables 'live streaming with freely changeable viewpoints', with compact file size and higher image quality than competitors

Semiconductor giant NVIDIA has announced QUEEN, an open source AI model for delivering 'video with freely changeable viewpoints' in applications such as video conferencing and live streaming. Because QUEEN generates high-quality scenes while keeping output bandwidth low, NVIDIA claims that it will 'bring live streaming to a new dimension.'

QUEEN: QUantized Efficient ENcoding of Dynamic Gaussians for Streaming Free-viewpoint Videos

https://research.nvidia.com/labs/amri/projects/queen/

NVIDIA Research Model Enables Dynamic Scene Reconstruction | NVIDIA Blog

https://blogs.nvidia.com/blog/neurips-2024-research/

Some content, such as cooking shows and live sports broadcasts, is more engaging when viewers can watch it from a viewpoint of their own choosing. NVIDIA developed QUEEN to make this kind of free viewpoint live broadcasting possible. The company says the model will be useful for building immersive streaming applications and notes that it could also be applied to remotely operating robots in warehouses and manufacturing plants.

Shalini De Mello, research director at NVIDIA, said of QUEEN, 'To stream free viewpoint video in near real time, 3D scenes must be reconstructed and compressed simultaneously. QUEEN balances factors such as compression ratio, image quality, encoding time and rendering time to create an optimized pipeline that sets a new standard for image quality and streamability.' She emphasized that the model produces high-quality images despite its low bandwidth.

Below is an example of a free viewpoint video output by QUEEN.

Part 1

Part 2

Part 3

Part 4

Free viewpoint video is usually built from footage captured by multiple cameras at different angles, for example in a multi-camera film studio or by a set of security cameras in a warehouse; capturing the scene from many angles is what allows the viewpoint to be moved freely. Conventional AI methods also have limitations: generating free viewpoint video for live streaming requires a large amount of memory, and reducing file size typically means sacrificing image quality.

QUEEN, by contrast, balances image quality against file size. It delivers high-quality output even for dynamic scenes containing sparks, flames or furry animals, keeps that output small enough to be sent easily from the host server to the client device, and renders the footage faster than conventional methods.

In most real-world environments, much of the scene remains static, so the vast majority of pixels in a video do not change from one frame to the next. To save computation time, QUEEN tracks these static regions and reuses their renders, concentrating instead on reconstructing the content that changes over time.
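The static-region idea can be illustrated with a short Python sketch. Because the project title refers to dynamic Gaussians, it assumes each frame is a set of per-Gaussian attribute vectors and that only elements which changed since the previous frame need to be sent. The function names, the array layout and the `threshold` cutoff are hypothetical; this is a simplified illustration of skipping unchanged content, not QUEEN's published method.

```python
import numpy as np

# Simplified illustration, NOT QUEEN's actual algorithm: each frame is a
# hypothetical (N, D) array of per-Gaussian attributes (e.g. position, color).
# Only rows that changed since the previous frame are encoded and sent.

def encode_frame_update(prev_attrs: np.ndarray, curr_attrs: np.ndarray,
                        threshold: float = 1e-3):
    """Return (indices, residuals) for rows whose attributes changed."""
    residuals = curr_attrs - prev_attrs
    # A row counts as static if no attribute moved by `threshold` or more.
    changed = np.abs(residuals).max(axis=1) >= threshold
    idx = np.flatnonzero(changed)
    return idx, residuals[idx]

def apply_frame_update(prev_attrs: np.ndarray, idx: np.ndarray,
                       residuals: np.ndarray) -> np.ndarray:
    """Rebuild the next frame, reusing every static row unchanged."""
    curr = prev_attrs.copy()
    curr[idx] += residuals
    return curr

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    prev = rng.normal(size=(10_000, 6))
    curr = prev.copy()
    curr[:200] += 0.05  # only 200 of 10,000 elements move
    idx, res = encode_frame_update(prev, curr)
    print(len(idx), "of", len(prev), "rows sent")  # 200 of 10000 rows sent
    assert np.allclose(apply_frame_update(prev, idx, res), curr)
```

Rows whose change falls below `threshold` are dropped entirely, so raising it shrinks each update at the cost of small reconstruction errors, a simple version of the size-versus-quality tradeoff the article describes.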

Using NVIDIA Tensor Core GPUs, the research team evaluated QUEEN on several benchmarks and found that it outperforms other methods for generating free-viewpoint video across a range of metrics. Given 2D video of the same scene shot from various angles, QUEEN typically needs less than five seconds of training time, and the resulting free-viewpoint video renders at approximately 350 frames per second (350 fps).

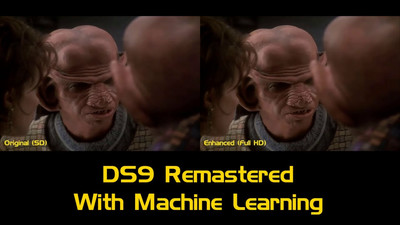

The image below shows a scene cut from free viewpoint videos output by 3DGStream (left) and QUEEN (right). Background quality is about the same, but the moving people are clearly rendered better by QUEEN. In addition, 3DGStream's output is 8.86 MB per frame, whereas QUEEN's is 2.18 MB, less than a quarter of the size.
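To put these per-frame sizes in streaming terms, the following Python sketch converts them into the sustained bitrate needed to send every frame. The 30 fps playback rate and the decimal megabyte (10^6 bytes) are assumptions chosen for illustration, not figures from NVIDIA.

```python
# Convert a per-frame payload size into the sustained bitrate a stream needs.
# Assumptions (not from the source): 1 MB = 10**6 bytes, and a 30 fps stream.

def stream_bitrate_mbps(megabytes_per_frame: float, fps: float) -> float:
    """Megabits per second needed to send every frame at the given rate."""
    return megabytes_per_frame * 8 * fps

FPS = 30  # hypothetical playback rate for illustration
sizes_mb = {"3DGStream": 8.86, "QUEEN": 2.18}  # per-frame sizes quoted above

for name, size in sizes_mb.items():
    print(f"{name}: {stream_bitrate_mbps(size, FPS):.1f} Mbit/s")
# 3DGStream: 2126.4 Mbit/s
# QUEEN: 523.2 Mbit/s

ratio = sizes_mb["3DGStream"] / sizes_mb["QUEEN"]
print(f"QUEEN frames are {ratio:.2f}x smaller")  # QUEEN frames are 4.06x smaller
```

The absolute numbers scale linearly with the assumed frame rate, but the roughly fourfold gap between the two methods does not depend on it.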

Thanks to its combination of compact file size and high image quality, QUEEN 'can support media broadcasts of concerts and sports matches by providing immersive virtual reality experiences and instant replays of key sports moments,' NVIDIA explains.

Details of QUEEN are scheduled to be presented at NeurIPS, the annual AI research conference, which opens in Vancouver, Canada on December 10, 2024 local time. QUEEN will be released as open source and made public on the following project page.

QUEEN: QUantized Efficient ENcoding of Dynamic Gaussians for Streaming Free-viewpoint Videos

https://research.nvidia.com/labs/amri/projects/queen/
