A communication expert explains the linguistic tricks that make us empathize with AI chatbots like ChatGPT and Gemini

AI chatbots such as ChatGPT and Gemini are built on large language models and can hold natural conversations with humans in a dialogue format. Although their output can contain mistakes and is not fully reliable, they can keep a conversation going in a way that feels empathetic to the user. Christian Augusto González-Arias, a postdoctoral researcher in the Department of Communication Sciences at the University of Santiago de Compostela in Spain, explains how the language of these conversations leads humans to empathize with AI chatbots.

ChatGPT's artificial empathy is a language trick. Here's how it works

https://theconversation.com/chatgpts-artificial-empathy-is-a-language-trick-heres-how-it-works-244673

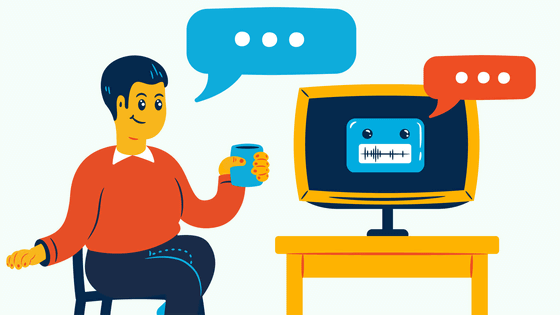

AI chatbots like ChatGPT and Gemini don't just use familiar words and phrases; they mimic human communication patterns. This lets them hold coherent, context-aware conversations and even express emotions such as humor and empathy.

One of the most distinctive properties of human language, says González-Arias, is its 'subjectivity,' which shows up in words and expressions that convey emotional nuance, express personal opinions, form judgments about events, and draw on contextual and cultural factors.

González-Arias argues that one of the archetypal markers of subjectivity in human language is the use of personal pronouns. First-person pronouns such as 'I' and 'we' let us express personal thoughts and experiences, while the second-person pronoun 'you' lets us address the other person and build a relationship between the two parties in a conversation.

For example, a user might ask, 'I'm cleaning up my house. How do I decide which items to keep, donate, and throw away?' The AI chatbot responds, 'That's a great question! Organizing your belongings can be a daunting task, but with a clear strategy, it'll be easier for you to make the decisions. Here are some ideas to help you decide what to keep, donate, and throw away.'

In this response, the AI chatbot does not explicitly use first-person pronouns such as 'I' or 'my,' but it takes on the role of advisor and guide, presenting the ideas as its own through phrases such as 'Here are some ideas.'

By taking on the role of advisor, the AI chatbot makes the user feel personally addressed, even without an explicit first-person pronoun. Offering 'some ideas to help you decide' also reinforces the impression that the chatbot has something of its own to give.

The word 'you,' meanwhile, addresses the user directly. It appears several times in the example above, in phrases such as 'your belongings' and 'easier for you to make the decisions.' By addressing the user personally, the AI chatbot aims to make them feel like an active participant in the conversation.

This kind of language is common in texts designed to engage the reader. A phrase like 'That's a great question!' not only casts the user's question in a positive light but also encourages further participation. A phrase like 'Organizing your belongings can be a daunting task' suggests a shared experience and creates the illusion of empathy by acknowledging the user's feelings.

By using the first person, AI chatbots simulate self-awareness and try to create the illusion of empathy; by using the second person and taking on an advisory role, they draw the user in and reinforce a sense of intimacy. Together, these techniques produce conversations that feel human-like, practical, and advice-oriented.

However, González-Arias cautions that 'this empathy comes from algorithms, not from actual understanding.' Growing accustomed to interacting with non-conscious entities that simulate our identity and personality may have long-term consequences, because these interactions can affect our personal, social, and cultural lives.

González-Arias predicts that 'as these technologies advance, it will become increasingly difficult to distinguish between a conversation with a real person and a conversation with an AI system.' This blurring of the line between artificial and human will affect how we understand authenticity, empathy, and conscious presence in communication. People may begin to treat AI chatbots as conscious entities, which could create confusion about what these systems can actually do.

Interacting with machines may also change our expectations of human relationships, which are shaped by emotion, misunderstanding, and complexity. Over the long term, repeated interactions with AI chatbots may lower our tolerance for conflict and our willingness to accept the natural imperfections of interpersonal relationships. 'As we become accustomed to quick, seamless, non-confrontational interactions, we may become more dissatisfied with our relationships with real people,' González-Arias speculated.

Moreover, long-term exposure to simulated human interaction raises ethical and philosophical dilemmas. By attributing human qualities such as feelings and intentions to these systems, we may begin to question the value of conscious life compared with a perfect simulation. This could even spark debates about robot rights and the value of human consciousness, González-Arias said.

'Interacting with non-sentient entities that mimic human identity has the potential to change our perception of communication, relationships, and identity,' said González-Arias. 'While these technologies can offer greater efficiency, it is important to be aware of their limitations and of their potential impact on both human-machine and human-to-human interactions.'


in Software, Posted by log1i_yk