Discord explains how it reduced bandwidth per user by 40%

Discord, a popular chat app with over 200 million monthly active users, has published a post on its official blog explaining how it reduced its bandwidth usage.

How Discord Reduced Websocket Traffic by 40%

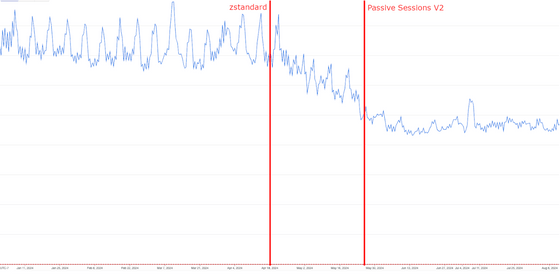

When a Discord client connects to a server, various data is sent and received in real time through a service called the gateway. In the second half of 2017, Discord introduced zlib compression, which shrank the transferred data to between one-half and one-tenth of its original size.

Zstandard, a compression method that appeared in 2015, has since become popular, and Discord decided to evaluate it. Zstandard promised higher compression ratios and shorter processing times than zlib, and it also supports dictionaries, which were expected to suit Discord's workload of exchanging a large number of messages with the same shape.

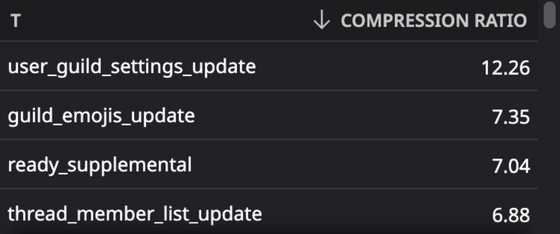

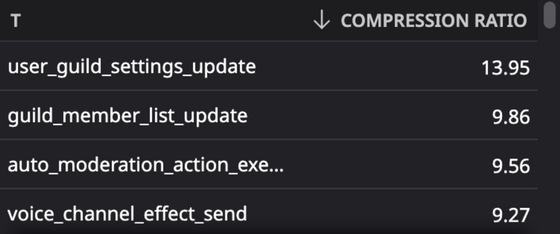

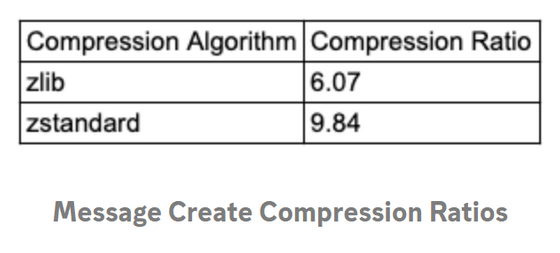

To check whether the theory held up in practice, the Discord development team compared the compression ratios of zlib and Zstandard on production payloads. The figure below shows the compression ratio of Zstandard.

The graph below shows the compression ratio of zlib. For the payload type 'user_guild_settings_update', where both methods achieved their highest compression ratio, zlib clearly outperformed Zstandard, and zlib also achieved a higher compression ratio for many other payload types.

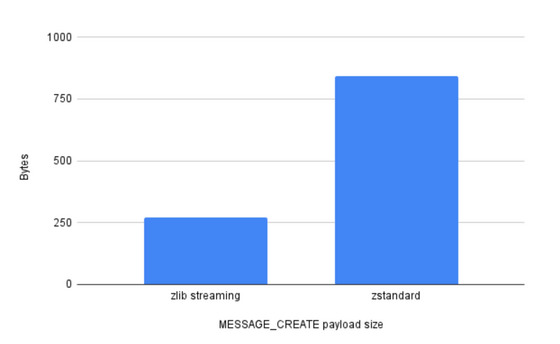

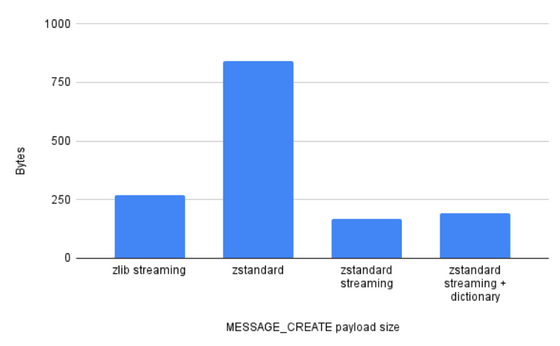

In particular, the payload type 'MESSAGE_CREATE' was more than three times larger when compressed with Zstandard than when compressed with zlib.

The development team investigated why Zstandard's compression ratio was worse than zlib's and found that zlib was being used with streaming compression, so it could draw on past payloads when compressing new ones, whereas Zstandard was being initialized for each message and could not make use of that history.
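The difference between per-message and streaming compression can be illustrated with a minimal sketch. It uses Python's standard `zlib` module as a stand-in, not Discord's Elixir code or Zstandard itself, and the payloads and function names are hypothetical examples invented for the illustration.

```python
import json
import zlib

# Hypothetical payloads that share the same shape (not real Discord data).
messages = [
    json.dumps({
        "t": "MESSAGE_CREATE",
        "d": {
            "channel_id": str(1000 + i),
            "author": {"id": str(42 + i), "username": f"user{i}"},
            "content": f"hello number {i}",
        },
    }).encode()
    for i in range(50)
]


def per_message_total(payloads):
    """Total bytes when every payload gets a fresh compressor (no history)."""
    return sum(len(zlib.compress(p)) for p in payloads)


def streaming_total(payloads):
    """Total bytes when one long-lived compressor handles every payload."""
    comp = zlib.compressobj()
    total = 0
    for p in payloads:
        # Z_SYNC_FLUSH emits everything needed to decode this payload now,
        # while keeping the compression window for the payloads that follow.
        total += len(comp.compress(p) + comp.flush(zlib.Z_SYNC_FLUSH))
    return total


def streaming_roundtrip(payloads):
    """Decode each flushed chunk with one long-lived decompressor."""
    comp = zlib.compressobj()
    decomp = zlib.decompressobj()
    decoded = []
    for p in payloads:
        chunk = comp.compress(p) + comp.flush(zlib.Z_SYNC_FLUSH)
        decoded.append(decomp.decompress(chunk))
    return decoded


if __name__ == "__main__":
    print("per-message:", per_message_total(messages), "bytes")
    print("streaming:  ", streaming_total(messages), "bytes")
```

Because later payloads repeat keys and structure already seen in earlier ones, the long-lived compressor can refer back to that history, which a compressor that is reset for every message cannot do.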

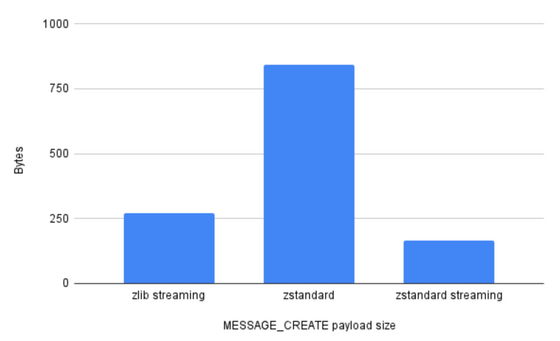

So the Discord development team forked the Elixir/Erlang library 'ezstd' to add streaming support and, in the spirit of open source, also contributed the change upstream.

Using Zstandard with streaming compression, the team achieved compression ratios that far surpassed those of zlib.

The team also succeeded in shrinking 'MESSAGE_CREATE', which had been a particular problem, to below its zlib-compressed size.

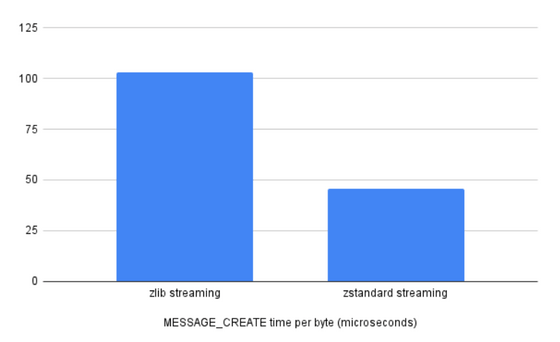

Processing time per byte also fell, from about 100 microseconds with zlib to about 45 microseconds with Zstandard.

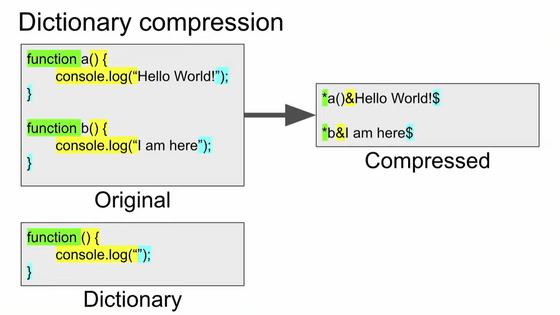

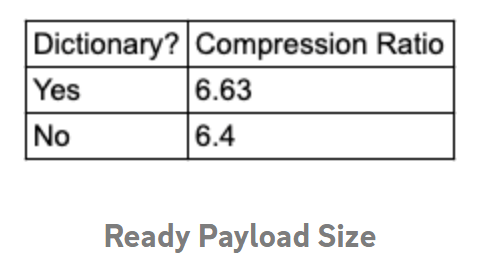

The team also tried to improve the compression ratio further by introducing dictionaries. For example, for 'READY', a short payload sent when a connection to a server is completed, the compression ratio rose only slightly, from 6.4 to 6.63. Because the 'READY' payload has a fixed structure, it should have been especially likely to benefit from a dictionary.

When the team checked other payloads, the dictionary proved surprisingly ineffective. For the 'MESSAGE_CREATE' payload, the amount of data actually increased when the dictionary was used. Further optimization might have improved the compression ratio, but the team decided not to use dictionaries because the small gain did not justify the added implementation complexity.
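To make the idea of dictionary compression concrete, here is a minimal sketch of how a preset dictionary works. It uses the `zdict` parameter of Python's standard `zlib` module as a stand-in for Zstandard dictionaries, which are normally trained from sample data. The dictionary contents, the payload, and the function names are hypothetical, and the sketch compresses a single standalone payload, so it does not reproduce the streaming setup the team measured.

```python
import zlib

# Hypothetical preset dictionary: a sample of the structure both sides
# agree on in advance (a real Zstandard dictionary would be trained).
PRESET = (
    b'{"t":"READY","d":{"v":9,"user":{"id":"","username":""},'
    b'"session_id":"","guilds":[]}}'
)

# Hypothetical payload with the same shape as the dictionary sample.
payload = (
    b'{"t":"READY","d":{"v":9,"user":{"id":"42","username":"alice"},'
    b'"session_id":"abc123","guilds":[]}}'
)


def compress_plain(data: bytes) -> bytes:
    """Compress without any shared dictionary."""
    comp = zlib.compressobj()
    return comp.compress(data) + comp.flush()


def compress_with_dict(data: bytes, zdict: bytes = PRESET) -> bytes:
    """Compress with a preset dictionary the receiver must also hold."""
    comp = zlib.compressobj(zdict=zdict)
    return comp.compress(data) + comp.flush()


def decompress_with_dict(blob: bytes, zdict: bytes = PRESET) -> bytes:
    """Decompress data that was compressed with the same dictionary."""
    decomp = zlib.decompressobj(zdict=zdict)
    return decomp.decompress(blob) + decomp.flush()


if __name__ == "__main__":
    print("plain:     ", len(compress_plain(payload)), "bytes")
    print("dictionary:", len(compress_with_dict(payload)), "bytes")
```

The sketch also hints at the cost the team wanted to avoid: sender and receiver must both hold exactly the same dictionary, which is extra state to distribute and keep in sync.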

The development team also tried to improve compression further by using the memory that sits idle during the hours of the day when there are fewer users. They introduced a mechanism that doubled Zstandard's memory usage whenever a certain amount of spare memory was available, but it did not work as well as the team had expected.

The team discovered that more memory was being allocated to each connection than was actually used, and that the amount of free memory was being calculated incorrectly. They tried to optimize the memory allocation but concluded that the expected benefit did not justify the implementation complexity, so they reverted to the original allocation settings.

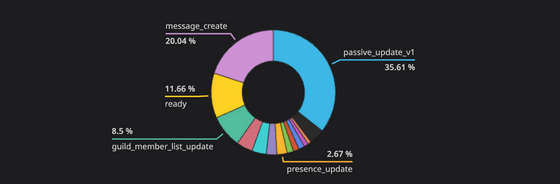

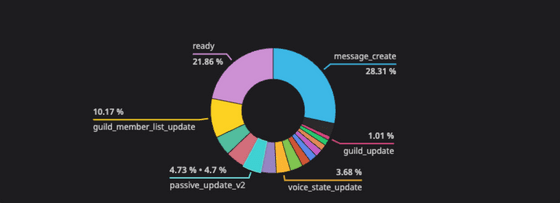

During the investigation, the team discovered that a large portion of the bandwidth was being consumed by a payload called 'passive_update_v1', which was used to synchronize the state of servers that users had not opened for a while. Whenever any piece of information that is kept in sync even during inactivity, such as channels or users, was updated, this payload resent all of the data.

So the team developed 'passive_update_v2', which sends only the differences. This cut the share of bandwidth consumed by inactive servers from 35% to 5%, resulting in a 20% reduction in overall bandwidth.
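The contrast between resending everything and sending only the differences can be sketched as follows. This is an illustration of the general technique, not Discord's actual payload format: the state layout, field names, and function names are all hypothetical.

```python
def full_snapshot(current: dict) -> dict:
    """v1-style update (hypothetical): resend the entire state."""
    return dict(current)


def delta_update(previous: dict, current: dict) -> dict:
    """v2-style update (hypothetical): send only what changed."""
    updated = {
        key: value
        for key, value in current.items()
        if key not in previous or previous[key] != value
    }
    removed = [key for key in previous if key not in current]
    return {"updated": updated, "removed": removed}


def apply_delta(previous: dict, delta: dict) -> dict:
    """Rebuild the current state on the client from the old state and a delta."""
    merged = {k: v for k, v in previous.items() if k not in delta["removed"]}
    merged.update(delta["updated"])
    return merged


# Hypothetical channel state for a server the user has not opened recently.
previous = {
    "c1": {"name": "general", "last_message_id": 100},
    "c2": {"name": "random", "last_message_id": 200},
    "c3": {"name": "old-channel", "last_message_id": 300},
}
current = {
    "c1": {"name": "general", "last_message_id": 100},
    "c2": {"name": "random", "last_message_id": 205},
    "c4": {"name": "new-channel", "last_message_id": 1},
}

if __name__ == "__main__":
    print("snapshot entries:", len(full_snapshot(current)))
    print("delta:", delta_update(previous, current))
```

In this example the unchanged channel `c1` is never retransmitted, which is where the savings come from when most of a server's state stays the same between updates.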

By implementing both Zstandard and passive_update_v2, Discord reduced bandwidth usage per client by about 40% on average.

in Software, Web Service, Posted by log1d_ts