Cloudflare reduces CPU usage by 1% by optimizing code that runs 35 million times per second

Cloudflare, which develops CDN and DDoS protection services, handles a huge number of HTTP requests: more than 60 million per second on average. In a blog post, the company said it had 'reduced CPU usage by more than 1%' by reexamining how those HTTP requests are processed.

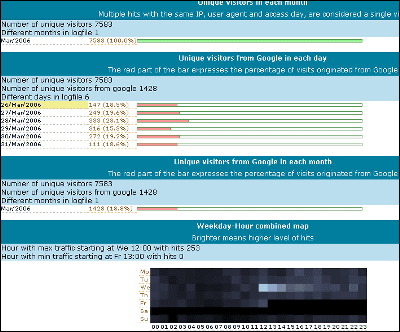

A good day to trie-hard: saving compute 1% at a time

Cloudflare's services, which are built on the Pingora framework, must remove headers that are used only inside Cloudflare before forwarding a user's request to the actual destination server, known as the 'origin.'

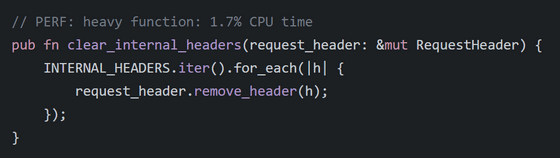

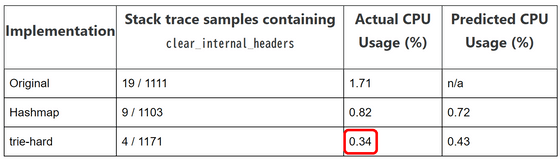

To remove these headers, the 'pingora-origin' service, which processes requests bound for origin servers, runs the 'clear_internal_headers' function below on every external request. This single function consumed more than 1.7% of pingora-origin's total CPU time. With 35 million external requests per second, that cost was significant, so the engineering team decided to optimize the function.

The team first measured the function with a benchmarking library called

The original code looped over the internal headers table, which has more than 100 entries, and for each entry repeated the process of 'find this header in the request and remove it.'
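The loop structure described above can be sketched in Python as follows. This is a hypothetical model, not Cloudflare's Rust code: `INTERNAL_HEADERS` holds 100 made-up names, headers are assumed to be a list of `(name, value)` pairs with lowercase names, and `clear_internal_headers_original` is an illustrative name. The function counts name comparisons to show that the work scales with the size of the internal list, not with the size of the request.

```python
# Hypothetical internal header list: 100 made-up names standing in for
# Cloudflare's real list of internal headers.
INTERNAL_HEADERS = [f"x-internal-{i}" for i in range(100)]


def clear_internal_headers_original(headers):
    """Remove internal headers by looping over the INTERNAL list.

    `headers` is a list of (name, value) tuples with lowercase names.
    For every internal name the whole request is scanned, so the work is
    roughly len(INTERNAL_HEADERS) * len(headers) comparisons.
    Returns the filtered header list and the number of comparisons made.
    """
    comparisons = 0
    remaining = list(headers)
    for internal in INTERNAL_HEADERS:
        # "Find and remove": scan the request for this one internal name.
        kept = []
        for name, value in remaining:
            comparisons += 1
            if name != internal:
                kept.append((name, value))
        remaining = kept
    return remaining, comparisons


request = [("host", "example.com"), ("accept", "*/*"), ("x-internal-7", "1")]
cleaned, work = clear_internal_headers_original(request)
print(cleaned)  # [('host', 'example.com'), ('accept', '*/*')]
print(work)     # 208
```

Even this three-header request costs 208 comparisons, because all 100 internal names are searched for.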

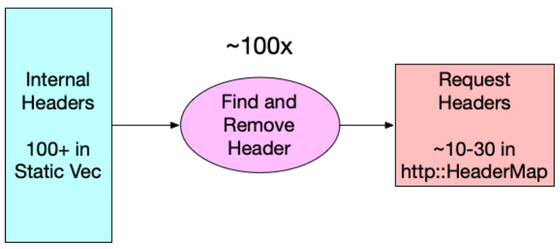

The engineering team noticed that most requests carry only 10 to 30 headers in total, far fewer than the number of internal headers. By inverting the loop to 'check each header in the request against the internal headers and remove it if it matches,' they cut the function's run time to 1.53 µs, making it 2.39 times faster with this change alone.
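A minimal Python sketch of the inverted loop, assuming the internal header names are stored in a hash-based set. The 100 names in `INTERNAL_HEADERS` and the function name `clear_internal_headers_inverted` are hypothetical, and a Python `frozenset` merely stands in for whatever set type the Rust code uses. The point is that the number of lookups now equals the number of request headers.

```python
# Hypothetical internal header set: 100 made-up names.
INTERNAL_HEADERS = frozenset(f"x-internal-{i}" for i in range(100))


def clear_internal_headers_inverted(headers):
    """Keep only headers whose name is not an internal header.

    Loops over the REQUEST headers (typically 10 to 30) and does one set
    lookup per header, so the number of lookups equals len(headers)
    regardless of how many internal headers exist.
    Returns the filtered list and the number of lookups performed.
    """
    lookups = 0
    kept = []
    for name, value in headers:
        lookups += 1
        if name not in INTERNAL_HEADERS:  # hash-based membership test
            kept.append((name, value))
    return kept, lookups


request = [("host", "example.com"), ("accept", "*/*"), ("x-internal-7", "1")]
cleaned, work = clear_internal_headers_inverted(request)
print(cleaned)  # [('host', 'example.com'), ('accept', '*/*')]
print(work)     # 3
```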

Next, the team set out to speed up the step of checking each header against the internal headers. In big-O notation, a hash table lookup takes O(1) time, which would seem to leave no room for further speedup; in reality, though, computing the hash takes O(L) time, where L is the length of the key.
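To see why a hash lookup costs O(L), here is 64-bit FNV-1a, a simple, well-known non-cryptographic hash chosen purely for illustration; it is not necessarily the hasher Cloudflare uses. Its loop runs once per byte of the key, so the hash must be computed over the whole key before the table can even be consulted, whether or not the key turns out to be present.

```python
# Standard 64-bit FNV-1a parameters.
FNV_OFFSET_BASIS = 0xCBF29CE484222325
FNV_PRIME = 0x100000001B3
MASK64 = (1 << 64) - 1


def fnv1a_64(key: bytes):
    """Return (64-bit FNV-1a hash of key, number of bytes processed)."""
    h = FNV_OFFSET_BASIS
    steps = 0
    for byte in key:  # one iteration per byte: O(L) for a key of length L
        h ^= byte
        h = (h * FNV_PRIME) & MASK64  # keep the state within 64 bits
        steps += 1
    return h, steps


for name in (b"host", b"accept-encoding"):
    digest, steps = fnv1a_64(name)
    print(name.decode(), hex(digest), steps)
```

The step counts (4 and 15) grow with the header name's length, which is the cost a trie can avoid on a miss.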

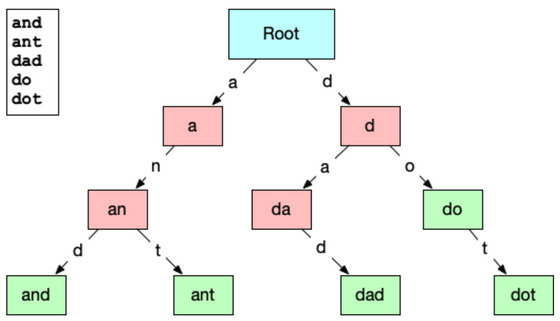

After much deliberation, the engineering team decided to adopt a trie. A trie matches a string character by character from the beginning, which lets it reject strings that are not in its word list quickly. For example, if the word list consists of 'and,' 'ant,' 'dad,' 'do,' and 'dot,' any word that does not start with 'a' or 'd' can be rejected immediately as 'not in the word list.'
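The example above can be reproduced with a minimal dictionary-of-children trie in Python. This is a generic textbook trie, not Cloudflare's own implementation; `TrieNode`, `build_trie`, and `contains` are hypothetical names. `contains` also reports how many characters it examined, which makes the early exit visible.

```python
class TrieNode:
    __slots__ = ("children", "is_word")

    def __init__(self):
        self.children = {}    # next character -> TrieNode
        self.is_word = False  # True if a word in the list ends here


def build_trie(words):
    """Insert every word, sharing nodes for common prefixes."""
    root = TrieNode()
    for word in words:
        node = root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.is_word = True
    return root


def contains(root, word):
    """Return (found, chars_examined); stops at the first missing edge."""
    node = root
    for i, ch in enumerate(word):
        node = node.children.get(ch)
        if node is None:
            return False, i + 1  # rejected after examining i + 1 characters
    return node.is_word, len(word)


trie = build_trie(["and", "ant", "dad", "do", "dot"])
print(contains(trie, "dot"))    # (True, 3)
print(contains(trie, "zebra"))  # (False, 1)
print(contains(trie, "da"))     # (False, 2)
```

"zebra" is rejected after a single character because the root only has children for 'a' and 'd', while "da" is a valid prefix but not a complete word.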

Because a trie narrows the search space so quickly, looking up a string that is not in the list takes O(log(L)) time. Looking up a string that is in the list takes O(L) time, the same as computing a hash, but since fewer than 10% of request headers match an internal header, most lookups are misses, so the trie is faster overall.
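Combining the two costs above gives a back-of-the-envelope model of the expected cost per header, ignoring constant factors. Here p is the fraction of request headers that are internal (a hit); the model itself is an illustration, not a formula from Cloudflare's post:

$$
\mathbb{E}[T_{\text{trie}}] \approx p \cdot L + (1 - p) \cdot \log L,
\qquad
T_{\text{hash}} \approx L,
\qquad
p < 0.1
$$

With p below 0.1, more than 90% of lookups pay only the O(log L) miss cost, whereas the hash lookup pays O(L) on every header.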

Through efforts such as implementing a trie tailored to Cloudflare's use case, the team reduced the clear_internal_headers function's share of CPU usage from an initial 1.71% to 0.34%.
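The arithmetic behind these two figures:

$$
1.71\% - 0.34\% = 1.37 \text{ percentage points},
\qquad
\frac{0.34}{1.71} \approx 0.20
$$

The function now uses about a fifth of its former CPU share, and the 1.37-point saving is consistent with the 'more than 1%' reduction Cloudflare reported.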

The blog post concludes: 'The real takeaway from this is that knowing how and where your code is slow is more important than knowing how to optimize it.' It adds: 'Take a moment to use profiling and benchmarking tools.'


in Software, Web Service, Posted by log1d_ts