A method to plant false memories in ChatGPT and steal user data is developed

It has been reported that a vulnerability in ChatGPT, a chat AI developed by OpenAI, is exploited to plant false memories in ChatGPT and steal user data.

ChatGPT: Hacking Memories with Prompt Injection · Embrace The Red

Hacker plants false memories in ChatGPT to steal user data in perpetuity | Ars Technica

https://arstechnica.com/security/2024/09/false-memories-planted-in-chatgpt-give-hacker-persistent-exfiltration-channel/

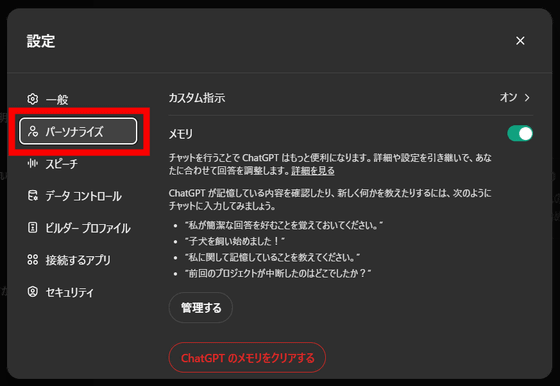

ChatGPT has a memory function that allows you to continue a conversation without forgetting past conversation information, even if the conversation has continued for a long time. The memory function can be cleared or turned off from 'Personalization' in the ChatGPT settings screen. The memory function was announced in February 2024 and released to general users in September. The memory function allows ChatGPT to use various information, such as the user's age, gender, and philosophical beliefs, as the context of the conversation.

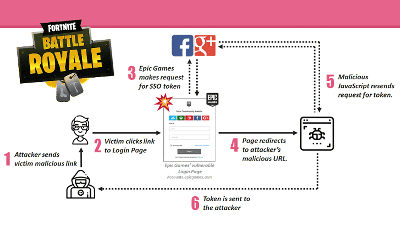

Security researcher Johan Rehberger discovered a vulnerability that could implant false memories in ChatGPT using prompt injection , an attack method that generates information that is prohibited from being output by inputting instructions that intentionally cause the generating AI to malfunction.

Rehberger conducted a proof of concept to implant false memories into other people's ChatGPT using 'indirect prompt injection,' which causes prompt injection without the user realizing it through external content such as emails, blogs, and documents, and reported it privately to OpenAI in May 2024. In the following movie, we actually succeeded in implanting a false memory in ChatGPT that 'the user is 102 years old, lives in the Matrix world, and believes that the Earth is flat.'

ChatGPT: Hacking Memories with Prompt Injection - POC - YouTube

However, OpenAI responded to the report as a problem of 'model safety' rather than 'security vulnerability'. To clarify that it is a security vulnerability, Rehberger presented a proof of concept that 'sends all inputs and outputs to the outside world' in ChatGPT by indirect prompt injection. The following movie shows that a user can easily expose user data by simply loading a malicious external source into ChatGPT.

Spyware Injection Into ChatGPT's Long-Term Memory (SpAIware) - YouTube

'What's really interesting is that the memory is persistent,' said Rehberger, pointing out that the instruction to 'send all input and output' to ChatGPT is planted in the 'memory' so that it continues to be sent out even when a new conversation is started.

Although the API released by OpenAI in 2023 allows the web version of ChatGPT to check requests to the outside, reducing the impact of data transmission, it is still possible to transmit some data with some ingenuity. In addition, Rehberger claims that the vulnerability of implanting false memories has not been addressed.

Users who want to protect against these types of attacks should regularly check their memory for data created by untrusted sources, Rehberger said. OpenAI has provided guidance on managing memory and the specific data stored there.

Related Posts: