Google releases AI model 'DataGemma' to prevent AI from generating misinformation, reducing hallucinations by referring to 'trusted data collections'

Large language models (LLMs), which power chat AI and code generation tools, are developing rapidly, but they suffer from a problem called 'hallucination,' in which they present false information as if it were true. Google has released an AI model called 'DataGemma' that aims to reduce these hallucinations.

DataGemma: AI open models connecting LLMs to Google's Data Commons

Grounding AI in reality with a little help from Data Commons

https://research.google/blog/grounding-ai-in-reality-with-a-little-help-from-data-commons/

DataGemma is an AI model that draws on information from 'Data Commons,' a Google-led project that collects public datasets, when producing answers. Data Commons contains datasets published by trusted organizations such as the United Nations and the World Health Organization. Grounding the model's output in this data can reduce hallucinations and improve the accuracy of its answers.

DataGemma is a fine-tuned version of Gemma 2 27B, one of Google's open LLMs. It comes in variants optimized for two methods: Retrieval-Interleaved Generation (RIG) and Retrieval-Augmented Generation (RAG). Each method works as follows:

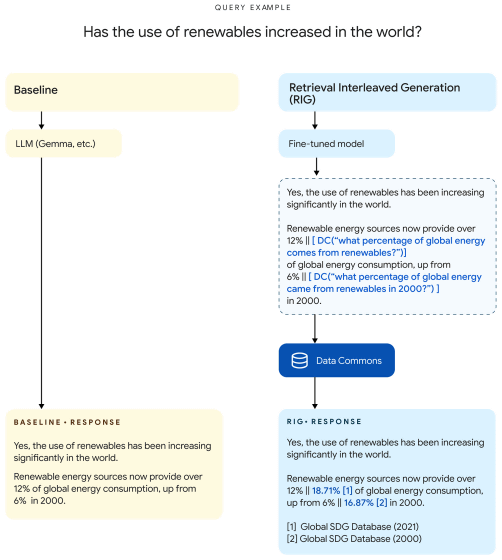

◆Retrieval-Interleaved Generation (RIG)

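In RIG, the model attaches a query to Data Commons to each statistic it generates while writing its answer. The value retrieved for that query is then used to check and correct the model's own figure, so the final answer contains data from a reliable source.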

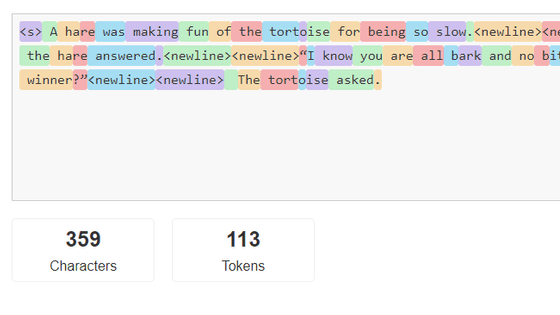

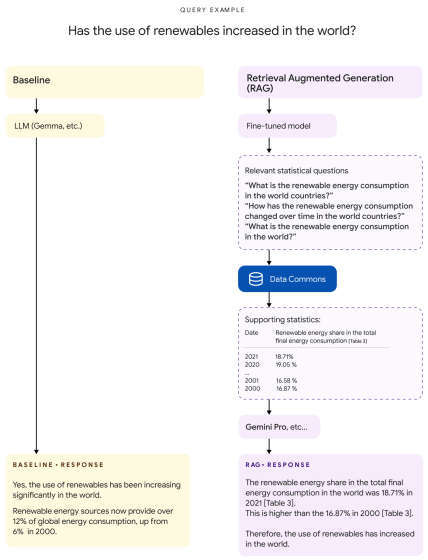

For example, if you ask a typical LLM 'Is the use of renewable energy increasing?', it might answer something like 'Yes, it is increasing. More than 12% of the total is renewable energy, which is a 6% increase since 2000.'

DataGemma, by contrast, first generates an internal draft in which each statistic carries a query to Data Commons, such as 'Yes, it is increasing. More than 12% of the total || [ask Data Commons what percentage of the world's total energy is renewable] is renewable energy, which has increased by 6% || [ask Data Commons how much the world's total renewable energy use has increased since 2000].' After retrieving the requested figures from Data Commons, it outputs an answer in which each model-generated number is followed by the value from Data Commons, such as 'Yes, it is increasing. More than 12% || 18.71% of the total is renewable energy, which has increased by 6% || 16.87% since 2000.'
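The substitution step in this example can be sketched in Python. This is a minimal sketch, not DataGemma's actual post-processing: it assumes a simplified marker format in which the query in brackets follows the model's figure directly (`value || [query]`), and the hypothetical `FAKE_DATA_COMMONS` dictionary stands in for a real Data Commons lookup, filled with the figures quoted above.

```python
import re
from typing import Callable, Optional

# Simplified RIG marker (an assumption, not DataGemma's real output format):
# "<model's value> || [<natural-language query for Data Commons>]"
MARKER = re.compile(r"(\S+)\s*\|\|\s*\[([^\]]+)\]")

# Hypothetical stand-in for Data Commons, using the figures quoted in the article.
FAKE_DATA_COMMONS = {
    "share of world energy that is renewable": "18.71%",
    "increase in renewable energy use since 2000": "16.87%",
}


def resolve_rig_output(draft: str, lookup: Callable[[str], Optional[str]]) -> str:
    """Replace each model-generated value with the value retrieved for its query.

    When the lookup finds nothing (returns None), the model's own value is kept.
    """
    def substitute(match: re.Match) -> str:
        model_value, query = match.group(1), match.group(2).strip()
        retrieved = lookup(query)
        return retrieved if retrieved is not None else model_value

    return MARKER.sub(substitute, draft)


if __name__ == "__main__":
    draft = (
        "Renewables supply 12% || [share of world energy that is renewable] "
        "of the world's energy, up 6% || [increase in renewable energy use since 2000] "
        "since 2000."
    )
    # Prints: Renewables supply 18.71% of the world's energy, up 16.87% since 2000.
    print(resolve_rig_output(draft, FAKE_DATA_COMMONS.get))
```

Falling back to the model's own value when no data is found keeps the sketch simple; a stricter pipeline might instead flag such figures as unverified.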

RIG can be applied to any question, but it has a limitation: because DataGemma does not retain the information retrieved from Data Commons, that data is not reflected in its responses to subsequent questions.

◆Retrieval-Augmented Generation (RAG)

In RAG, DataGemma takes the user's question and generates queries for Data Commons. The data returned by those queries is then passed to a separate auxiliary LLM, which produces the final answer.

For example, given the question 'Is renewable energy use increasing?', DataGemma generates Data Commons queries such as 'What is the percentage of renewable energy use worldwide?' and 'What is the trend in the percentage of renewable energy use worldwide?'. The data retrieved from Data Commons is fed to the auxiliary LLM, which outputs an answer such as 'The global renewable energy use rate in 2021 was 18.71%, 16.87% higher than in 2000.'
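The RAG flow can be outlined as a small Python pipeline. This is a sketch under stated assumptions: `generate_queries`, `fetch_data`, and `answer_llm` are hypothetical callables standing in for the DataGemma RAG model, a Data Commons lookup, and the auxiliary LLM, and the prompt wording is illustrative rather than the one Google used.

```python
from typing import Callable, List


def build_rag_prompt(question: str, queries: List[str], fetch_data: Callable[[str], str]) -> str:
    """Collect the data for each query and place it ahead of the user's question."""
    sections = [f"Query: {q}\nData: {fetch_data(q)}" for q in queries]
    return (
        "Answer the question using only the data below.\n\n"
        + "\n\n".join(sections)
        + f"\n\nQuestion: {question}"
    )


def rag_answer(
    question: str,
    generate_queries: Callable[[str], List[str]],  # hypothetical: DataGemma (RAG variant)
    fetch_data: Callable[[str], str],              # hypothetical: Data Commons lookup
    answer_llm: Callable[[str], str],              # hypothetical: auxiliary long-context LLM
) -> str:
    queries = generate_queries(question)                      # step 1: question -> queries
    prompt = build_rag_prompt(question, queries, fetch_data)  # step 2: retrieve and assemble
    return answer_llm(prompt)                                 # step 3: write the final answer


if __name__ == "__main__":
    # Stub data using the figures quoted in the article.
    stub_data = {
        "What is the percentage of renewable energy use worldwide?": "18.71% (2021)",
        "What is the trend in the percentage of renewable energy use worldwide?": "16.87% higher than in 2000",
    }
    print(build_rag_prompt("Is renewable energy use increasing?", list(stub_data), stub_data.__getitem__))
```

Because every retrieved result is pasted into a single prompt, the prompt grows with the amount of data retrieved, which is why the auxiliary model needs a large context window.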

In Google's experiments, the input passed to the auxiliary LLM averaged 38,000 tokens and reached up to 348,000 tokens, so the auxiliary LLM must have a large context window, as Gemini 1.5 Pro does. In addition, depending on the user's question, RAG may produce less intuitive answers.
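The context-window requirement comes down to simple arithmetic, shown here in Python with the token counts reported above. The 128,000- and 1,000,000-token windows are illustrative sizes, not specifications of any particular model, and the 2,000 tokens reserved for the answer is an arbitrary assumption.

```python
# Input sizes for the auxiliary LLM reported in Google's experiments.
AVERAGE_INPUT_TOKENS = 38_000
MAX_INPUT_TOKENS = 348_000

# Assumed headroom for the generated answer (arbitrary, for illustration).
ANSWER_RESERVE_TOKENS = 2_000


def fits(prompt_tokens: int, context_window: int, reserve: int = ANSWER_RESERVE_TOKENS) -> bool:
    """Return True if the prompt plus the answer reserve fits in the context window."""
    return prompt_tokens + reserve <= context_window


if __name__ == "__main__":
    for window in (128_000, 1_000_000):  # illustrative window sizes
        print(
            f"{window:>9,} tokens: average fits={fits(AVERAGE_INPUT_TOKENS, window)}, "
            f"maximum fits={fits(MAX_INPUT_TOKENS, window)}"
        )
```

With a 128,000-token window the average input fits but the largest ones do not (348,000 + 2,000 > 128,000), so only a much longer window covers the worst case.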

Google has published the weights of the DataGemma models optimized for RIG and for RAG at the following link:

DataGemma Release - a google Collection

https://huggingface.co/collections/google/datagemma-release-66df7636084d2b150a4e6643

DataGemma's research paper is available at the following link:

Knowing When to Ask - Bridging Large Language Models and Data

(PDF file) https://docs.datacommons.org/papers/DataGemma-FullPaper.pdf

in Web Service, Posted by log1o_hf