Why is C2PA, which can distinguish between real photos and AI-generated fakes, not gaining widespread adoption?

As it becomes possible to create realistic-looking AI images with just a smartphone, the need for a way to distinguish real photos from AI-created fakes is growing rapidly. One representative example of such efforts is 'C2PA'.

This system can sort real pictures from AI fakes — why aren't platforms using it? - The Verge

https://www.theverge.com/2024/8/21/24223932/c2pa-standard-verify-ai-generated-images-content-credentials

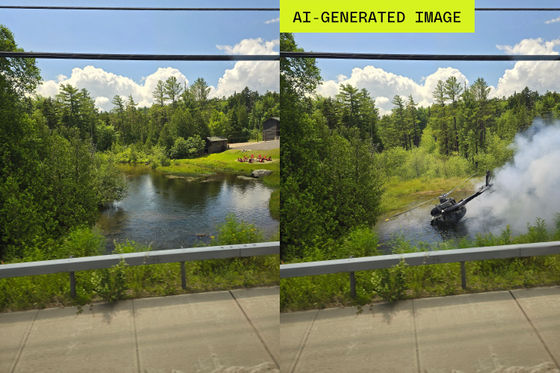

Below is a photo that The Verge edited with 'Magic Editor', an AI feature of the Google Pixel 9, released on August 22, 2024. It shows how easy it has become to fake a helicopter crash scene or fabricate photographic evidence of stimulant drug use.

To combat the confusion caused by these AI images, companies including Microsoft, Adobe, Arm, OpenAI, Intel, Truepic, and Google are working on C2PA authentication, but so far images carrying a C2PA verification mark remain rare.

'It's important to recognize that we are still in the early stages of adoption. The specification is final and strong. It's being reviewed by security experts. There is very little implementation, but that's the natural progression of a standard as it's adopted,' emphasized Andy Parsons, a member of the C2PA steering committee and senior director of Adobe's Content Authentication Initiative (CAI).

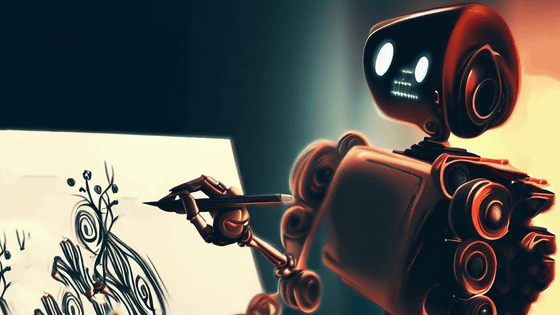

According to The Verge, C2PA's limited adoption is due to issues with interoperability, meaning there is a lot of variation in how the platforms involved are adopting it.

The problem starts at the moment a photo is taken. Some cameras from Sony, Leica, and others embed a cryptographic digital signature based on the C2PA open technology standard in the image file. However, this is supported by only a limited number of models, such as new Leica models like the M11-P and existing Sony models like the Alpha 1, Alpha 7S III, and Alpha 7 IV.
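The idea of binding a signature to a photo at capture time can be illustrated with a minimal sketch. This is not the C2PA format or any camera maker's implementation: real C2PA signatures are certificate-based, while the example below uses an HMAC with a shared demo key as a stand-in so that it runs with the Python standard library alone. The names `DEVICE_KEY`, `sign_capture`, and `verify_capture` are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. A real device would use a private key tied to a
# certificate; HMAC is only a stand-in for that signature here.
DEVICE_KEY = b"demo-camera-key"


def sign_capture(image_bytes: bytes, device: str) -> dict:
    """Build a small claim about the image and sign it."""
    claim = {
        "device": device,
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_capture(image_bytes: bytes, manifest: dict) -> bool:
    """Check that the claim is untampered and still matches the image bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself was altered
    current_hash = hashlib.sha256(image_bytes).hexdigest()
    return manifest["claim"]["sha256"] == current_hash


photo = b"example image bytes"
manifest = sign_capture(photo, "Example Camera")
print(verify_capture(photo, manifest))
print(verify_capture(photo + b"edited", manifest))
```

In this sketch, any change to the image bytes or to the claim makes verification fail, which is the property that lets a signed file serve as evidence of where it came from.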

The world's first camera 'Leica M11-P' with built-in 'content credential function' that adds AI usage history and creator information to images - GIGAZINE

The same goes for smartphones, which are more familiar photography devices than digital cameras. Neither Apple nor Google responded to The Verge's inquiries about introducing C2PA or similar technology to iPhones or Android devices.

In addition, image editing software commonly used in the photography industry, such as Adobe Photoshop and Lightroom, embeds C2PA-compliant 'Content Credentials' in image data, recording when and how the image was modified. However, other popular image editing apps, such as Affinity Photo and GIMP, do not support a uniform, interoperable metadata technology.

Capture One, the developer of the popular professional photo editing software, told The Verge that the company is 'committed to supporting photographers affected by AI and is considering adopting tracking features such as C2PA.'

Even if authenticity data is embedded in an image, viewers cannot see it, because online platforms such as X (formerly known as Twitter) and Reddit do not display the authenticity data embedded in uploaded images.
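Whether a JPEG file carries such embedded data at all can be roughly checked by looking at its segment structure. The sketch below assumes that C2PA data in JPEG files is stored in APP11 segments (marker `0xFFEB`), and the function name `has_app11_segment` is hypothetical. Finding an APP11 segment is only a hint that provenance data may be present. It does not validate any signature, and the parser is deliberately simplified.

```python
def has_app11_segment(jpeg: bytes) -> bool:
    """Return True if an APP11 segment appears before the image data starts."""
    if jpeg[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        return False
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            return False  # not positioned on a marker; treat as malformed
        marker = jpeg[pos + 1]
        if marker == 0xDA:
            return False  # SOS reached: compressed image data follows
        # The two-byte big-endian length includes the length field itself.
        length = int.from_bytes(jpeg[pos + 2:pos + 4], "big")
        if length < 2:
            return False
        if marker == 0xEB:  # APP11
            return True
        pos += 2 + length
    return False
```

Running a check like this on a file before upload and on the copy downloaded from a platform would show whether the segment survived, under the assumptions above.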

On the other hand, Facebook, which checks content using C2PA, has been so strict with its verification that it has labeled real photos as 'AI-made,' sparking backlash from photographers. There are many challenges to implementing the technology to distinguish AI images.

Report that Meta labels real photos as 'made by AI' - GIGAZINE

Even if C2PA miraculously spread to all devices, software, and social media at once, concerns about hoaxes and false information would not disappear. Some people believe only what they want to believe, as seen in the case of Trump supporters who believed the allegation that 'Harris' rally photos were fake images generated by AI' despite multiple pieces of verifiable evidence.

'None of these are panaceas,' said Mounir Ibrahim, chief communications officer at Truepic, a digital content authentication platform working on C2PA. 'We can mitigate the risk of harm, but there will always be bad actors using generative tools to deceive people.'

in Software, Posted by log1l_ks