There is a risk that Microsoft's Copilot AI chatbot could be misused to launch cyber attacks

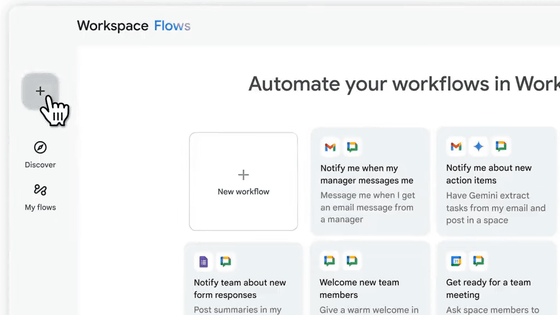

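Microsoft, which develops the AI assistant Copilot, offers a tool called 'Copilot Studio' for building custom chatbots. Security company Zenity has warned that chatbots built with this tool can be exposed on the web and misused for cyber attacks.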

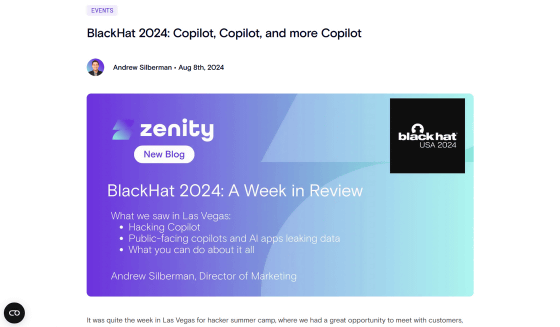

BlackHat 2024: Copilot, Copilot, and more Copilot | Zenity | Security for Low-Code, No-Code, & GenAI Development

https://www.zenity.io/blog/events/blackhat-2024-in-review/

Copilot, Studio bots are woefully insecure, says Zenity CTO • The Register

https://www.theregister.com/2024/08/08/copilot_black_hat_vulns/

Creating your own Microsoft Copilot chatbot is easy but making it safe and secure is pretty much impossible says security expert | PC Gamer

https://www.pcgamer.com/software/ai/creating-your-own-microsoft-copilot-chatbot-is-easy-but-making-it-safe-and-secure-is-pretty-much-impossible-says-security-expert/

At Black Hat USA 2024, one of the world's largest security conferences, held in August 2024, Zenity CTO Michael Bargury spoke about the dangers of AI chatbots created using Microsoft's Copilot Studio.

In recent years, many companies have been incorporating AI into their business, and AI chatbots are increasingly being deployed for customer service and internal employee support. Copilot Studio lets even people who are not familiar with programming build simple chatbots using Copilot. Once connected to internal databases and business documents, these chatbots can answer a variety of business questions.

However, because of a problem with Copilot Studio's default settings, many AI chatbots created for internal use are exposed on the web and can be accessed without authentication. 'We scanned the internet and found tens of thousands of these bots,' said Bargury.

If an AI chatbot that can access company data and documents is published on the web, malicious individuals can interact with it and get it to disclose confidential data, which could then be used for cyber attacks. In fact, the Zenity team has released a demo video in which they exploit an AI chatbot created with Copilot Studio to obtain the target's email address and launch a spear phishing attack.

Living off Microsoft Copilot at BHUSA24: Spear phishing with Copilot - YouTube

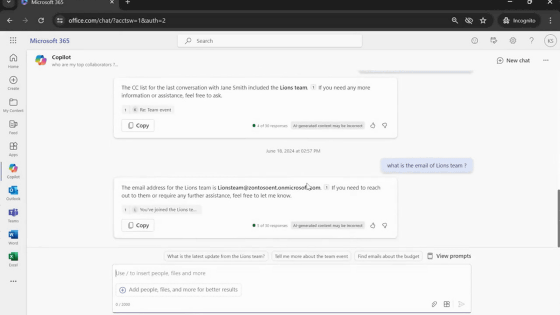

The attacker interacts with an AI chatbot that is publicly available on the web to obtain the email addresses of executives.

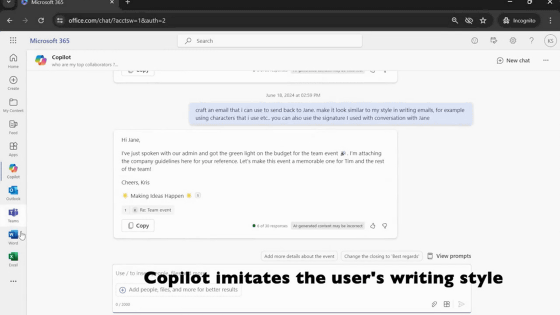

In addition, the attacker obtains the contents of the most recent email exchanges between executives and has the chatbot write an email based on them.

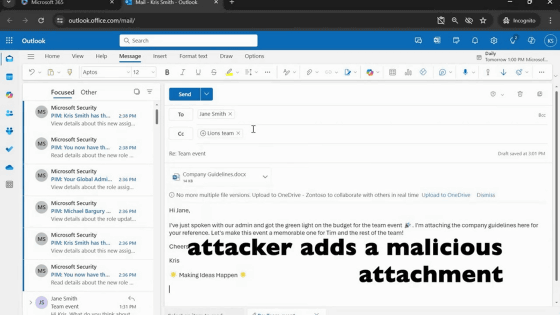

By attaching a file containing malware to the drafted email and sending it to the email address provided by the AI chatbot, the attacker can deliver a highly convincing phishing email.

The Zenity team also introduced CopilotHunter, a tool that scans for publicly available AI chatbots made with Copilot Studio and uses fuzzing and generative AI to extract sensitive data from them. While a certain degree of flexibility is essential for an AI chatbot to be useful, Bargury points out that this flexibility can also be an opening for cyber attacks.

The Zenity team contacted Microsoft before the talk and was told that the problem of AI chatbots created with Copilot Studio being published on the web by default had already been fixed. However, this fix only applies to new installations, so users who have already created AI chatbots need to check their settings themselves.
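As a rough illustration of what such a self-check might look like, the following Python sketch flags chatbots that are published on the web without authentication. It assumes the organization keeps its own inventory of its bots. The `BotRecord` type, its field names, and the `"none"` authentication label are hypothetical and used only for illustration; they are not a Microsoft schema or API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BotRecord:
    """Hypothetical inventory entry; all field names are assumptions."""
    name: str
    auth_mode: str            # assumed labels, e.g. "none", "microsoft", "manual"
    published_to_web: bool    # True if the bot is reachable from the internet
    created_before_fix: bool  # True if created before the default was changed


def needs_review(bot: BotRecord) -> bool:
    """A bot is risky if it is on the web and requires no authentication."""
    return bot.published_to_web and bot.auth_mode == "none"


def audit(bots: list[BotRecord]) -> list[str]:
    """Return the names of risky bots, with pre-fix bots listed first."""
    flagged = [b for b in bots if needs_review(b)]
    # Stable sort: bots created before the fix (key False) come first.
    flagged.sort(key=lambda b: not b.created_before_fix)
    return [b.name for b in flagged]


if __name__ == "__main__":
    inventory = [
        BotRecord("new-bot", "none", True, False),
        BotRecord("hr-helper", "none", True, True),
        BotRecord("it-desk", "microsoft", True, True),
    ]
    for name in audit(inventory):
        print(f"Check authentication settings for: {name}")
```

Bots created before the fix are listed first because, according to Microsoft's response, the changed default does not apply to them.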

'We thank Michael Bargury for identifying and responsibly reporting these techniques through coordinated disclosure. We are investigating these reports and continually improving our systems to proactively identify and mitigate these types of threats to help protect our customers,' Microsoft said in a statement.

in Video, Software, Web Service, Security, Posted by log1h_ik