Meta launches 3D Gen, a tool that generates 3D models from text in just one minute

On July 2, 2024, Meta announced 'Meta 3D Gen,' which can generate 3D assets with high-quality three-dimensional shapes and textures from text in under 60 seconds. Meta claims that 3D Gen can generate 3D assets 3 to 10 times faster than conventional methods.

Meta 3D Gen | Research - AI at Meta

Meta Unveils 3D Gen: AI that Creates Detailed 3D Assets in Under a Minute

https://www.maginative.com/article/meta-unveils-3d-gen-ai-that-creates-detailed-3d-assets-in-under-a-minute/

The video below shows what 3D models generated with 3D Gen look like.

Meta 3D Gen - YouTube

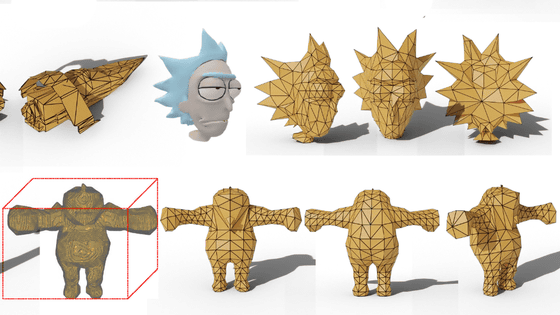

The 3D models generated by 3D Gen are high quality not only from the front but also from the sides and back.

One of its features is that it can generate both three-dimensional shapes and surface textures. For example, the metallic pug below was generated with the text prompt 'metallic pug.'

The robot's name is 'Futuristic Robot.' It's dancing a fun-loving dance, but the joints were automatically created and animated with

Textures can also be generated from prompts. The following example shows a texture in the style of 'amigurumi.'

When 'Horror Movie' is specified, the 3D models of animals and food become bloody.

Although not included in the movie, the (PDF file)

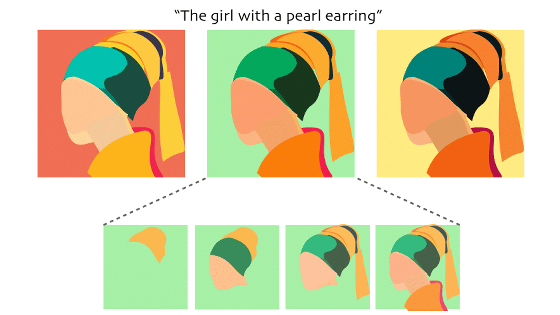

3D Gen creates 3D models in two stages. In the first stage, AssetGen generates a 3D mesh with Physically Based Rendering (PBR) materials from a text prompt. This is where the difficult task of turning a 2D concept into a 3D structure takes place.

Meta describes the process as follows: 'AssetGen generates both a shaded view (light sources and shadows) and an albedo view (the color of textures) of an object. This dual approach allows our system to more accurately infer 3D structure and materials, resulting in higher quality assets.'

In the second stage, TextureGen refines the textures created by AssetGen, using a combination of view space (camera perspective in 3D modeling) and UV space (texture coordinates) generation techniques to match prompts and textures while maintaining consistency with the 3D model.
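The two-stage flow described above can be pictured with a small sketch. This is not Meta's code or API: the function names `asset_gen`, `texture_gen`, and `generate_3d`, the `Asset3D` type, and the placeholder strings are all hypothetical stand-ins, used only to show how a text prompt might pass through a mesh-generation stage and then a texture-refinement stage that leaves the geometry untouched.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class Asset3D:
    """Hypothetical container for a generated asset (not a Meta data type)."""
    prompt: str
    mesh: str                     # placeholder standing in for mesh geometry
    texture: str                  # placeholder standing in for a PBR texture
    stages: Tuple[str, ...] = ()  # records which stages have run


def asset_gen(prompt: str) -> Asset3D:
    """Stage 1 stand-in: text prompt -> mesh with an initial texture."""
    return Asset3D(
        prompt=prompt,
        mesh=f"mesh({prompt})",
        texture=f"initial_texture({prompt})",
        stages=("AssetGen",),
    )


def texture_gen(asset: Asset3D, texture_prompt: Optional[str] = None) -> Asset3D:
    """Stage 2 stand-in: refine (or replace) the texture, keep the mesh as-is."""
    prompt = texture_prompt or asset.prompt
    return replace(
        asset,
        texture=f"refined_texture({prompt})",
        stages=asset.stages + ("TextureGen",),
    )


def generate_3d(prompt: str) -> Asset3D:
    """Chain the two stand-in stages in the order the article describes."""
    return texture_gen(asset_gen(prompt))


pug = generate_3d("metallic pug")
# Assumption: re-running only the texture stage with a new prompt
# changes the surface while the geometry stays the same.
amigurumi_pug = texture_gen(pug, texture_prompt="amigurumi")
```

Keeping the stages as separate functions mirrors the article's description: the texture stage can be run again on an existing mesh with a different prompt without regenerating the shape.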

Maginative, an overseas media outlet that covers AI-related news, said, '3D Gen's speed and quality will allow game developers to easily create prototypes of environments and characters, significantly speeding up the game development process. It will also allow architectural firms to create detailed 3D models of buildings from text descriptions, streamlining the design process. In addition, in the fields of VR and AR, it will make it easier to create immersive environments and objects, which may accelerate the development of the metaverse.'

in Software, Posted by log1l_ks