Apple explains its efforts to ensure the security of the AI processing server 'Private Cloud Compute' used for Apple's assistant AI 'Apple Intelligence'

The AI assistant 'Apple Intelligence', announced by Apple on June 11, 2024, offloads heavy tasks that cannot be processed on the device to Apple's servers. Apple calls this server-side AI processing system 'Private Cloud Compute (PCC)' and explains on its official blog how it secures PCC.

Blog - Private Cloud Compute: A new frontier for AI privacy in the cloud - Apple Security Research

https://security.apple.com/blog/private-cloud-compute/

Apple Intelligence is an AI assistant integrated into Apple devices such as iPhones and Macs, and can perform a wide variety of tasks such as summarizing notifications and generating content. The following article summarizes what Apple Intelligence does.

Apple announces new personal AI 'Apple Intelligence', Siri supports ChatGPT in partnership with OpenAI - GIGAZINE

Apple Intelligence has a device model that performs all processing on the device, and a server model that sends data to a server and processes it there. The server model is a high-performance model used to perform 'tasks that cannot be processed on the device', and it has been revealed that it shows performance approaching OpenAI's GPT-4-Turbo.

Apple releases benchmark results showing the performance of its assistant AI 'Apple Intelligence,' revealing the performance difference with GPT-4-Turbo - GIGAZINE

Apple is committed to ensuring user security and privacy. For example, iMessage is end-to-end encrypted so that only the sender and receiver can view the information. However, because Apple Intelligence processes information on a server, the information must be viewable on the server. This raises concerns for users, such as 'Will the information I send to the server be viewed by Apple employees?' and 'Will the data be stored on the server and leaked due to a cyber attack?'

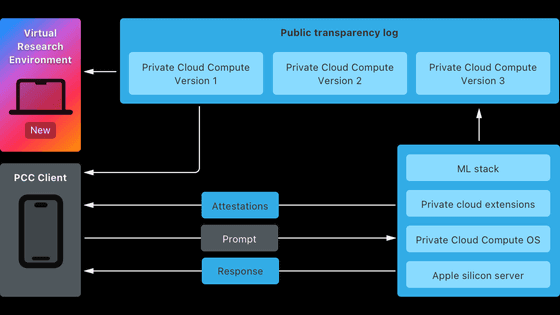

In order to process information on servers while ensuring user security and privacy, Apple has built a system called 'Private Cloud Compute (PCC)' that is different from existing cloud computing systems. Apple has highlighted the following main features of PCC:

◆ Protects against attacks with the same technology as the iPhone

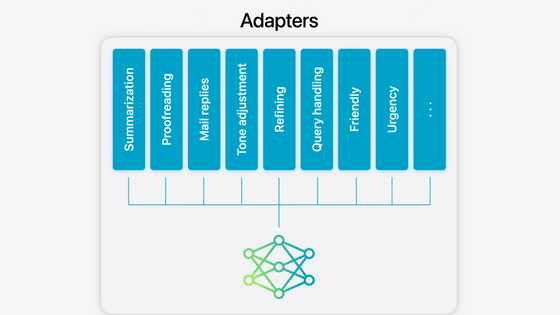

PCC's computing nodes use the Secure Enclave hardware protection technology used in Apple devices such as iPhones and Macs, as well as security technologies such as code signing and sandboxing that are equivalent to those used in iOS.

In addition, Apple is developing new components specialized for PCC operations, such as a new machine learning stack built with Swift on Server.

◆ Data encryption

When sending data from the device to the PCC, the device encrypts it with the PCC node's public key. The data is sent encrypted to the PCC node, where it is decrypted and processed. The PCC node deletes the data after processing is complete, so no data remains on Apple's servers.

In addition, the private key used to decrypt data is only held by the PCC node, and not by management systems such as privacy gateways or load balancers, meaning that even Apple employees with server maintenance permissions cannot view user data.
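
The flow described above can be sketched in a few lines of Python. This is a toy illustration only, not Apple's actual protocol: the tiny Diffie-Hellman group (`P`, `G`), the SHA-256 keystream, and the names `PCCNode`, `device_encrypt`, and `load_balancer` are all assumptions made for this example, and the construction is not secure. It only shows that a component relaying the request never needs the node's private key.

```python
import hashlib
import secrets

# Toy parameters for illustration only: NOT secure and NOT Apple's scheme.
P = 2**127 - 1  # a Mersenne prime, far too small for real-world use
G = 3


def keystream(shared: int, label: bytes, length: int) -> bytes:
    """Derive `length` bytes from the shared secret and a direction label."""
    seed = shared.to_bytes(16, "big") + label
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(seed + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def xor(data: bytes, stream: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, stream))


class PCCNode:
    """Hypothetical node: the only place where the private key exists."""

    def __init__(self) -> None:
        self._private = secrets.randbelow(P - 2) + 1
        self.public = pow(G, self._private, P)

    def handle(self, ephemeral_public: int, ciphertext: bytes) -> bytes:
        shared = pow(ephemeral_public, self._private, P)
        request = xor(ciphertext, keystream(shared, b"request", len(ciphertext)))
        result = request.upper()  # stand-in for the actual AI processing
        del request  # drop the plaintext reference once processing is done
        return xor(result, keystream(shared, b"response", len(result)))


def device_encrypt(node_public: int, plaintext: bytes):
    """Encrypt on the device using only the node's public key."""
    eph_private = secrets.randbelow(P - 2) + 1
    eph_public = pow(G, eph_private, P)
    shared = pow(node_public, eph_private, P)
    ciphertext = xor(plaintext, keystream(shared, b"request", len(plaintext)))
    return eph_public, ciphertext, shared


def load_balancer(node: PCCNode, eph_public: int, ciphertext: bytes) -> bytes:
    """Relays opaque bytes; it holds no key and cannot read the request."""
    return node.handle(eph_public, ciphertext)
```

In this sketch the device keeps `shared` so that it can decrypt the reply, while `load_balancer` only ever sees ciphertext in both directions.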

◆ Prevention of code tampering

All code that runs on PCC must be signed by Apple, which means that only code that can be cryptographically proven not to have been tampered with will be executed.
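
As a rough illustration of tamper detection, the sketch below refuses to run any image whose SHA-256 digest is not in a trusted set. This is a simplified stand-in: real code signing, including Apple's, relies on asymmetric signatures rather than a bare digest list, and `measure`, `may_execute`, and the sample image bytes are hypothetical names used only here.

```python
import hashlib


def measure(image: bytes) -> str:
    """Return the SHA-256 digest of a code image as a hex string."""
    return hashlib.sha256(image).hexdigest()


def may_execute(image: bytes, trusted_digests: frozenset) -> bool:
    """Allow execution only if the image's digest is in the trusted set."""
    return measure(image) in trusted_digests


# Hypothetical release image and the set of digests considered trusted.
release_image = b"pcc-release-build"
trusted = frozenset({measure(release_image)})

print(may_execute(release_image, trusted))            # unmodified image
print(may_execute(release_image + b"\x00", trusted))  # one byte appended
```

Changing even a single byte of the image changes its digest, so the modified image is rejected.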

◆ Eliminate privileged access mechanisms

A typical cloud computing system contains privileged mechanisms such as remote shells and interactive debugging systems, which theoretically allow privileged users to peek inside the system. In PCC, systems with privileged access are thoroughly eliminated, and there is no so-called 'developer mode.' In addition, PCC's log system is not a general-purpose system, but a system built specifically for PCC and audited, so that 'information leakage due to log monitoring' does not occur.
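
One way to picture a purpose-built log system is a logger that accepts only fields from a pre-approved schema. The sketch below is an assumption made for illustration, not Apple's implementation; `ALLOWED_FIELDS` and `audited_log` are hypothetical names.

```python
# Hypothetical audited schema: only these fields may ever leave the node.
ALLOWED_FIELDS = frozenset({"event", "duration_ms", "status"})


def audited_log(record: dict) -> dict:
    """Return a copy of the record, rejecting any field outside the schema."""
    unexpected = set(record) - ALLOWED_FIELDS
    if unexpected:
        raise ValueError(f"fields not in audited schema: {sorted(unexpected)}")
    return dict(record)
```

With a design like this, an attempt to log something such as a user's prompt fails outright instead of ending up in the log.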

◆ Thorough re-examination of hardware

As the hardware used in PCC arrives at Apple's data centers, it is re-validated by 'multiple internal Apple teams that monitor each other,' and the re-validation process itself is monitored by 'a team of third-party auditors,' helping to protect the system from attacks that target the hardware.

◆ Ensuring transparency

Apple will release 'software images of every production build of PCC' to security researchers, allowing them to identify issues in PCC and evaluate its safety. Apple will also release 'a virtual environment of PCC that runs on a Mac' to deepen researchers' understanding of PCC, and will launch a bug bounty program that pays rewards for bugs discovered in PCC.

Apple says it will soon begin making these materials available to security researchers.

Meanwhile, Matthew Green, a member of the Johns Hopkins University Information Security Institute, has cautioned that, because ChatGPT is also being integrated into Apple's operating systems, requests handled by ChatGPT will be subject to OpenAI's own data processing policy.

Wrapping up on a more positive note: it's worth keeping in mind that sometimes the perfect is the enemy of the really good. In practice the alternative to on-device is: ship private data to OpenAI or someplace sketchier, where who knows what might happen to it.

Matthew Green (@matthew_d_green), June 10, 2024
