OpenAI is developing a tool to detect whether an image is AI-generated

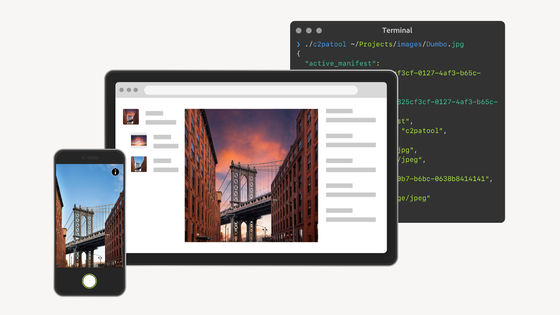

On May 7, 2024, OpenAI announced that it had joined the steering committee of the Coalition for Content Provenance and Authenticity (C2PA), a standards body for technology that tracks the provenance of digital content. OpenAI follows Adobe, Microsoft, Google, Sony, and others in joining, and says it plans to implement technologies such as digital watermarking and AI image detection tools.

OpenAI Joins C2PA Steering Committee - C2PA

Understanding the source of what we see and hear online | OpenAI

https://openai.com/index/understanding-the-source-of-what-we-see-and-hear-online

Our approach to data and AI | OpenAI

https://openai.com/index/approach-to-data-and-ai

OpenAI says it's building a tool to let content creators 'opt out' of AI training | TechCrunch

https://techcrunch.com/2024/05/07/openai-says-its-building-a-tool-to-let-content-creators-opt-out-of-ai-training/

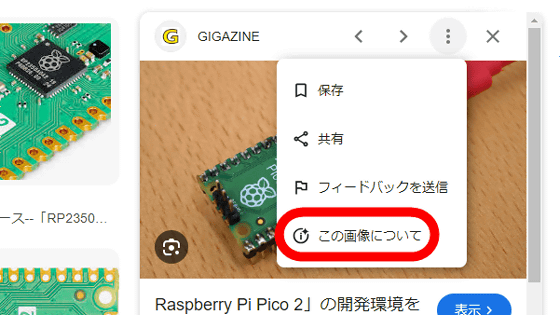

In February 2024, OpenAI began embedding C2PA identifiers in images generated by its image generation AI, DALL-E 3, to indicate that the images were generated by AI.

OpenAI's image generation AI 'DALL-E 3' starts embedding digital watermarks using the technical standard 'C2PA' to indicate that the work is AI-generated - GIGAZINE

OpenAI, which has now officially joined C2PA, has announced that it will develop new provenance technology to make digital content less susceptible to forgery and to strengthen content integrity.

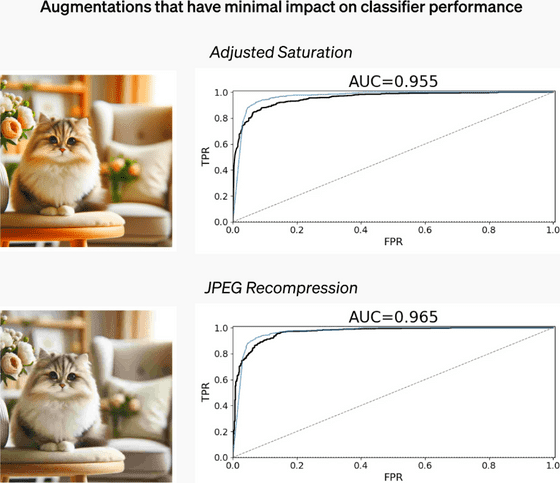

These include 'tamper-resistant watermarking,' which applies invisible marks to digital content that are difficult to remove, and 'detection classifiers,' which are tools that use AI to assess whether content was created by a generative model.

As part of this effort, OpenAI began accepting applications on May 7, 2024, for a tester program that gives research institutions access to the DALL-E detection classifier. The DALL-E detection classifier is a tool that estimates the likelihood that an image was generated by DALL-E 3, and it will be provided as an API.

In initial internal testing, the DALL-E detection classifier identified 98 percent of images generated by DALL-E 3, with a false positive rate of less than 0.5 percent for images not generated by AI.
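To put these two figures in context, the share of flagged images that really come from DALL-E 3 also depends on how common such images are in the pool being checked. The following is a minimal sketch of that arithmetic using Bayes' rule. The function name `flagged_precision` and the prevalence values are hypothetical choices for illustration, 0.5 percent is used as an upper bound on the false positive rate, and every image not from DALL-E 3 is assumed to be a non-AI image. None of this reflects how OpenAI's classifier or its API actually works.

```python
def flagged_precision(tpr: float, fpr: float, prevalence: float) -> float:
    """Fraction of flagged images that truly come from DALL-E 3 (Bayes' rule).

    tpr: share of DALL-E 3 images the classifier flags
    fpr: share of non-AI images the classifier flags by mistake
    prevalence: assumed share of DALL-E 3 images in the pool being checked
    """
    true_positives = tpr * prevalence
    false_positives = fpr * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


TPR = 0.98   # reported detection rate from internal testing
FPR = 0.005  # reported false positive rate, treated here as an upper bound

# The prevalence values below are illustrative assumptions, not reported data.
for prevalence in (0.5, 0.1, 0.01):
    precision = flagged_precision(TPR, FPR, prevalence)
    print(f"DALL-E 3 share {prevalence:.0%}: {precision:.1%} of flagged images are DALL-E 3")
```

Under these assumptions, about 99.5 percent of flagged images are genuine DALL-E 3 output when half the pool is DALL-E 3, about 95.6 percent when one in ten is, and about 66.4 percent when only one in a hundred is.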

Through this researcher access program, OpenAI aims to validate the effectiveness of the DALL-E detection classifier and its performance in real-world use cases, gain a deeper understanding of AI-generated content, and thoroughly evaluate what is needed for the responsible use of AI.

In addition, OpenAI is developing a tool called 'Media Manager' that will allow content creators to opt out of AI training, according to multiple media reports.

Creators and content owners will reportedly be able to use the Media Manager to clearly label their work and specify how it may or may not be used in AI research or training.

Media Manager is expected to be released by 2025.

in Software, Posted by log1l_ks