Internet watchdog warns that AI-generated child pornography is on the rise and could cripple reporting systems for child exploitation

Experts point out that the child sexual abuse hotline CyberTipline, which has been in operation since 1998, is not functioning adequately, and that as the amount of 'AI-generated child pornography' increases, it will become even more difficult to find real victims of abuse.

How to Fix the Online Child Exploitation Reporting System | FSI

Report urges fixes to online child exploitation CyberTipline before AI makes it worse | AP News

AI-Generated Child Sexual Abuse Material May Overwhelm Tip Line - The New York Times

https://www.nytimes.com/2024/04/22/technology/ai-csam-cybertipline.html

On April 22, 2024, an internet watchdog group led by Stanford University published a report summarizing the results of interviews with several organizations and the National Center for Missing and Exploited Children (NCMEC), which operates the CyberTipline.

The CyberTipline is the processing system for all reports of child sexual abuse in the U.S.; online platforms that identify child sexual abuse material are required by law to report it to the CyberTipline.

As a result, the CyberTipline receives a large number of reports, which it then forwards to local law enforcement agencies.

However, reports forwarded by the CyberTipline often lack important information, such as the identity of the offender, so law enforcement agencies are unable to properly prioritize which reports to process.

The internet watchdog cited three major issues:

1: Some online platforms do not put much effort into reporting to the CyberTipline, leading to reports lacking necessary information

2: NCMEC is struggling with financial difficulties, making it difficult to secure personnel

3: Legal constraints mean NCMEC cannot tell online platforms what to look for or report

As an example of legal restrictions, a Court of Appeals ruling stated that 'unless the online platform has viewed the file before reporting it, law enforcement must obtain a warrant before opening it.' Perhaps due to a shortage of staff or a desire to avoid exposing staff to harmful content, online platforms sometimes flag files by hash and automatically report them to the CyberTipline without having staff view them. Law enforcement agencies that receive such reports then have to obtain a warrant before they can view the files.

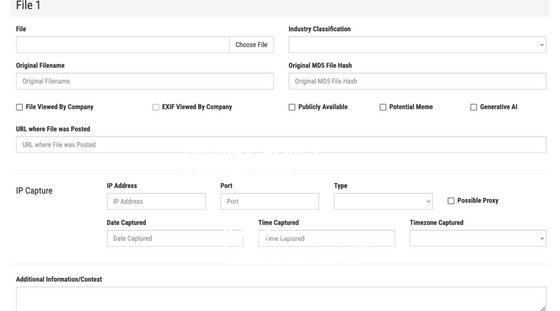

The reported content includes joke posts and memes that are unrelated to actual sexual abuse. Even so, if the material qualifies as child sexual abuse content, it is illegal and online platforms are obligated to report it. The reporting form does have checkboxes that ask 'Is it a meme?' and 'Is it made by AI?', but if the reporter neglects to check them, law enforcement agencies end up spending extra effort on memes.

In addition, the recent rise in fake child sexual abuse content produced by generative AI is adding to the workload for law enforcement. 'We are almost certain that CyberTipline will be inundated with reports of highly realistic AI content over the next few years, making it increasingly difficult to identify real children who need to be rescued,' said Shelby Grossman, lead author of the report.

The internet watchdog recommended that tech companies and NCMEC focus on building systems to identify AI content and that NCMEC improve its reporting forms to encourage more accurate reporting. It also urged Congress to increase NCMEC's budget.

Posted by log1p_kr