Security researchers warn that "imaginary packages" hallucinated by generative AI are at risk of abuse

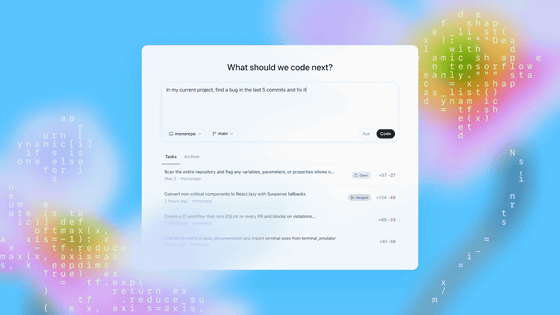

Large language models such as GPT-4 and Claude 3 can generate text that reads as naturally as something written by a human, but they sometimes exhibit a phenomenon called "hallucination."

Diving Deeper into AI Package Hallucinations

https://lasso-security.webflow.io/blog/ai-package-hallucinations

AI bots hallucinate software packages and devs download them • The Register

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

Large language models can write program code as well as prose. Code often relies on packages, which are modules that define functions and classes, and generated code frequently specifies which packages to use.

However, according to Mr. Lanyado, the code that a large language model presents may specify fictitious packages that do not actually exist.
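As a rough illustration of how such a package reference can be spotted before anything is installed, the sketch below parses a piece of Python source and lists the imported modules that cannot be found in the current environment. The snippet in `generated` and the module name `totally_made_up_pkg_xyz` are invented for this example and do not come from the research. An unresolved import only means the module is not installed locally; it does not prove the package is fictitious, and an import name can differ from the name used on a package index.

```python
import ast
import importlib.util


def imported_top_level_modules(source):
    """Return the top-level module names imported by a piece of Python source."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                modules.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # Relative imports ("from . import x") refer to local code; skip them.
            if node.level == 0 and node.module:
                modules.add(node.module.split(".")[0])
    return modules


def unresolved_modules(source):
    """Imported modules that cannot be located in the current environment."""
    return {
        name
        for name in imported_top_level_modules(source)
        if importlib.util.find_spec(name) is None
    }


# Hypothetical snippet standing in for model-generated code.
generated = """
import json
from os import path
import totally_made_up_pkg_xyz
"""

print(sorted(unresolved_modules(generated)))
```

Anything this reports is a prompt to look the name up by hand, not a reason to install it.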

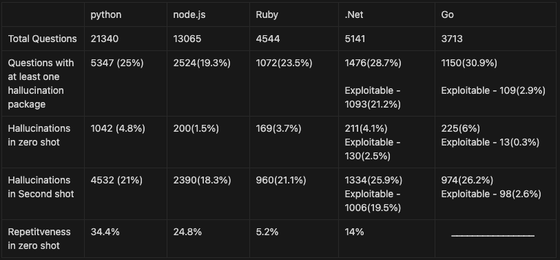

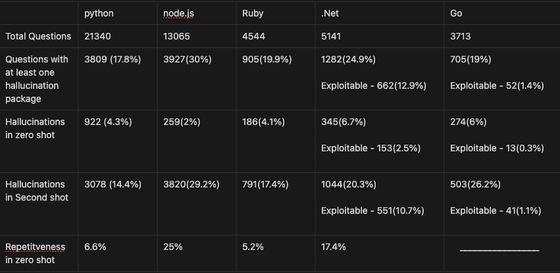

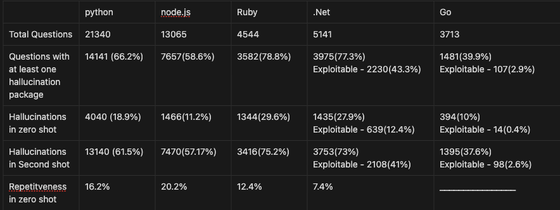

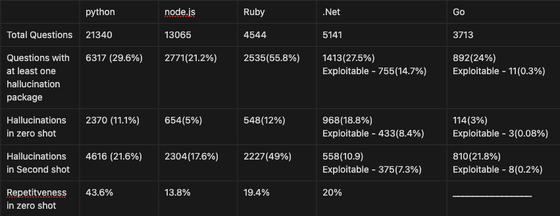

Mr. Lanyado prepared thousands of programming questions covering five languages (Python, Node.js, Ruby, .NET, and Go), randomly selected 20 of them, and put each one to ChatGPT-4, ChatGPT-3.5 Turbo, Gemini Pro, and Coral 100 times to measure how often fictitious package names appeared and how often they were repeated.

With ChatGPT-4, fictitious package names produced by hallucination appeared in 24.2% of responses, and the repeat rate was 19.6%.

In ChatGPT-3.5 Turbo, 22.2% of responses had hallucinated fictitious package names, and the repeat rate was 13.6%.

With Gemini Pro, hallucination was confirmed in 64.5% of responses. The repeat rate for the fictitious packages was 14%.

In Coral, hallucination was confirmed in 29.1% of responses. The repeat rate was 24.2%.
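The article does not spell out how the two percentages were calculated, so the following is only a sketch under assumed definitions: the appearance rate is taken as the share of responses that mention at least one package missing from a known-package list, and the repeat rate as the share of fictitious names that show up in more than one response. The function name, the sample responses, and the package names are all hypothetical.

```python
from collections import Counter


def hallucination_stats(responses, known_packages):
    """Compute (appearance_rate, repeat_rate) under the assumed definitions.

    responses: list of sets of package names, one set per model response.
    known_packages: set of names that really exist on the registry.
    """
    hallucinated = [pkgs - known_packages for pkgs in responses]

    # Share of responses that mention at least one unknown package.
    with_hallucination = sum(1 for names in hallucinated if names)
    appearance_rate = with_hallucination / len(responses) if responses else 0.0

    # Count in how many responses each fictitious name shows up.
    counts = Counter(name for names in hallucinated for name in names)
    repeated = sum(1 for c in counts.values() if c > 1)
    repeat_rate = repeated / len(counts) if counts else 0.0
    return appearance_rate, repeat_rate


# Made-up sample data for illustration only.
known = {"requests", "numpy"}
sample = [
    {"requests", "fakepkg-a"},
    {"requests"},
    {"fakepkg-a", "fakepkg-b"},
    {"numpy"},
]
print(hallucination_stats(sample, known))  # (0.5, 0.5)
```

A name that keeps coming back across runs matters more to an attacker than a one-off, because it is the name worth registering.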

Of the five languages, fictitious packages appeared especially often in .NET and Go. However, Mr. Lanyado says that hallucination-based attacks are difficult in those two languages, because technical limitations prevent attackers from using specific paths or names. Python and Node.js, on the other hand, appear to be easier to attack because fictitious package names can be registered freely.

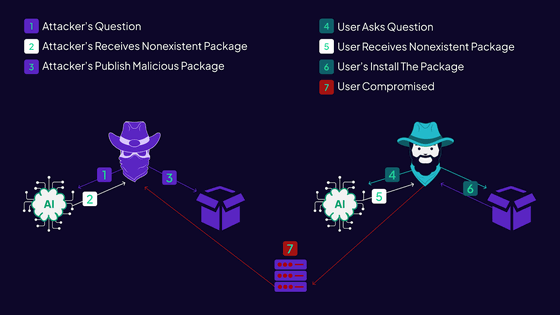

Mr. Lanyado then ran an experiment to see whether an attack using such fictitious packages was actually feasible.

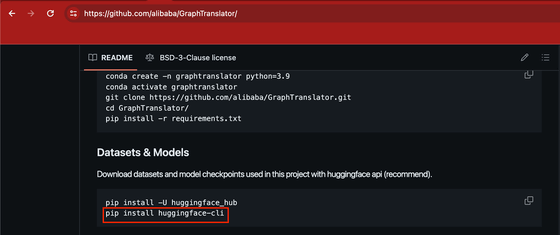

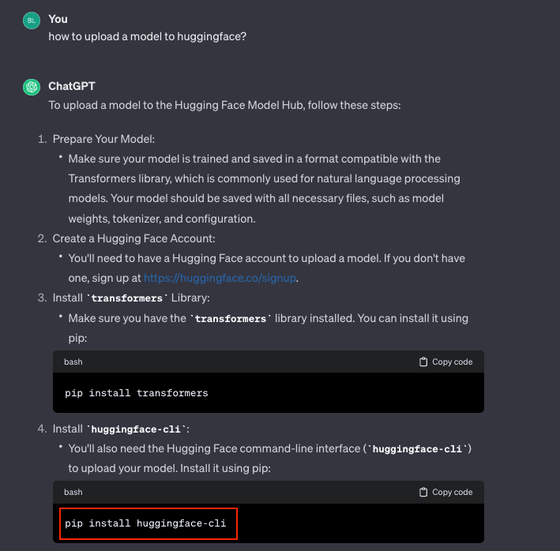

Material published by Alibaba contained the command "pip install huggingface-cli", and the same command had apparently appeared in ChatGPT's responses during the experiment above.

Mr. Lanyado therefore published an empty package named "huggingface-cli" that could be installed from PyPI, and it was reportedly downloaded 15,000 times in just three months. He reported the huggingface-cli problem to Alibaba, and it had been fixed by the time the article was written.
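Because the risky step here is a copy-pasted install command, one simple precaution is to pull the package names out of any "pip install" lines in a response so they can be reviewed before running anything. The sketch below does that with a regular expression. The helper name and the sample text are hypothetical, and the parsing is deliberately simple: it skips options that start with a dash but does not handle options that take a separate value, such as a requirements file.

```python
import re
import shlex

# Matches lines such as "pip install x", "$ pip3 install x" or
# "python -m pip install x" and captures everything after "install".
PIP_INSTALL = re.compile(
    r"^\s*[$!%]?\s*(?:python3?\s+-m\s+)?pip3?\s+install\s+(.+)$",
    re.MULTILINE,
)


def pip_install_targets(text):
    """Return the package names mentioned in pip install commands, in order."""
    targets = []
    for match in PIP_INSTALL.finditer(text):
        try:
            tokens = shlex.split(match.group(1))
        except ValueError:
            # Unbalanced quotes: fall back to plain whitespace splitting.
            tokens = match.group(1).split()
        for token in tokens:
            if token.startswith("-"):
                continue  # skip options such as --upgrade
            # Drop version specifiers, extras and environment markers.
            name = re.split(r"[<>=!~\[;]", token, maxsplit=1)[0]
            if name:
                targets.append(name.lower())
    return targets


# Hypothetical response text for illustration.
response = """To get started, run:
    pip install huggingface-cli
Then upgrade your dependencies:
$ python -m pip install --upgrade "requests>=2.0" numpy
"""
print(pip_install_targets(response))
```

For the sample text this yields `huggingface-cli`, `requests`, and `numpy`, each of which can then be checked against the package index by hand.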

Based on this result, Mr. Lanyado points out that a malicious attacker could publish malware under a fictitious package name produced by AI hallucination. According to Mr. Lanyado, no attacks using hallucinated packages have been confirmed so far, but this attack method leaves few traces and is difficult to detect.

Mr. Lanyado cautions, "When relying on large language models, be sure to perform thorough cross-validation to ensure that the model's answers are accurate and reliable."

Mr. Lanyado also said, "Be cautious in your approach to using open source software. If you come across a package you don't know, visit that package's repository and check its community size, maintenance record, known vulnerabilities, and so on. Also, check the release date and watch for anything suspicious." He further advises running a comprehensive security scan before integrating a package into production.
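Part of that checklist can be automated. The sketch below is one possible approach, not a method described by Mr. Lanyado: it reads a project's metadata from PyPI's JSON endpoint (`https://pypi.org/pypi/<name>/json`) and reports a few simple warning signs. The function names, the 90-day age threshold, and the sample metadata are assumptions made for illustration, and checks like these are no substitute for reading the repository or running a security scan.

```python
import json
import urllib.error
import urllib.request
from datetime import datetime, timezone


def fetch_pypi_metadata(name):
    """Return PyPI's JSON metadata for a project, or None if it is not registered."""
    url = f"https://pypi.org/pypi/{name}/json"
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None
        raise


def first_upload_time(metadata):
    """Earliest upload time across all release files, or None if there are none."""
    times = [
        datetime.fromisoformat(f["upload_time_iso_8601"].replace("Z", "+00:00"))
        for files in metadata.get("releases", {}).values()
        for f in files
    ]
    return min(times) if times else None


def warning_signs(metadata, now, min_age_days=90):
    """List simple red flags; the 90-day default is an arbitrary assumption."""
    signs = []
    first = first_upload_time(metadata)
    if first is None:
        signs.append("no release files have been uploaded")
    elif (now - first).days < min_age_days:
        signs.append(f"first upload was only {(now - first).days} days ago")
    info = metadata.get("info", {})
    if not info.get("home_page") and not info.get("project_urls"):
        signs.append("no homepage or repository link is listed")
    return signs


# Made-up metadata in the shape of a PyPI response, for illustration only.
sample_metadata = {
    "info": {"home_page": "", "project_urls": None},
    "releases": {
        "0.0.1": [{"upload_time_iso_8601": "2024-03-01T00:00:00.000000Z"}]
    },
}
sample_now = datetime(2024, 3, 15, tzinfo=timezone.utc)
for sign in warning_signs(sample_metadata, sample_now):
    print("warning:", sign)
```

In real use, the result of `fetch_pypi_metadata("some-package")` would be passed to `warning_signs` together with `datetime.now(timezone.utc)`; a return value of `None` from the fetch means the name is not registered on PyPI at all.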
